Migrating from NGINX Ingress to Envoy Gateway (Gateway API): behind the scenes

Following the end of maintenance of the Ingress NGINX project, we have been working behind the scenes to migrate our customers' clusters from Kubernetes Ingress + NGINX Ingress Controller to Gateway API + Envoy Gateway.

This article covers the full picture: why we're making this move, why we chose Envoy Gateway over other implementations, how we're rolling it out across 300+ clusters in four phases, and what changes for you as a Qovery customer, including the advanced settings that behave differently between NGINX and Envoy.

This kind of migration is only successful if it is boring: predictable, measurable, and easy to roll back.

This is not a “fun refactor.” It is work we have to do because:

- Ingress NGINX is going end-of-maintenance in March 2026.

- We want to move to the Kubernetes API that is meant to replace Ingress: Gateway API.

The main requirement on our side is simple to state and hard to execute: customers should not see downtime during the switch.

Context: why we had to move

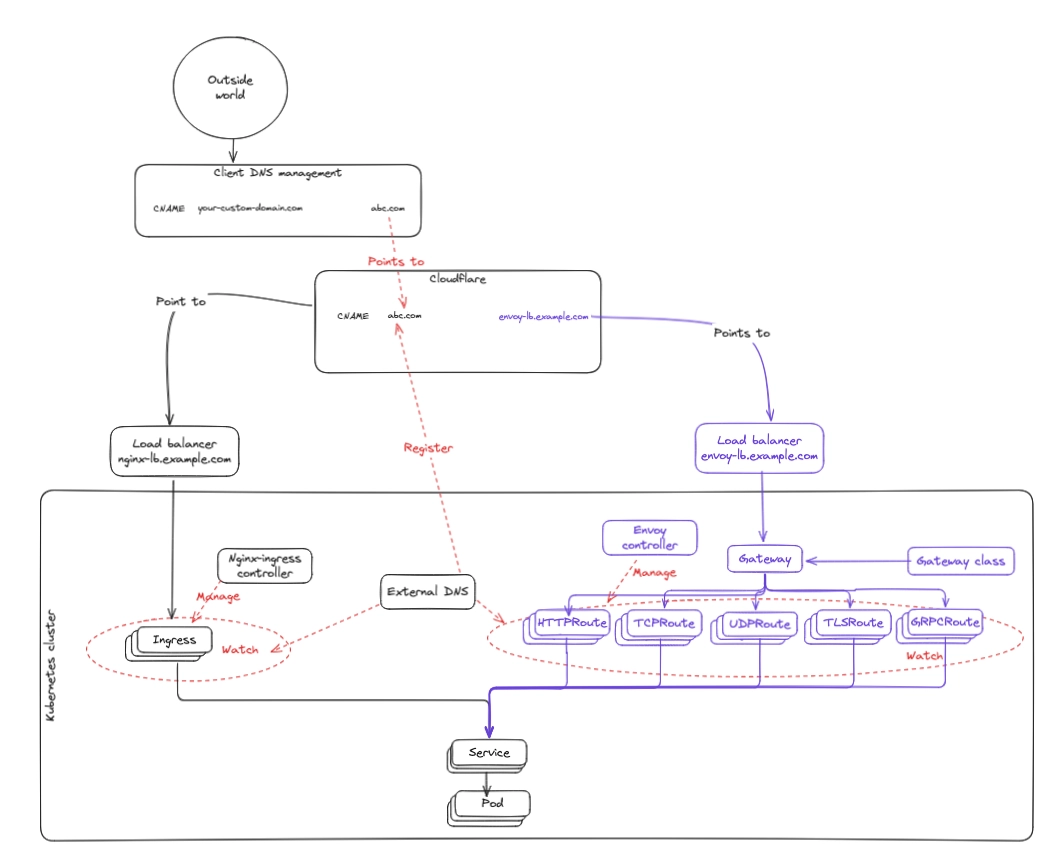

Since Qovery’s early days, we relied on Kubernetes Ingress resources and NGINX Ingress Controller to route traffic from the public internet to services running in customer clusters.

In late 2025 and early 2026, Kubernetes announced that the Ingress NGINX project would no longer be maintained starting March 2026 (see the project's announcement and statement). No maintenance means no security fixes and no evolution.

We could have treated this as “find another ingress controller and keep going.” Instead, we decided to use this deadline to finish a shift we already wanted to make: adopting the Kubernetes Gateway API.

Why Gateway API instead of sticking with Ingress

Ingress has served the Kubernetes ecosystem well, but it has limits. Gateway API is the community’s attempt to fix them without relying on controller-specific annotations.

The main limits of Ingress are:

- The Ingress API is effectively “feature complete” and will not be extended further.

- Ingress implementations depend heavily on annotations.

- Annotation-based configuration is hard to standardize across providers and controllers.

- The API surface is limited for modern traffic management needs.

If you want a deeper overview of the rationale, the Gateway API maintainers have an excellent guide: Migrating from Ingress to Gateway API.
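To make the difference in shape concrete, here is a minimal Python sketch that turns the routing part of an Ingress manifest into HTTPRoute manifests. It is an illustration under stated assumptions, not the tooling Qovery uses: the function name, the `gateway_name` parameter, and the choice to treat `ImplementationSpecific` paths as prefix matches are all hypothetical. It also assumes backends reference a numeric service port, and it does not translate controller-specific annotations, which is exactly the part that needs case-by-case review.

```python
from typing import Any

# Ingress pathType -> HTTPRoute path match type.
# "ImplementationSpecific" has no exact equivalent; mapping it to a prefix
# match is an assumption made for this sketch.
PATH_TYPE_MAP = {
    "Prefix": "PathPrefix",
    "Exact": "Exact",
    "ImplementationSpecific": "PathPrefix",
}


def ingress_to_httproutes(ingress: dict[str, Any], gateway_name: str) -> list[dict[str, Any]]:
    """Build one HTTPRoute manifest (as a dict) per rule of an Ingress manifest."""
    meta = ingress["metadata"]
    routes = []
    for index, rule in enumerate(ingress["spec"].get("rules", [])):
        route_rules = []
        for path in rule.get("http", {}).get("paths", []):
            service = path["backend"]["service"]
            path_type = path.get("pathType", "ImplementationSpecific")
            route_rules.append({
                "matches": [{
                    "path": {
                        "type": PATH_TYPE_MAP[path_type],
                        "value": path.get("path", "/"),
                    }
                }],
                # Assumes a numeric port; named ports would need a Service lookup.
                "backendRefs": [{
                    "name": service["name"],
                    "port": service["port"]["number"],
                }],
            })
        route = {
            "apiVersion": "gateway.networking.k8s.io/v1",
            "kind": "HTTPRoute",
            "metadata": {
                "name": f"{meta['name']}-{index}",
                "namespace": meta.get("namespace", "default"),
            },
            "spec": {
                # The route attaches to a Gateway instead of an ingress class.
                "parentRefs": [{"name": gateway_name}],
                "rules": route_rules,
            },
        }
        if "host" in rule:
            route["spec"]["hostnames"] = [rule["host"]]
        routes.append(route)
    return routes
```

The structural part of the routing maps over fairly mechanically. What does not map is anything expressed through annotations, which is where the two models diverge.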

Why we picked Envoy Gateway

We evaluated several Gateway API implementations. Envoy Gateway was the best fit for our constraints and what we want to support long term.

General reasons

- Gateway API-first: built to support Kubernetes Gateway API (GA), with more expressive routing and cleaner separation of roles than Ingress.

- Open source: part of the broader Envoy ecosystem.

- CNCF graduated: strong governance and long-term viability.

- Actively maintained.

- Performance: strong throughput and latency characteristics, especially under high concurrency.

- Cloud-native design: clear separation of control plane and data plane, lightweight, and well suited to multi-tenant environments.

Qovery-specific reasons

- Provider-agnostic: consistent configuration across providers, with fewer implementation-specific hacks.

- Extensibility and observability: Envoy is strong on telemetry and deep customization.

- Standardization and portability: the Gateway API reduces annotation sprawl and improves manifest portability.

- Unlocks traffic management features (over time), such as:

  - Canary and blue-green rollouts.

  - Weighted traffic shifting.

  - Traffic mirroring.

  - Traffic replay.
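As an illustration of weighted traffic shifting, the sketch below builds an HTTPRoute manifest that splits traffic between two backends using the `weight` field of `backendRefs`, which is part of the Gateway API HTTPRoute spec. The helper name, its parameters, and the service names in the usage note are hypothetical, and this is not a description of how Qovery will expose the feature.

```python
from typing import Any


def weighted_httproute(
    name: str,
    gateway_name: str,
    hostname: str,
    stable_service: str,
    canary_service: str,
    port: int,
    canary_percent: int,
) -> dict[str, Any]:
    """Return an HTTPRoute manifest sending canary_percent of traffic to the canary."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name},
        "spec": {
            "parentRefs": [{"name": gateway_name}],
            "hostnames": [hostname],
            "rules": [{
                # Weights are relative: each backend receives
                # weight / sum(weights) of the requests for this rule.
                "backendRefs": [
                    {"name": stable_service, "port": port, "weight": 100 - canary_percent},
                    {"name": canary_service, "port": port, "weight": canary_percent},
                ],
            }],
        },
    }
```

For example, `weighted_httproute("web", "shared-gateway", "app.example.com", "web-v1", "web-v2", 8080, 10)` describes a route that sends roughly 10% of requests to `web-v2`. With Ingress, the same behavior typically depends on controller-specific annotations.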

💡 If you run on Qovery, you will see these phases roll out progressively. If you do not, the rollout approach may still be useful if you need to swap your ingress layer without taking downtime.

Qovery’s migration goal (and constraints)

The goal is concrete: migrate all customer clusters (300+) from Ingress + NGINX to Gateway API + Envoy by the end of March 2026.

The constraints matter more than the architecture diagram:

- No downtime.

- No surprises. Customers should be able to validate behavior before the switch.

- Backwards compatibility during the transition. We cannot flip everything in one shot.

- Operational clarity. We need to see what is happening (logs, metrics, and failure modes) while two stacks coexist.

To get there, our work fell into three buckets:

- Selecting the best Gateway API-compatible replacement for NGINX Ingress Controller.

- Making the Qovery stack compatible with Envoy + Gateway API while keeping backwards compatibility during rollout.

- Designing a step-by-step migration path customers can follow safely.

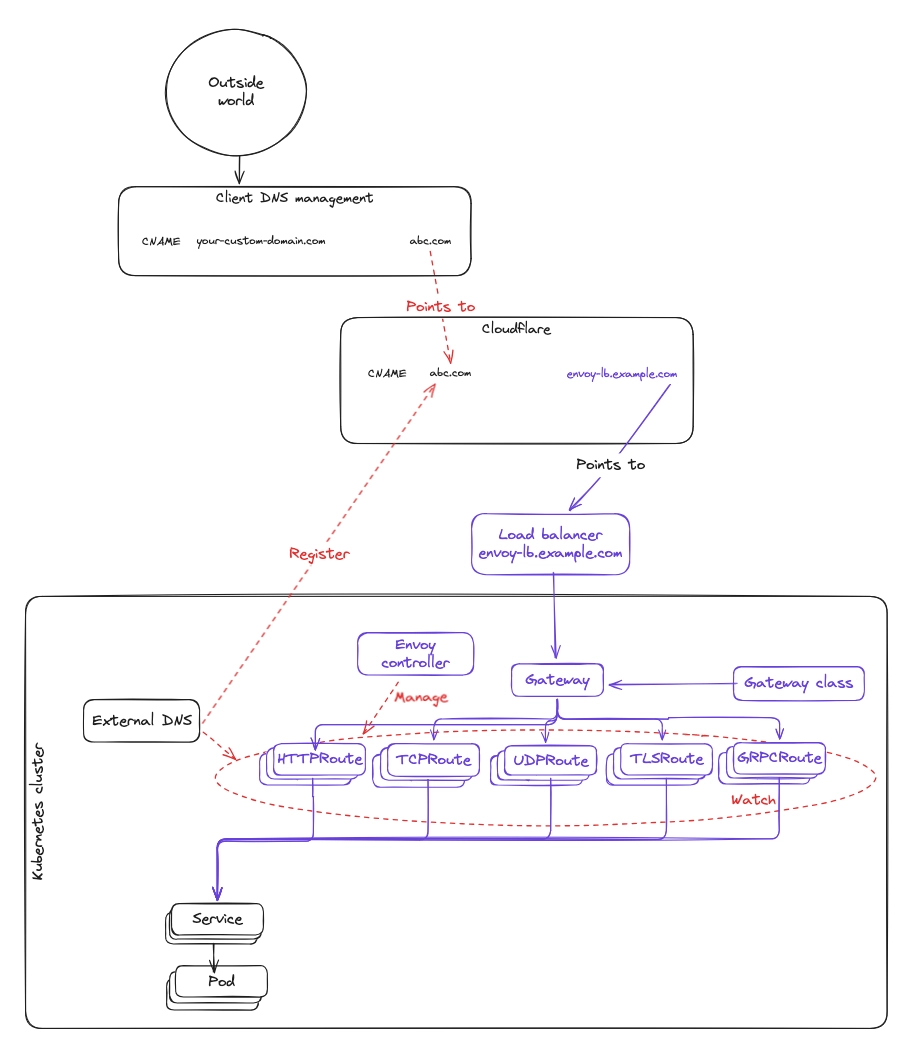

Target architecture

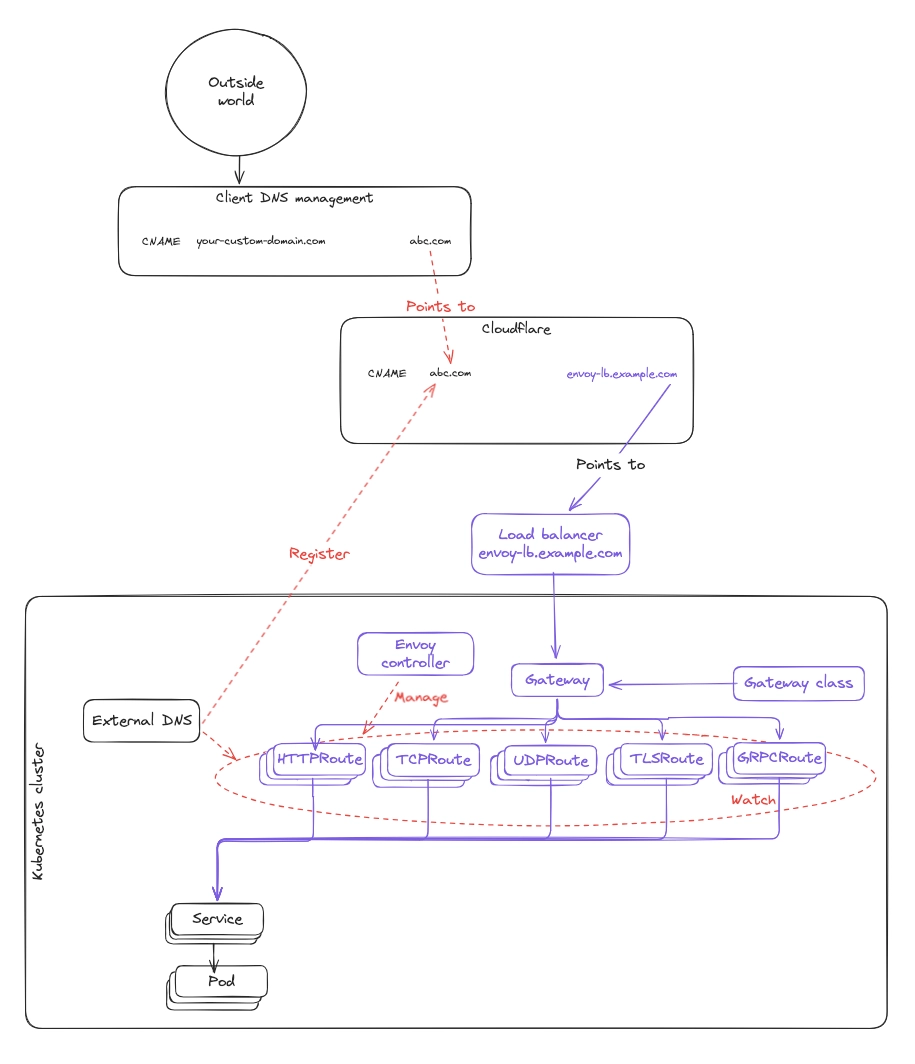

Here is the end state we are aiming for:

Qovery’s rollout strategy (4 phases)

Changing the edge routing layer changes how every application is reached. We are doing it progressively so problems show up early, when rollback is still easy.

The rollout is split into four phases:

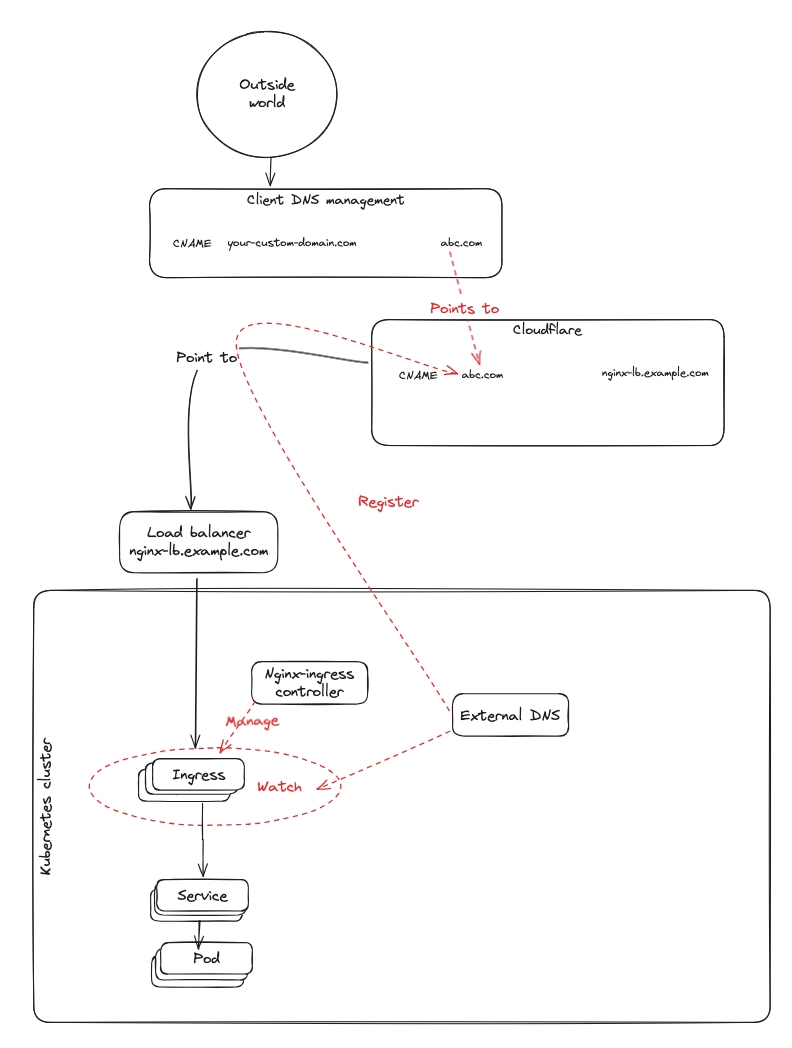

Phase 0: NGINX only (current default)

This is the starting point: NGINX Ingress Controller is installed and routing is configured through Ingress resources.

Phase 1: Deploy Gateway API + Envoy next to NGINX (shadow mode)

In this phase, we deploy the new stack alongside NGINX.

It is installed and configured, but it does not serve production traffic by default.

Everything new appears in purple in the diagram below.

What this introduces

- Envoy Gateway and Gateway API infrastructure.

- Services are also registered into the Gateway API stack.

What this changes for customers

- Services become accessible through a temporary CNAME for safe testing.

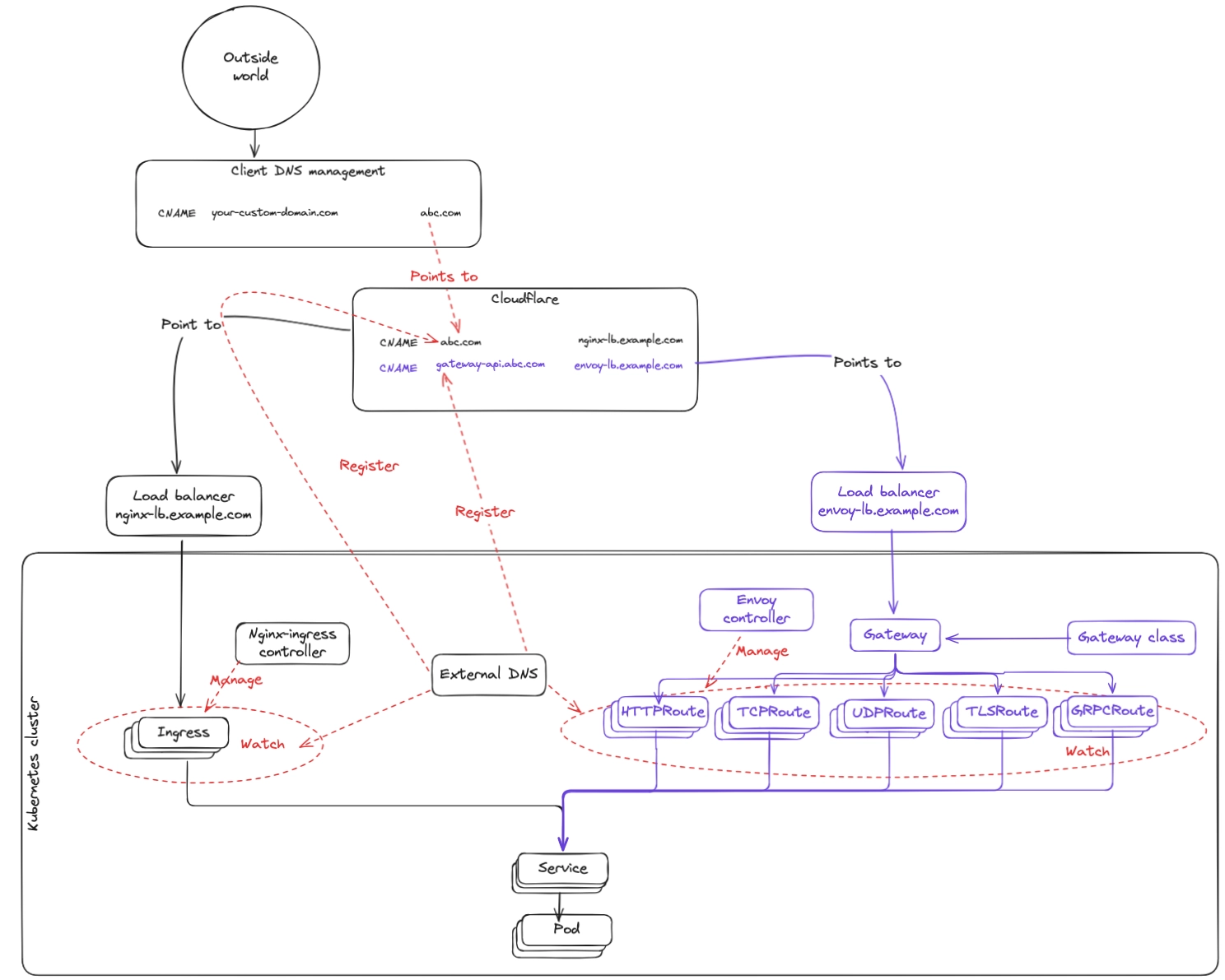
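One way to use the temporary CNAME is to request the same path through both entry points and compare the results. The sketch below is a minimal example using only the Python standard library. The function names and the list of compared headers are assumptions chosen for illustration, and the hostnames in the usage note are placeholders, not real Qovery domains.

```python
import urllib.error
import urllib.request
from typing import Any

# Headers compared between the two stacks. This list is an assumption for
# the sketch; extend it with whatever your application relies on.
COMPARED_HEADERS = ("content-type", "cache-control")


def fetch_summary(url: str, timeout: float = 10.0) -> dict[str, Any]:
    """Fetch url and return its status code and the compared headers.

    urlopen follows redirects, so the summary describes the final response.
    """
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            status, headers = response.status, response.headers
    except urllib.error.HTTPError as error:
        # 4xx/5xx responses are still useful data points for the comparison.
        status, headers = error.code, error.headers
    return {
        "status": status,
        "headers": {name: headers.get(name) for name in COMPARED_HEADERS},
    }


def diff_summaries(current: dict[str, Any], candidate: dict[str, Any]) -> list[str]:
    """Return human-readable differences between two response summaries."""
    differences = []
    if current["status"] != candidate["status"]:
        differences.append(f"status: {current['status']} != {candidate['status']}")
    names = sorted(set(current["headers"]) | set(candidate["headers"]))
    for name in names:
        before = current["headers"].get(name)
        after = candidate["headers"].get(name)
        if before != after:
            differences.append(f"{name}: {before!r} != {after!r}")
    return differences
```

A typical check would call `diff_summaries(fetch_summary("https://app.example.com/health"), fetch_summary("https://app-gateway.example.com/health"))`, where the first placeholder hostname goes through NGINX and the second is the temporary CNAME. An empty list means the two stacks agree on the fields compared, which is a starting point rather than proof of identical behavior.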

Phase 2: Gateway API + Envoy becomes the default routing path

This is the real switchover. Envoy Gateway becomes the default entry point and requests are served by Envoy.

The main CNAME is updated to point to the Load Balancer managed by the Gateway API stack.

We intentionally keep NGINX around during this phase because DNS caching means the transition can take 15 to 20 minutes (and sometimes longer depending on client configuration).

What this introduces

- Main CNAME updated to point to the Envoy + Gateway API Load Balancer.

What this changes for customers

- All domains (Qovery-provided domains and custom domains) are served through Gateway API + Envoy.
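Because of DNS caching, different clients can see different answers for the same domain during this phase. The sketch below shows one hedged way to check what a given machine currently resolves: it compares the addresses returned for a domain with the addresses of each load balancer. The function names and the three-state result are hypothetical, it only covers IPv4, and it reflects the local resolver's view at one point in time.

```python
import socket


def resolve_ipv4(hostname: str) -> set[str]:
    """Return the IPv4 addresses the local resolver currently gives for hostname."""
    _, _, addresses = socket.gethostbyname_ex(hostname)
    return set(addresses)


def cutover_state(domain_ips: set[str], nginx_lb_ips: set[str], envoy_lb_ips: set[str]) -> str:
    """Classify which load balancer a domain currently resolves to."""
    if domain_ips and domain_ips <= envoy_lb_ips:
        return "envoy"
    if domain_ips and domain_ips <= nginx_lb_ips:
        return "nginx"
    # Empty answers, a mix of both stacks, or addresses matching neither.
    return "mixed-or-unknown"
```

In practice you would pass `resolve_ipv4(...)` results for your domain and for both load balancer hostnames. A client that still gets `"nginx"` after the CNAME update is served from a cached record, which is why the NGINX stack stays in place until Phase 3.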

Phase 3: Remove the NGINX stack

Once we have confidence that traffic is fully served by Envoy, we remove the NGINX stack.

What this introduces

- NGINX components are removed from the cluster.

What this changes for customers

- No NGINX Load Balancer.

- No NGINX-specific configuration paths.

Advanced settings recap (NGINX → Envoy)

For most workloads, the migration is straightforward. But a few NGINX configuration knobs, exposed as Qovery advanced settings, behave differently under Envoy.

Sometimes there is a direct equivalent.

Sometimes there is not, because Envoy and NGINX solve the problem differently.

The tables in this article highlight the main differences, so you can review them before and during the rollout.

Our customers' migration plan

We have prepared a migration plan for our customers. It asks you to:

- Test how Gateway API behaves with your services using the Phase 1 temporary CNAME.

- Review service and cluster advanced settings in light of the differences above.

- Retrieve Envoy logs when debugging routing behavior.

- Monitor the dual-stack period (NGINX + Envoy) to validate the switch.
