Changelog

NGINX Ingress Controller end of maintenance - Need your action

With the NGINX Ingress Controller reaching end of maintenance in March 2026, Qovery is migrating from the NGINX Ingress Controller (Kubernetes Ingress) to Envoy Gateway (Kubernetes Gateway API).

This page is a customer-facing migration guide. It explains what will change, when it will change, and what we need you to do to ensure your services keep working as expected.

If you want the full technical background, read: Migration from Nginx to Envoy Gateway: behind the scene.

TL;DR

- Qovery is rolling out Envoy Gateway (Gateway API) on all clusters.

- During the transition, clusters will run a dual stack (NGINX + Envoy) to enable a no-downtime migration.

- Action required: once the dual stack is enabled on your cluster, redeploy all exposed services so their routing configuration is generated for Gateway API.

- You can then test each service using its new dedicated service link and validate the traffic in the Envoy logs.

- Target: Gateway API becomes the default by the end of March 2026, and NGINX will be removed shortly after.

⚠️ ⚠️ If you have Helm services that rely on Kubernetes Ingress resources, you must migrate them to Gateway API resources (such as HTTPRoute). Ingress resources will no longer be supported once Gateway API becomes the default stack on Qovery.
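
To illustrate what such a migration involves, here is a minimal Python sketch that translates a rendered networking.k8s.io/v1 Ingress manifest (loaded as a dict) into Gateway API HTTPRoute manifests. It is not Qovery tooling: the function name, the example Ingress, and the Gateway name and namespace ("my-gateway", "my-gateway-namespace") are hypothetical placeholders. It makes simplifying assumptions: one HTTPRoute per Ingress rule, service backends with numeric ports only, and ImplementationSpecific paths treated as prefixes. NGINX annotations, TLS settings and the default backend are not translated.

```python
import json
from typing import Any

# Ingress pathType -> HTTPRoute path match type.
# ImplementationSpecific has no portable meaning; mapping it to PathPrefix is an assumption.
PATH_TYPE_MAP = {
    "Prefix": "PathPrefix",
    "Exact": "Exact",
    "ImplementationSpecific": "PathPrefix",
}


def ingress_to_httproutes(
    ingress: dict[str, Any], gateway_name: str, gateway_namespace: str
) -> list[dict[str, Any]]:
    """Build one HTTPRoute per Ingress rule from a networking.k8s.io/v1 Ingress dict.

    Only service backends are handled. NGINX annotations, TLS settings and
    spec.defaultBackend are NOT translated.
    """
    meta = ingress["metadata"]
    routes = []
    for index, rule in enumerate(ingress["spec"].get("rules", [])):
        route_rules = []
        for path in rule.get("http", {}).get("paths", []):
            service = path["backend"]["service"]
            port = service["port"].get("number")
            if port is None:
                # HTTPRoute backendRefs need a numeric port; named ports must be resolved first.
                raise ValueError(f"named port on service {service['name']!r} is not supported")
            route_rules.append({
                "matches": [{"path": {
                    "type": PATH_TYPE_MAP[path.get("pathType", "Prefix")],
                    "value": path.get("path", "/"),
                }}],
                "backendRefs": [{"name": service["name"], "port": port}],
            })
        spec: dict[str, Any] = {
            # Attach the route to the Gateway that should serve it.
            "parentRefs": [{"name": gateway_name, "namespace": gateway_namespace}],
            "rules": route_rules,
        }
        if "host" in rule:
            spec["hostnames"] = [rule["host"]]
        routes.append({
            "apiVersion": "gateway.networking.k8s.io/v1",
            "kind": "HTTPRoute",
            "metadata": {
                "name": f"{meta['name']}-{index}",
                "namespace": meta.get("namespace", "default"),
            },
            "spec": spec,
        })
    return routes


# Hypothetical example Ingress, in the shape a Helm chart might render it.
EXAMPLE_INGRESS = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "my-app", "namespace": "my-namespace"},
    "spec": {"rules": [{
        "host": "app.example.com",
        "http": {"paths": [{
            "path": "/",
            "pathType": "Prefix",
            "backend": {"service": {"name": "my-app", "port": {"number": 80}}},
        }]},
    }]},
}

if __name__ == "__main__":
    # "my-gateway" / "my-gateway-namespace" are placeholders, not Qovery's actual Gateway.
    routes = ingress_to_httproutes(EXAMPLE_INGRESS, "my-gateway", "my-gateway-namespace")
    print(json.dumps(routes, indent=2))
```

In a real chart you would template the HTTPRoute directly rather than convert at runtime, and the target Gateway's listeners must allow routes from your service's namespace. Any NGINX annotation your Ingress relies on needs a separate Gateway API or Envoy equivalent.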

Rollout plan

The goal is to complete the rollout by the end of March 2026, then decommission NGINX shortly after.

⚠️ ⚠️ Pay close attention to the steps that require action on your side.

How to test the new Gateway API stack on your clusters

1) Enable the dual stack (Gateway API) on your cluster

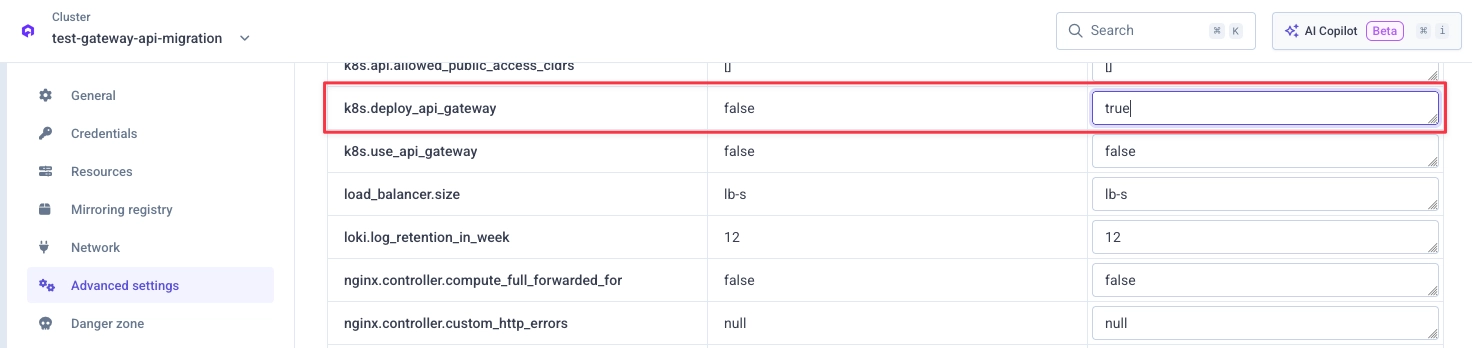

Go to your cluster advanced settings in the Qovery Console and set k8s.deploy_api_gateway to true.

Then redeploy your cluster.

2) Redeploy your exposed services

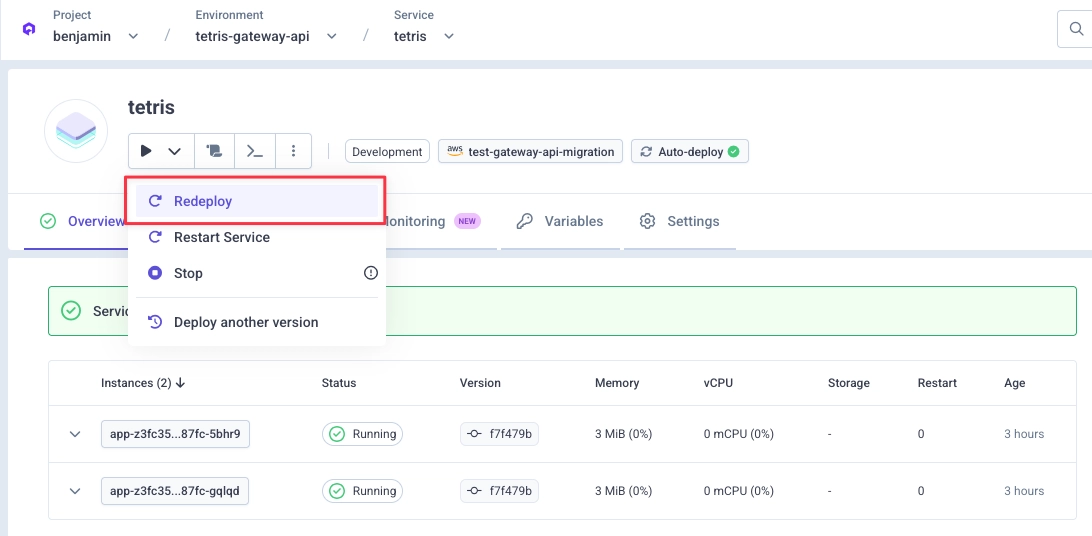

Once the Gateway API stack is deployed on your cluster, you must redeploy your existing exposed services.

Redeploying generates the Gateway API routing configuration for your service, so it can receive traffic coming from the new stack.

3) Test using the new dedicated service link

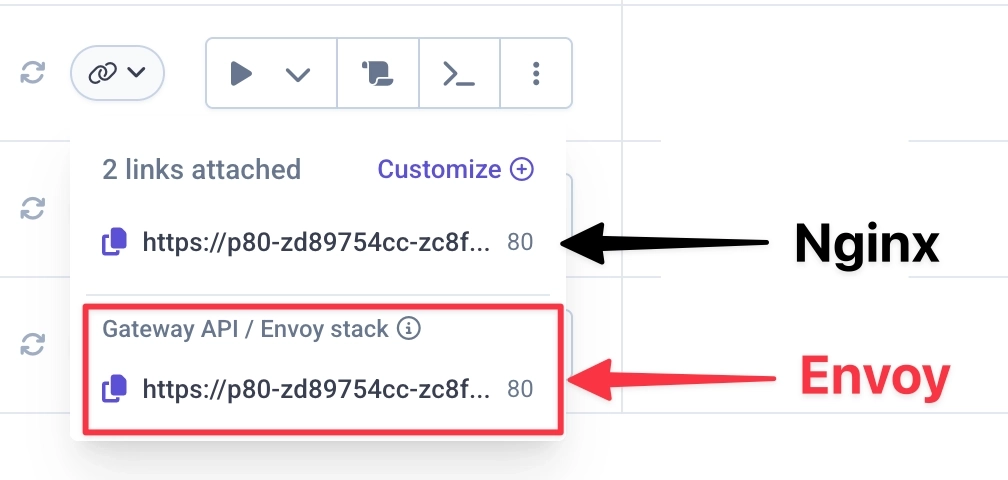

After redeploying, your service is ready to receive traffic from the new stack.

Start testing using the dedicated service link shown in the Qovery Console.
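
To send a handful of test requests to the new service link and see what comes back, a minimal standard-library Python sketch could look like this. The function name and URL are illustrative placeholders, not part of any Qovery tooling.

```python
import urllib.error
import urllib.request
from collections import Counter


def send_test_requests(url: str, count: int = 10, timeout: float = 5.0) -> Counter:
    """Send `count` GET requests to `url` and tally the results by HTTP status code.

    Connection failures (DNS errors, refused connections, timeouts) are tallied
    under the key "error" instead of stopping the run.
    """
    results: Counter = Counter()
    for _ in range(count):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                results[response.status] += 1
        except urllib.error.HTTPError as exc:
            # A 4xx/5xx still means a response came back; record its status code.
            results[exc.code] += 1
        except OSError:
            # URLError, socket timeouts and refused connections are all OSError subclasses.
            results["error"] += 1
    return results


if __name__ == "__main__":
    # Hypothetical URL: replace it with the dedicated service link shown in the Qovery Console.
    print(send_test_requests("https://your-service-link.example.com/", count=5))
```

Running the same script against your usual domain and against the new service link gives a quick side-by-side comparison of the two stacks before you look at the logs.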

4) Validate via Envoy logs

Send a few requests to your service using the new service link.

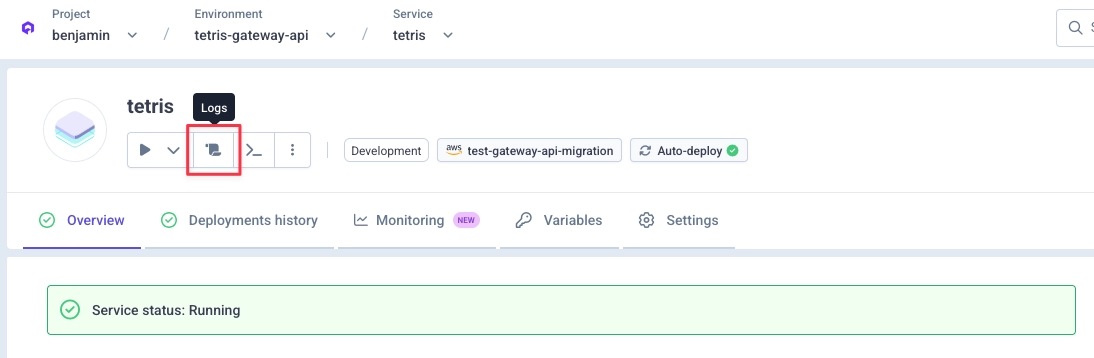

Then go to your service logs panel:

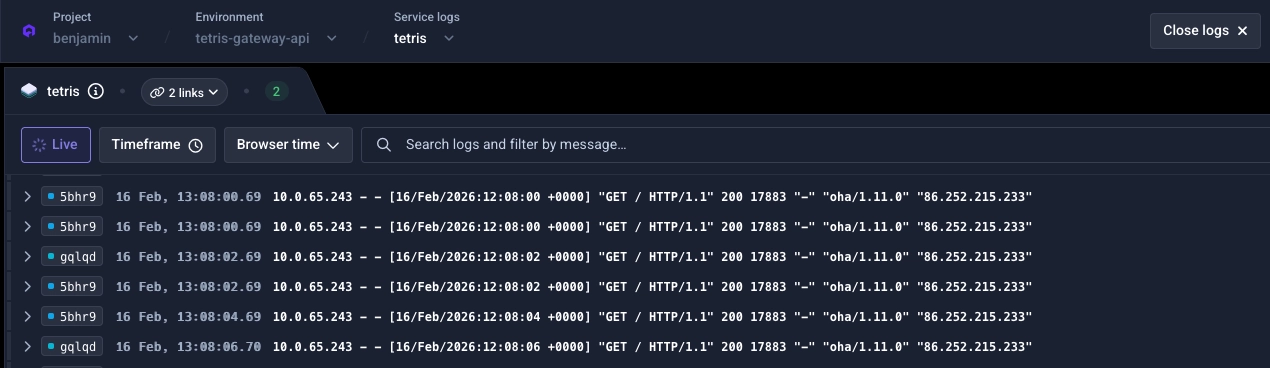

By default, you should only see your service logs:

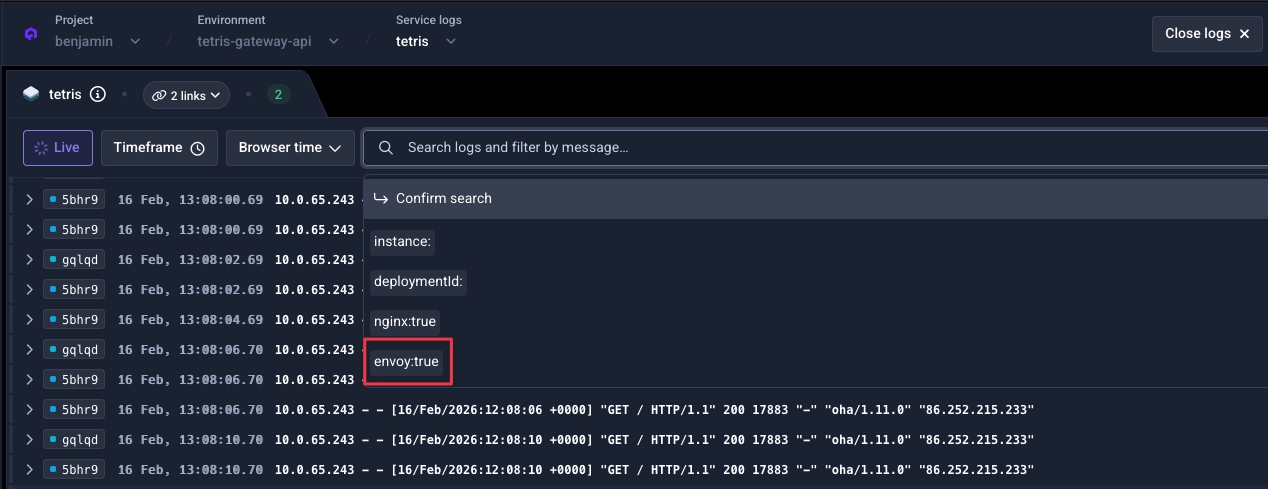

Select Envoy logs from the filters:

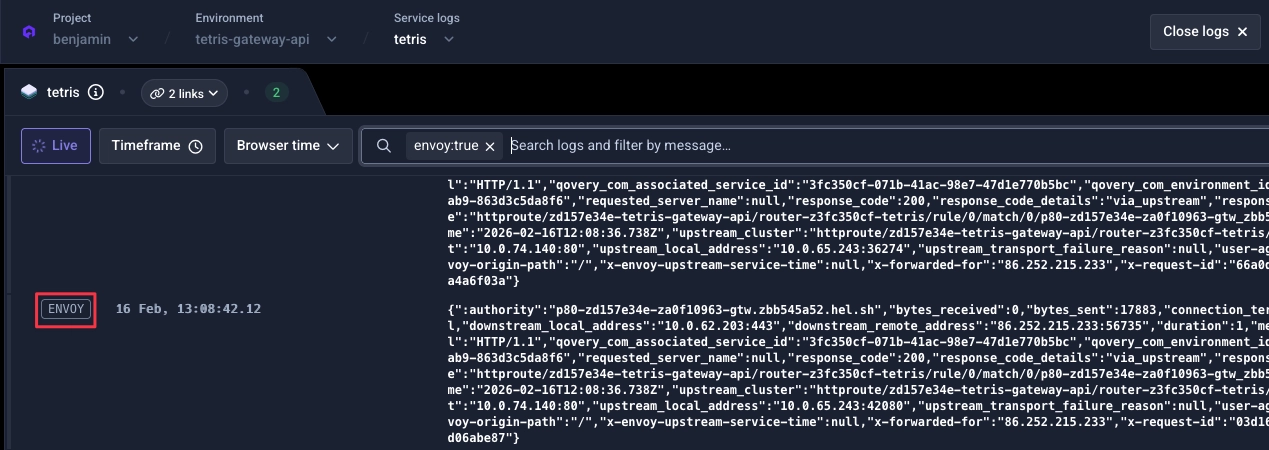

Validate the filter. You should now see the Envoy logs for your service.
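
If you copy the Envoy log lines into a file, a short script can confirm that your test requests actually went through Envoy. This Python sketch assumes each log line is a JSON object with ":authority" and "response_code" fields, modeled on Envoy's JSON access-log style. That format is an assumption, not something this guide specifies, so check the lines you actually see and adjust the field names. The sample lines are made up for illustration.

```python
import json
from collections import Counter
from typing import Iterable

# Assumed field names; adjust them to match the log lines you actually see.
HOST_FIELD = ":authority"
STATUS_FIELD = "response_code"


def count_requests_for_host(log_lines: Iterable[str], host: str) -> Counter:
    """Tally JSON access-log entries whose authority matches `host`, by status code."""
    statuses: Counter = Counter()
    for line in log_lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not JSON (startup messages, plain-text logs)
        if not isinstance(entry, dict):
            continue
        # The authority may include a port ("app.example.com:443"); compare the host part only.
        authority = str(entry.get(HOST_FIELD, "")).split(":")[0]
        if authority == host:
            statuses[entry.get(STATUS_FIELD)] += 1
    return statuses


# Hypothetical sample lines, for illustration only.
SAMPLE_LINES = [
    '{":authority": "app.example.com", "method": "GET", "response_code": 200}',
    '{":authority": "app.example.com:443", "method": "GET", "response_code": 404}',
    '{":authority": "other.example.com", "method": "GET", "response_code": 200}',
    "starting proxy...",
]

if __name__ == "__main__":
    print(count_requests_for_host(SAMPLE_LINES, "app.example.com"))
```

If the number of matching entries lines up with the number of test requests you sent, your traffic is flowing through Envoy.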

5) Bonus: Qovery Observability

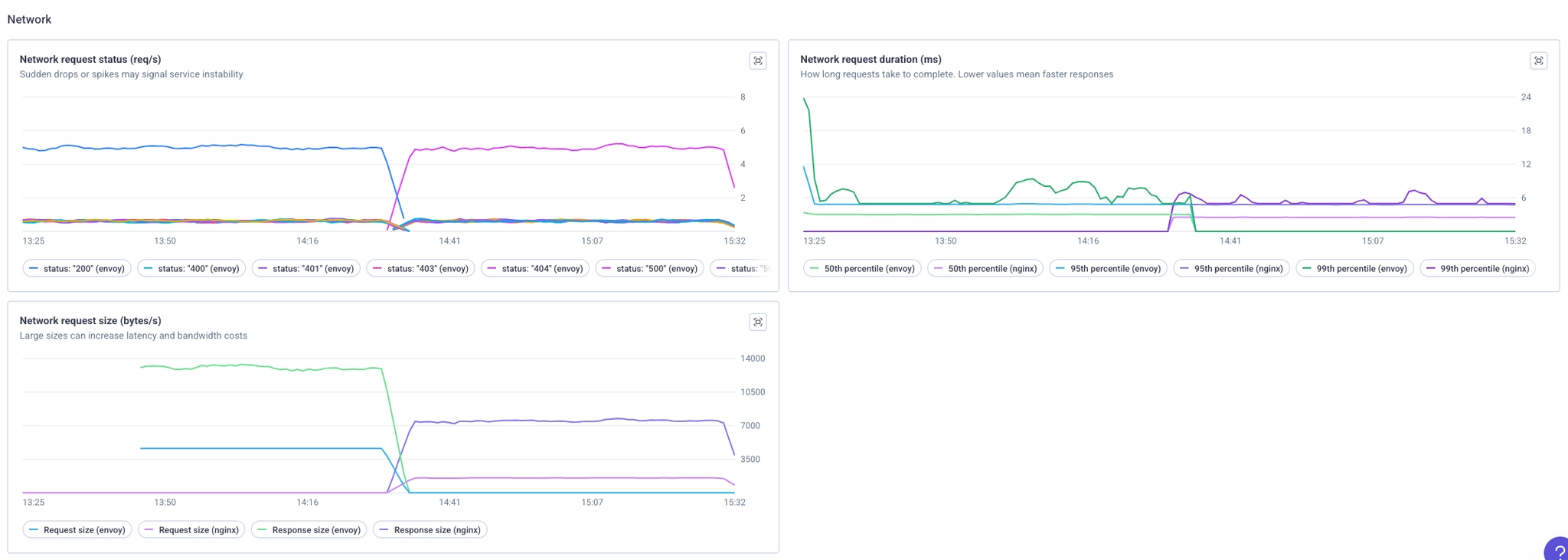

If you use the Qovery Observability stack, you can also see the requests reaching Envoy and compare them with the requests reaching NGINX.

Configuration: mapping from NGINX to Envoy

Where to configure

- Cluster-level settings: Cluster advanced settings (Envoy section)

- Service-level settings: Service advanced settings (prefixed with network.gateway_api)

In most cases, an NGINX setting has a direct Envoy equivalent. Some settings have no direct equivalent because the two proxies work differently.

The settings below behave differently, so review them and tune them if needed.

Cluster:

Service:

💡 If something is missing to replicate your legacy NGINX setup, let us know so we can help and add the missing parts.

If everything looks good, what's next?

Once you have validated that your workloads behave correctly on the new stack, you can switch your cluster to use Gateway API by default.

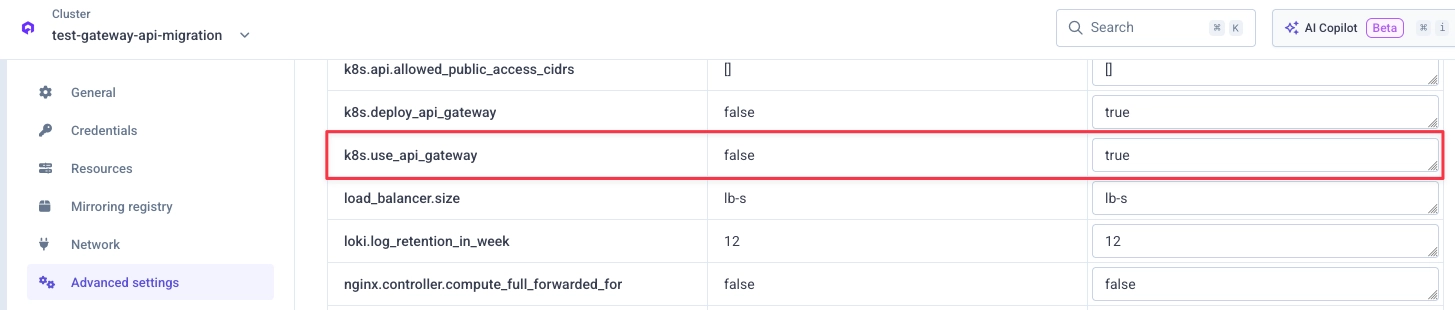

Go to your cluster advanced settings in the Qovery Console and set k8s.use_api_gateway to true.

Then redeploy your cluster.

FAQ

What do I need to do to make sure the new stack is compatible with my services?

Follow the steps in this guide. If anything is missing or you run into an issue, please contact us.

Will my services be impacted during the NGINX to Envoy transition?

No downtime is expected.

Both stacks will remain available on your clusters for a couple of weeks. DNS propagation usually completes in less than 30 minutes, but it can take longer depending on how HTTP clients are configured (for example, how long they cache DNS records). Any traffic that still reaches the old load balancer during the transition will continue to be handled by NGINX.
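
To see where your service domain currently points during the transition, you can check what your own machine's resolver returns. This minimal standard-library Python sketch uses a placeholder hostname. It only reflects your local resolver and its cache, not what every HTTP client sees.

```python
import socket


def resolve_addresses(hostname: str, port: int = 443) -> list[str]:
    """Return the sorted, de-duplicated IP addresses the local resolver gives for `hostname`."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    # Each entry's sockaddr (index 4) starts with the IP address, for IPv4 and IPv6 alike.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Hypothetical hostname: replace it with your service domain.
    print(resolve_addresses("your-service.example.com"))
```

Running it a few times during the switch shows when your resolver starts returning the new addresses. Clients that keep their own DNS cache may take longer, which is why NGINX stays in place as a fallback.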

Why is the NGINX controller still on my cluster?

NGINX and its artifacts will remain on your clusters for a couple of weeks as a safety net. It stays active during the switch to ensure a no-downtime migration to Gateway API.
