When Kubernetes Becomes the Bottleneck, and How to Fix It

Key points:

- The "Human Bottleneck" is the Real Scaling Killer: While technical issues like etcd latency or resource exhaustion exist, the most significant delays stem from "Ticket-Ops." When developers must wait for a platform team to approve YAML changes or update Helm charts, delivery speed slows down linearly as the organization grows.

- Technical Tuning Has Diminishing Returns: Optimizing the API server, implementing Horizontal Pod Autoscaling (HPA), and reducing container image sizes are necessary for cluster health. However, these fixes don't address the underlying workflow friction of developers lacking autonomy over their own infrastructure needs.

- Self-Service is the Strategic Solution: To truly eliminate bottlenecks, organizations must decouple developer intent from Kubernetes complexity. By using Qovery, a Kubernetes management platform, teams can automate manifest generation and environment provisioning, allowing developers to deploy within secure guardrails without manual intervention.

Most companies adopt Kubernetes to accelerate delivery. The initial migration succeeds, but as the organization grows, a deployment pipeline that used to take five minutes can quickly turn into a forty-five-minute wait.

The cluster itself is rarely the problem. While Kubernetes excels at container orchestration at scale, it does not handle the configuration sprawl, approval gates, and "ticket-ops" that turn a small platform team into a human bottleneck between developers and deployments.

Fixing this requires diagnosing where the true bottleneck lives. While some constraints are technical and require cluster tuning, the most critical blockers are workflow problems that no amount of API server optimization will solve.

Diagnosing Technical Bottlenecks

Before addressing organizational issues, it is worth confirming that the cluster itself is not the constraint. Technical bottlenecks in Kubernetes are real, measurable, and fixable with infrastructure changes.

1. Control Plane Latency

The Kubernetes API server and etcd form the coordination layer for every operation. When etcd latency rises, pod scheduling slows down, deployment queues grow, and kubectl commands hang. This is most commonly caused by insufficient disk I/O, too many controllers creating excessive load on the API server, or large ConfigMaps and Secrets that inflate snapshot sizes.

2. Resource Exhaustion

When a deployment encounters a "No node available" error, it halts entirely. This happens when no node in the cluster has enough CPU, memory, or GPU capacity to satisfy the request. Even with autoscaling, waiting 3-5 minutes for new instances to spin up compounds into massive delays when multiple teams deploy simultaneously. Additionally, namespaces with strict hard quotas will reject deployments outright, often requiring a platform engineer to manually adjust limits.

3. CI/CD Queue Jams

Complex Helm charts and large container images turn CI/CD pipelines into queuing systems. A Helm chart with dozens of subcharts and conditional templates can take minutes to render before deployment even begins. When multiple teams deploy concurrently, shared Jenkins or GitLab runners become overwhelmed, forcing pipelines to sit idle in a queue.

Diagnosing Workflow Bottlenecks

Technical tuning improves cluster performance. It does not fix the fact that a developer needs to wait for platform engineers to update cluster configurations or debug pods. The most impactful bottlenecks in Kubernetes environments are human bottlenecks, and they compound as organizations grow.

1. The Ticket Queue

When a developer finishes a feature that needs infrastructure change or network configuration update, they need this change to be routed through the platform team to validate and deploy change.

The platform team is handling requests from multiple teams at the same time, which makes the change potentially stuck in queue for days, until it is picked up and taken care of. This increases the time to delivery for the whole organization, as platformers become bottleneck.

2. Configuration Complexity

Kubernetes configuration is powerful and verbose, as a single service deployment involves many manifested and resource configurations. Each of these resources has fields that interact with others in non-obvious ways that only experienced operators would know.

Developers who work in this configuration daily internalize the relationships between these resources, most engineers don’t know the intricacies of the platform they deploy on. They depend on the platform team to debug issues, fix the YAML, and finish the deployment.

As clusters grow in complexity, the number of people who understand the full deployment environment shrinks. This knowledge concentration is the most dangerous bottleneck because it is invisible until someone goes on vacation or leaves the company.

How to Fix It

The bottlenecks above fall into two categories: infrastructure constraints that require cluster tuning, and workflow constraints that require a different operating model.

1. Technical Fixes

Horizontal Pod Autoscaling (HPA) addresses resource exhaustion by scaling workloads based on CPU, memory, or custom metrics. Instead of provisioning for peak load at all times, HPA adjusts replica counts dynamically, reducing the likelihood that a deployment fails because resources are unavailable.

Cluster Autoscaler complements this by adding or removing nodes based on pending pod demand, eliminating the manual provisioning step that creates delays.

Optimizing container build sizes reduces both CI/CD queue time and deployment speed. Multi-stage Docker builds that produce minimal runtime images cut image pull times from minutes to seconds. Optimized caching in the build pipeline avoids re-downloading unchanged dependencies.

These optimizations are incremental but cumulative, incremental improvements per deployment compound to house of CI/CD time per day.

These fixes matter. They make the cluster faster and more reliable. They do not change the fact that a developer still needs to file a ticket to mount a volume, deploy a database, or change network configurations.

2. The Strategic Fix: Self-Service Kubernetes

To remove the workflow bottleneck, you need a platform layer that lets developers express their intent (e.g., "add a database to staging") without writing or understanding complex Kubernetes manifests.

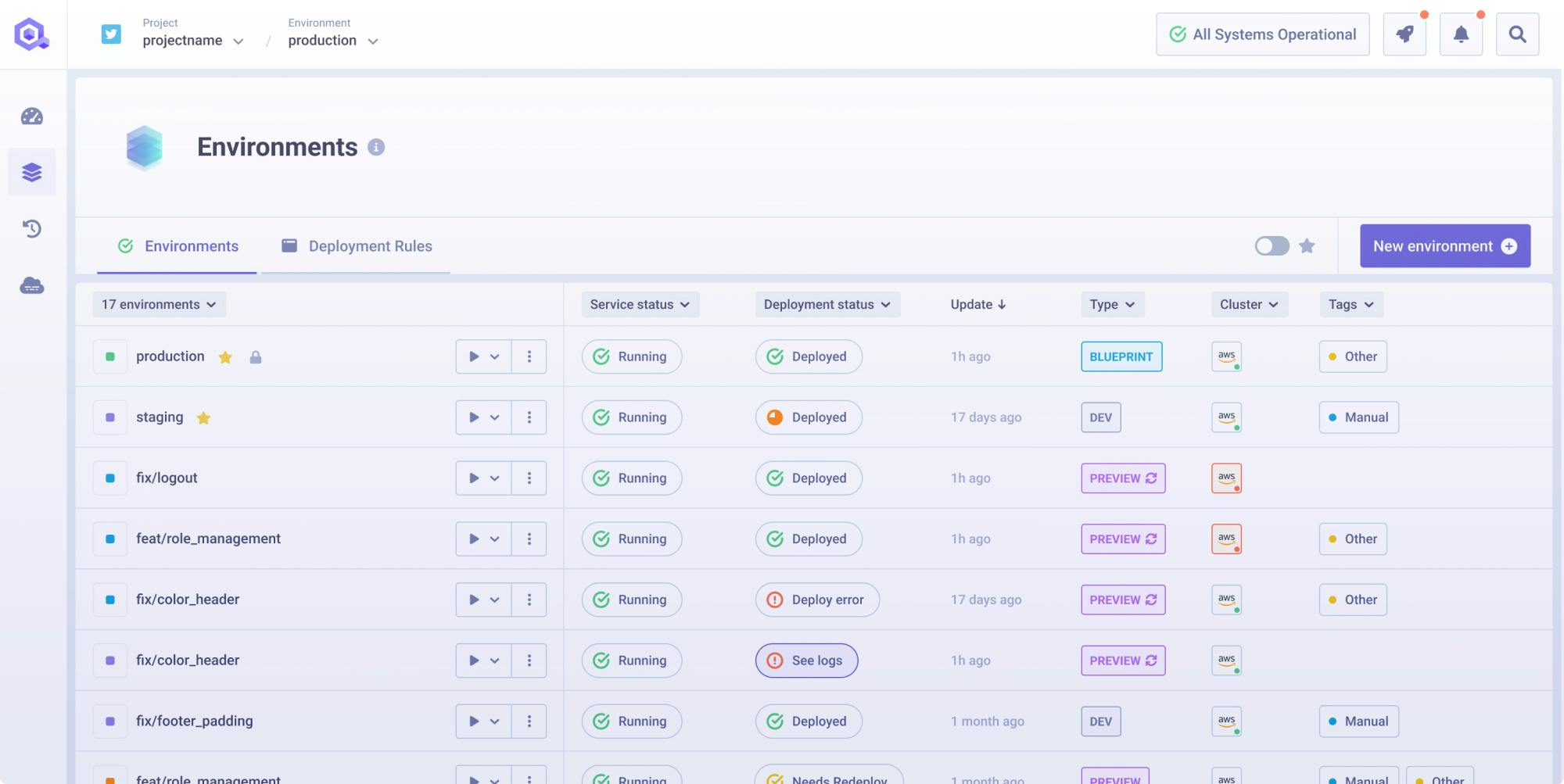

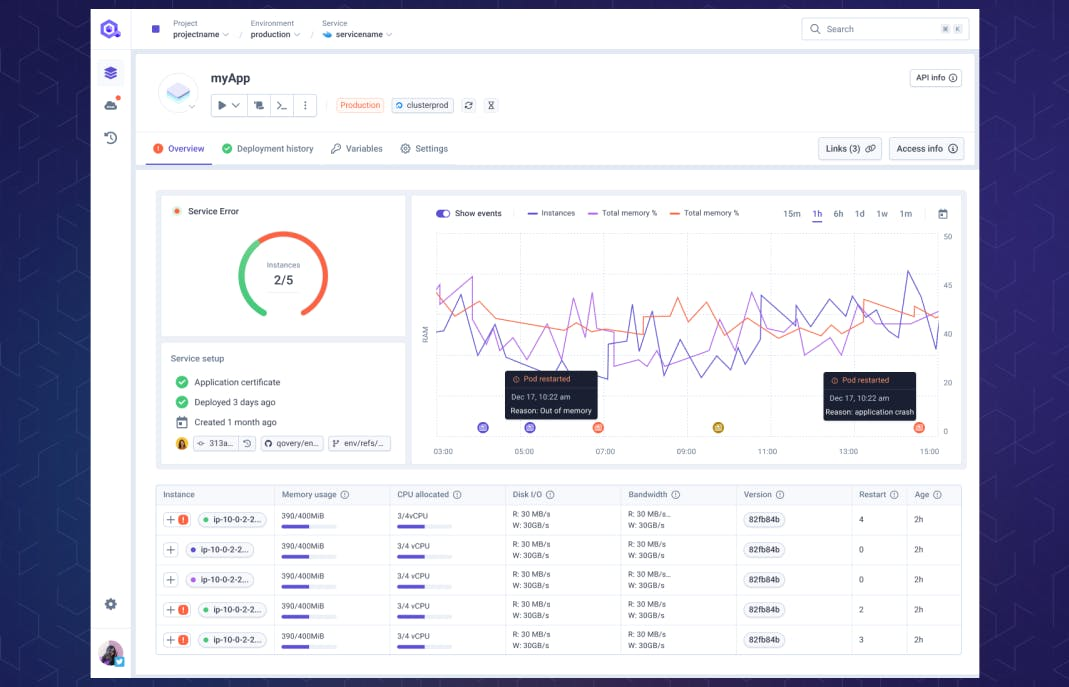

Qovery is a Kubernetes management platform that provides this layer. Developers deploy applications through Qovery's interface, and the platform generates the Deployment, Service, Ingress, and associated resources. The manifests are correct by construction because Qovery produces them from tested templates with sane defaults, not from hand-edited YAML. The dependency on the platform team for routine changes disappears, as the routine changes are handled by the platform itself.

The self-service model extends to infrastructure provisioning. Developers can provision databases, configure environment variables, and create ephemeral environments through Qovery without creating a ticket for the operators team.

The platform enforces guardrails set by the platform team: resource limits, allowed instance types, network policies, and access controls. Developers get autonomy within boundaries. Platform engineers define the boundaries once instead of executing each change manually.

Qovery also addresses the observability gap that drives "Why is my build failing?" messages to the platform team. The platform provides a clear UI for deployment logs, build status, and service health. Developers can see exactly where a deployment failed, whether it was a build error, a scheduling issue, or a health check timeout, without needing to run `kubectl describe pod` or parse events from a shared cluster.

This visibility eliminates a significant category of support requests that the platform team currently handles manually.

For organizations where Kubernetes management has become an operational burden rather than a competitive advantage, the shift from ticket-ops to self-service is the highest-leverage change available. It does not replace the need for technical tuning, but it addresses the bottleneck that technical tuning cannot reach.

Stop Tuning Clusters, Start Empowering Developers

A slow Kubernetes environment usually points to a slow workflow, not a struggling cluster. While your API server responds in milliseconds, developers often wait days for manual approvals or YAML fixes. That gap is where delivery speed dies.

Technical fixes like autoscaling and etcd tuning are necessary to keep the infrastructure responsive, but they cannot solve human bottlenecks. True scalability requires eliminating the manual coordination needed to translate a developer's intent into Kubernetes configuration.

Qovery removes this friction entirely. By automating manifest generation and infrastructure provisioning, Qovery turns Kubernetes into a self-service system. Developers deploy independently within secure, platform-defined guardrails, instantly eliminating the dependency on platform teams for routine operations.

The result is an operating model that transforms your deployment environment from an operational burden into a competitive advantage.

Suggested articles

.webp)

.svg)

.svg)

.svg)