Kubernetes Management: Best Practices & Tools for Managing Clusters and Optimizing Costs

Key Points:

- Effective Kubernetes management requires strict adherence to best practices in Security, Reliability, and Efficiency.

- Success depends on strategic cluster design, adopting GitOps for configuration control, enforcing governance via policies, and leveraging comprehensive autoscaling strategies.

- Managing costs is a core task achieved through right-sizing resources, effective use of autoscaling and cloud discounts, and implementing FinOps principles for visibility and accountability.

As Kubernetes adoption grows, so does the complexity teams must handle. Effective management, encompassing deployment, security, scaling, monitoring, and cloud cost optimization, requires significant expertise.

Without a focused strategy, teams face operational overhead, security risks, and escalating cloud bills. Kubernetes management platforms address these challenges by automating complex tasks and providing unified interfaces for cluster operations. These tools enable teams to leverage Kubernetes capabilities without the traditional operational burden.

In this article, we’ll dive into the challenges of Kubernetes management, best practices, and tools to manage clusters and optimize costs across an engineering organization.

Understanding Kubernetes Management

Beyond application deployment, Kubernetes management includes cluster provisioning, security policy enforcement, resource optimization, monitoring setup, and ongoing maintenance. Effective management ensures stability, security, and performance across all cluster operations.

Organizations must manage multiple concerns simultaneously. Security requires implementing RBAC policies, network segmentation, and secrets management. Reliability demands health checks, backup procedures, and disaster recovery plans. Efficiency needs resource allocation, autoscaling configuration, and namespace organization.

Operational excellence requires monitoring systems, logging infrastructure, version control practices, and regular upgrade procedures. Together, these responsibilities place a significant burden on the teams and organizations that keep production environments healthy.

Best Practices for Kubernetes Management

1. Security

RBAC Implementation

Role-Based Access Control (RBAC) limits users and service accounts to the operations they actually need. Teams should define roles that match organizational responsibilities and bind them to users or service accounts. Regular RBAC audits identify overly permissive policies, which should then be tightened by applying the principle of least privilege.
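As a minimal sketch of least privilege in practice, the Python below builds a namespaced Role that can read pods and manage Deployments (but not delete them), plus a RoleBinding that grants it to one service account. The names `payments`, `deployer`, and `ci-deployer` are hypothetical; the dicts mirror the `rbac.authorization.k8s.io/v1` manifests and are emitted as JSON, which `kubectl apply -f` accepts.

```python
import json


def least_privilege_role(namespace: str, name: str) -> dict:
    """Role that can read pods and manage Deployments, and nothing else, in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            # Core API group (""): read-only access to pods and their logs.
            {"apiGroups": [""], "resources": ["pods", "pods/log"],
             "verbs": ["get", "list", "watch"]},
            # Deployments live in the "apps" API group; no "delete" verb is granted.
            {"apiGroups": ["apps"], "resources": ["deployments"],
             "verbs": ["get", "list", "watch", "create", "update", "patch"]},
        ],
    }


def bind_to_service_account(namespace: str, role: str, service_account: str) -> dict:
    """RoleBinding granting the Role to a single service account in the same namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-binding", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": service_account,
                      "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role},
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    items = [least_privilege_role("payments", "deployer"),
             bind_to_service_account("payments", "deployer", "ci-deployer")]
    # A v1 List can be piped to `kubectl apply -f -`.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Using a namespaced Role rather than a ClusterRole keeps the blast radius of a leaked service account token confined to one namespace.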

Network Policies

Network policies control traffic between pods and external services. Implementing default-deny policies ensures only explicitly allowed communication occurs. This reduces attack surface and contains potential security breaches.
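A minimal sketch of the default-deny pattern, assuming the cluster runs a CNI plugin that enforces NetworkPolicy: the first policy selects every pod in a namespace and allows no ingress, and the second explicitly re-allows one flow. The namespace, the `app` labels, and the port are hypothetical. This covers ingress only; denying egress works the same way but then also requires explicit egress rules, including one for DNS.

```python
def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector matches all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules listed, so all ingress is denied
        },
    }


def allow_ingress(namespace: str, to_app: str, from_app: str, port: int) -> dict:
    """Explicitly allow TCP traffic from pods labelled app=from_app to app=to_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{to_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": to_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }
```

Because NetworkPolicies are additive, the allow rule opens exactly one path through the default deny without weakening it elsewhere.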

Secrets Management

Kubernetes Secrets store sensitive information such as passwords and API keys for applications to use at runtime. By default, Secret values are only base64-encoded, not encrypted, so anyone with read access to a Secret object can recover them. External secrets management tools like HashiCorp Vault or AWS Secrets Manager provide additional protection. Secrets should live in one of these dedicated systems and never be committed to version control.
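As a sketch of keeping plaintext out of the repository, the hypothetical helper below builds a Secret at deploy time from environment variables, assuming the CI system or a secrets manager injects those variables. It also shows why the `data` field offers no confidentiality on its own: the values are simply base64-encoded.

```python
import base64
import os


def secret_from_env(name: str, namespace: str, keys: list) -> dict:
    """Build an Opaque Secret whose values come from environment variables
    (assumed to be injected by CI or a secrets manager), never from the repo."""
    missing = [k for k in keys if k not in os.environ]
    if missing:
        raise KeyError(f"missing environment variables: {missing}")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # "data" requires base64 values. This is an encoding, not encryption:
        # anyone who can read the Secret object can decode it.
        "data": {k: base64.b64encode(os.environ[k].encode()).decode() for k in keys},
    }
```

Failing loudly on a missing variable avoids silently deploying an empty credential.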

Image Scanning

Container image scanning identifies known vulnerabilities before deployment. Tools like Trivy can scan images in a CI/CD pipeline and fail the build when serious issues are found. Automated scanning helps keep vulnerable containers from reaching production.
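Trivy can fail a pipeline by itself, for example with `trivy image --exit-code 1 --severity HIGH,CRITICAL <image>`. When a team wants custom gating logic instead, it can export a JSON report (`--format json --output report.json`) and inspect it. The sketch below assumes only a small subset of that report: a top-level `Results` list whose entries may carry `Vulnerabilities` with `VulnerabilityID` and `Severity` fields. The blocking severities are an example policy, not a recommendation.

```python
import json
import sys

BLOCKING = {"HIGH", "CRITICAL"}  # example policy; adjust to your risk tolerance


def blocking_findings(report: dict, blocking=BLOCKING) -> list:
    """Return the IDs of vulnerabilities whose severity should block the release."""
    found = []
    for result in report.get("Results", []):
        # "Vulnerabilities" may be missing or null when a target has no findings.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                found.append(vuln.get("VulnerabilityID", "unknown"))
    return found


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage in CI: python gate.py report.json  (non-zero exit fails the job)
    with open(sys.argv[1]) as fh:
        ids = blocking_findings(json.load(fh))
    if ids:
        print("blocking vulnerabilities:", ", ".join(sorted(set(ids))))
        sys.exit(1)
```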

2. Reliability

Liveness and Readiness Probes

Health checks let Kubernetes route traffic only to healthy pods. When a liveness probe fails, the kubelet restarts the container; when a readiness probe fails, the pod is removed from Service endpoints and stops receiving traffic until it is ready again.
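A minimal sketch of a container spec with separate probes, assuming an HTTP service that exposes hypothetical `/ready` and `/healthz` endpoints; the image name and timing values are illustrative, not tuned defaults.

```python
def web_container(image: str, port: int = 8080) -> dict:
    """Container spec with distinct readiness and liveness probes (endpoint paths are hypothetical)."""
    return {
        "name": "web",
        "image": image,
        "ports": [{"containerPort": port}],
        # Readiness: while this fails, the pod is removed from Service endpoints.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": port},
            "periodSeconds": 5,
            "failureThreshold": 3,
        },
        # Liveness: after failureThreshold consecutive failures, the kubelet restarts the container.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "initialDelaySeconds": 10,
            "periodSeconds": 10,
            "failureThreshold": 3,
        },
    }
```

A common design choice is to keep the liveness endpoint cheap and independent of downstream dependencies; otherwise an outage in a shared database can trigger restart loops across every pod that depends on it.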

Immutable Infrastructure

Treating infrastructure as immutable improves reliability and simplifies rollbacks. Teams deploy new versions rather than modifying existing resources. This approach reduces configuration drift and deployment failures.

Backup and Disaster Recovery

Regular backups of cluster state and persistent data enable recovery from failures. Disaster recovery plans that are tested regularly ensure business continuity when infrastructure or services fail, and over time they give operating teams confidence that recovery will work when it is needed.

3. Efficiency

Resource Requests and Limits

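Accurate resource specifications ensure efficient cluster utilization. The scheduler places pods based on their requests, so a request effectively reserves a minimum amount of CPU and memory on the node. Limits cap consumption: a container that exceeds its CPU limit is throttled, while one that exceeds its memory limit is OOM-killed. Monitoring actual usage is key to choosing values that match what the application really needs.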
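As a sketch, the hypothetical helper below builds a container `resources` block in millicores (`m`) and mebibytes (`Mi`) and rejects a request larger than its limit, which the API server would also refuse. Treating the CPU limit as optional reflects a choice some teams make to avoid throttling; it is an assumption here, not a universal rule.

```python
from typing import Optional


def resource_spec(cpu_req_m: int, mem_req_mi: int, mem_lim_mi: int,
                  cpu_lim_m: Optional[int] = None) -> dict:
    """Build a container `resources` block; CPU in millicores, memory in MiB."""
    # Kubernetes rejects a request that is larger than its limit.
    if mem_lim_mi < mem_req_mi or (cpu_lim_m is not None and cpu_lim_m < cpu_req_m):
        raise ValueError("requests must not exceed limits")
    spec = {
        # The scheduler only places the pod on a node with this much unreserved capacity.
        "requests": {"cpu": f"{cpu_req_m}m", "memory": f"{mem_req_mi}Mi"},
        # Exceeding the memory limit gets the container OOM-killed.
        "limits": {"memory": f"{mem_lim_mi}Mi"},
    }
    if cpu_lim_m is not None:
        # Exceeding a CPU limit throttles the container instead of killing it.
        spec["limits"]["cpu"] = f"{cpu_lim_m}m"
    return spec
```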

Namespaces

Namespaces provide logical separation between teams, applications, or environments. They enable resource quotas, network policies, and RBAC at the namespace level. A well-planned namespace design improves both organization and security by giving each team or application its own logical space to run in.
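A minimal sketch of a per-team namespace with a ResourceQuota; the `team` label and the quota values are hypothetical. One consequence worth knowing: once a quota covers `requests.cpu` or `requests.memory`, pods that do not declare those requests are rejected unless a LimitRange supplies defaults.

```python
def team_namespace(name: str, team: str) -> dict:
    """Namespace labelled with its owning team (label key is a hypothetical convention)."""
    return {"apiVersion": "v1", "kind": "Namespace",
            "metadata": {"name": name, "labels": {"team": team}}}


def team_quota(namespace: str, cpu: str, memory: str, max_pods: int) -> dict:
    """ResourceQuota capping the total requests and pod count in one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "team-quota", "namespace": namespace},
        "spec": {"hard": {
            # Caps on the sum of requests across all pods in the namespace.
            "requests.cpu": cpu,
            "requests.memory": memory,
            "pods": str(max_pods),
        }},
    }
```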

Autoscaling

Kubernetes offers several complementary autoscaling mechanisms, and each is worth using where it fits. Horizontal Pod Autoscaler (HPA) adjusts replica counts based on metrics like CPU or memory usage. Cluster Autoscaler adds or removes nodes based on pending pods and node utilization. Vertical Pod Autoscaler (VPA) adjusts resource requests automatically. All three play a role in managing application performance and cost effectively.
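As a sketch of the most common starting point, the helper below builds an `autoscaling/v2` HorizontalPodAutoscaler that targets average CPU utilization for a Deployment. It assumes resource metrics are available in the cluster (typically via metrics-server); the names and the 70% target are illustrative. Utilization is measured against the pods' CPU requests, so HPA only behaves sensibly when requests are set.

```python
def cpu_hpa(namespace: str, deployment: str, min_replicas: int, max_replicas: int,
            target_cpu_pct: int = 70) -> dict:
    """HPA keeping average CPU utilization (relative to requests) near the target."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment, "namespace": namespace},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {"name": "cpu",
                             "target": {"type": "Utilization",
                                        "averageUtilization": target_cpu_pct}},
            }],
        },
    }
```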

4. Operations

Monitoring and Logging

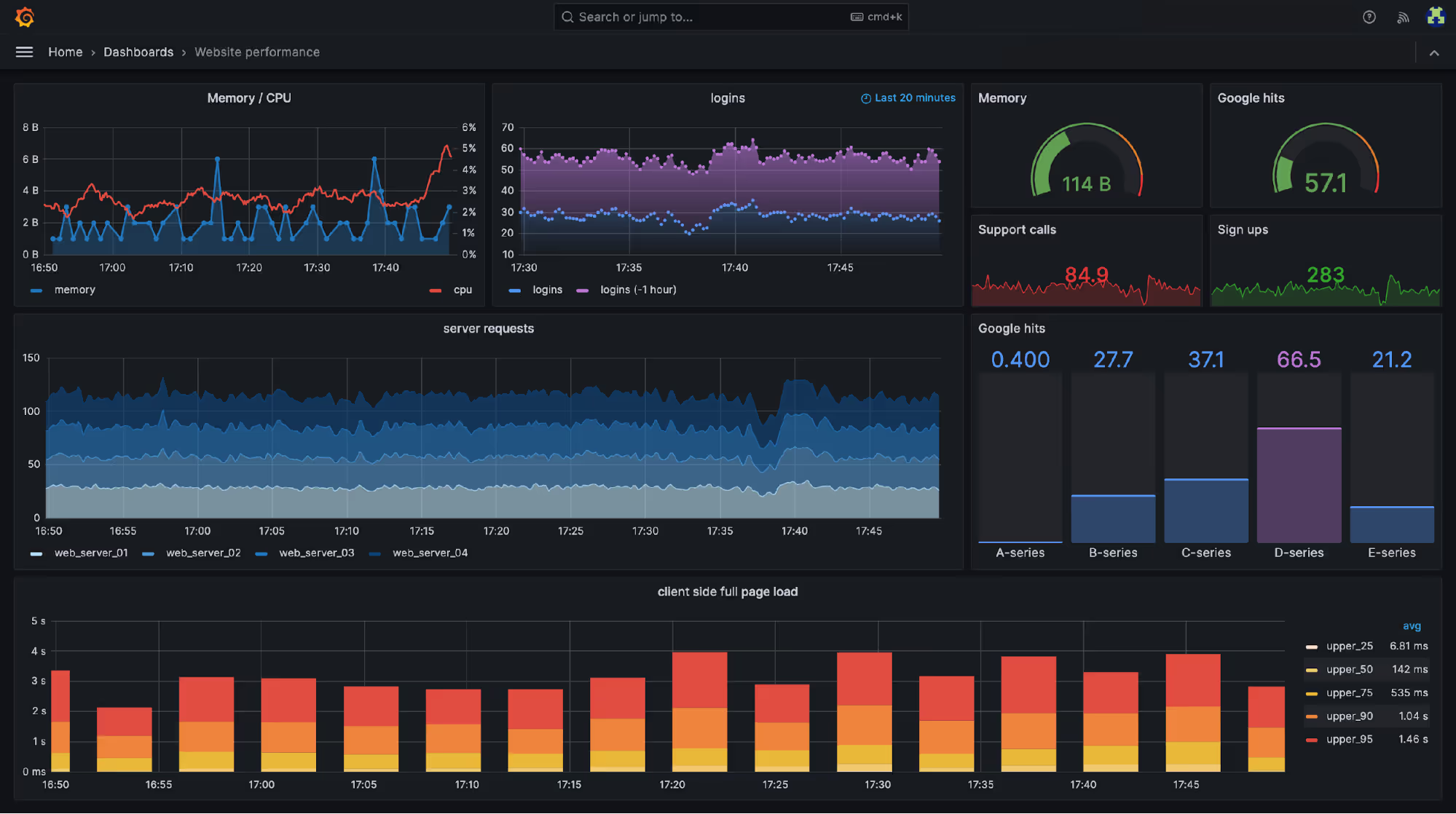

Comprehensive monitoring provides visibility into cluster and application health. Solutions like Prometheus collect metrics, while Grafana visualizes the data. Centralized logging through tools like the ELK Stack helps application engineers troubleshoot issues.

Version Control with GitOps

GitOps treats Git repositories as the source of truth for infrastructure configuration. Tools like Argo CD or Flux automatically sync cluster state with the repository content. This approach improves auditability and rollback capabilities.
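As a sketch of what "Git as the source of truth" looks like in Argo CD, the helper below builds an `Application` resource pointing at a path in a repository, with automated sync enabled. It assumes Argo CD is installed in the `argocd` namespace and deploys to the same cluster it runs in; the repository URL, path, and names are hypothetical.

```python
def argo_application(name: str, repo_url: str, path: str, dest_namespace: str,
                     revision: str = "main") -> dict:
    """Argo CD Application that continuously syncs `path` from `repo_url` into the cluster."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},  # assumes the default install namespace
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            # The in-cluster API server address, i.e. deploy to the cluster Argo CD runs in.
            "destination": {"server": "https://kubernetes.default.svc",
                            "namespace": dest_namespace},
            "syncPolicy": {"automated": {
                "prune": True,     # delete resources that were removed from Git
                "selfHeal": True,  # revert manual changes made directly in the cluster
            }},
        },
    }
```

With `selfHeal` enabled, a rollback becomes a `git revert`, and every change to the cluster leaves an auditable commit.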

Regular Upgrades

Kubernetes ships roughly three minor releases per year, and each minor version receives security and bug-fix patches for only about a year. Organizations should establish documented, tested upgrade procedures, since staying current reduces security risk and technical debt.
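Upgrade planning has one hard constraint worth encoding: control planes are upgraded one minor version at a time (kubeadm does not support skipping minor versions, and managed services generally follow the same rule). The hypothetical helper below lists the intermediate versions a cluster has to pass through; it ignores patch versions and assumes a forward upgrade within Kubernetes 1.x.

```python
def upgrade_path(current: str, target: str) -> list:
    """Minor versions to step through, one at a time, e.g. "1.27" -> "1.30"."""
    cur_major, cur_minor = (int(x) for x in current.split(".")[:2])
    tgt_major, tgt_minor = (int(x) for x in target.split(".")[:2])
    if cur_major != tgt_major or tgt_minor < cur_minor:
        raise ValueError("only forward upgrades within the same major version are supported")
    # Each hop is a separate upgrade that should be tested before the next one.
    return [f"{cur_major}.{m}" for m in range(cur_minor + 1, tgt_minor + 1)]
```

For example, `upgrade_path("1.27", "1.30")` returns `["1.28", "1.29", "1.30"]`: three separate upgrades, which is why falling several releases behind gets expensive.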

Strategic Considerations for Kubernetes Clusters

1. Cluster Design

Single vs. Multi-Cluster

Single clusters simplify management but create potential single points of failure, while multi-cluster architectures improve isolation, enable regional deployments, and support different environments. The choice depends on organizational requirements and operational capacity, as maintaining multiple clusters implies a bigger operational burden.

Multi-Tenancy Strategies

Soft multi-tenancy uses namespaces to separate teams or applications within clusters, while hard multi-tenancy provides stronger isolation through separate clusters. Organizations must balance isolation requirements against operational complexity.

2. Scaling Strategies

Horizontal Pod Autoscaler

HPA scales application replicas based on observed metrics. It responds to traffic changes automatically, maintaining performance while optimizing costs. Custom and external metrics, such as requests per second or queue depth, let it scale on signals that track load more closely than CPU alone.
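The core of the HPA decision is a documented ratio: desired replicas = ceil(current replicas × current metric value / target metric value), skipped when the ratio is within a tolerance of 1.0 (0.1 by default) and clamped to the configured bounds. The sketch below implements only that rule; the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule (ignores readiness, missing metrics, stabilization)."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so `desired_replicas(4, 90, 60)` returns 6.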

Cluster Autoscaler

Cluster Autoscaler adds nodes when pods cannot be scheduled due to insufficient resources, and removes underutilized nodes whose pods can run elsewhere to reduce costs. Its configuration requires balancing responsiveness against cost optimization.
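A simplified sketch of how Cluster Autoscaler picks scale-down candidates: a node qualifies when the sums of its pods' CPU and memory requests are both below a fraction of its allocatable capacity (the `--scale-down-utilization-threshold` flag, 0.5 by default). The real autoscaler additionally checks that the pods can be rescheduled elsewhere and respects PodDisruptionBudgets; the `Node` type here is a hypothetical simplification.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    alloc_cpu_m: int   # allocatable CPU, millicores
    alloc_mem_mi: int  # allocatable memory, MiB
    req_cpu_m: int     # sum of CPU requests of pods on the node
    req_mem_mi: int    # sum of memory requests of pods on the node


def scale_down_candidates(nodes, threshold: float = 0.5) -> list:
    """Names of nodes whose request-based utilization is below the threshold."""
    candidates = []
    for n in nodes:
        # Based on requests, not live usage; the busier of CPU and memory decides.
        utilization = max(n.req_cpu_m / n.alloc_cpu_m, n.req_mem_mi / n.alloc_mem_mi)
        if utilization < threshold:
            candidates.append(n.name)
    return candidates
```

Because utilization is computed from requests, inflated requests keep nodes alive even when they sit idle, which ties node-level cost directly to pod right-sizing.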

Vertical Pod Autoscaler

VPA adjusts resource requests based on observed usage patterns, right-sizing applications automatically. In its classic update modes, it applies new requests by evicting and recreating pods, so it works best for workloads that tolerate restarts and have fairly predictable resource requirements.
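VPA is an add-on with its own custom resource, so the sketch below assumes its components and CRD are installed. Starting in `"Off"` mode is a low-risk way to collect recommendations (visible in the object's status) before letting VPA change anything. The VPA documentation also warns against combining it with an HPA that scales on the same CPU or memory metrics. The names are hypothetical, and the accepted modes are limited to the four classic ones.

```python
CLASSIC_MODES = {"Off", "Initial", "Recreate", "Auto"}  # modes this sketch accepts


def vpa(namespace: str, deployment: str, mode: str = "Off") -> dict:
    """VerticalPodAutoscaler for a Deployment; "Off" only computes recommendations."""
    if mode not in CLASSIC_MODES:
        raise ValueError(f"unsupported updateMode: {mode}")
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{deployment}-vpa", "namespace": namespace},
        "spec": {
            "targetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "updatePolicy": {"updateMode": mode},
        },
    }
```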

3. Governance and Policy

Standardizing deployments ensures consistency and reduces errors. Policy enforcement tools like OPA Gatekeeper act as admission controllers, validating resource configurations before they are admitted to the cluster. Automated policy checks block non-compliant deployments.
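Gatekeeper policies are written in Rego, but the logic they express is often simple. As an illustration only, the Python below applies a hypothetical policy to a Deployment manifest, the kind of check a team might also run in CI before anything reaches the admission controller: every container must set resource requests and a memory limit, and must use an explicitly pinned image rather than `latest` or no tag.

```python
def violations(manifest: dict) -> list:
    """Check a Deployment-like manifest against a hypothetical house policy."""
    problems = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for c in pod_spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        res = c.get("resources", {})
        if "requests" not in res:
            problems.append(f"{name}: missing resource requests")
        if "memory" not in res.get("limits", {}):
            problems.append(f"{name}: missing memory limit")
        image = c.get("image", "")
        last_segment = image.split("/")[-1]  # ignore registry host:port when looking for a tag
        if image.endswith(":latest") or ":" not in last_segment:
            problems.append(f"{name}: image must be pinned to a version tag or digest")
    return problems
```

Pinning image tags also supports the immutable-infrastructure practice: a given manifest always refers to the same artifact.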

4. Automation

CI/CD pipelines automate building, testing, and deploying applications. Infrastructure as Code tools like Terraform manage cluster provisioning. Automation is critical to reducing manual errors and improving deployment velocity for engineering organizations.

Tools for Managing Kubernetes Clusters

1. Core Kubernetes Tools

`kubectl` provides command-line access to Kubernetes APIs while the Kubernetes Dashboard offers web-based cluster visualization and management. These native tools form the foundation for cluster operations, maintenance, and visibility.

2. CLI/UI Enhancements

Multiple tools help visualize the state of your Kubernetes clusters. K9s provides a terminal-based UI for managing clusters, while Lens offers a desktop application with integrated monitoring and troubleshooting. These tools improve operational efficiency through better interfaces.

3. Package Management and GitOps

Helm standardizes application packaging and deployment. Argo CD and Flux enable GitOps workflows with automatic synchronization. These tools simplify application management and deployment automation.

4. Monitoring and Observability

Tools like Prometheus collect time series metrics from clusters and applications. Grafana visualizes metrics through dashboards with powerful customization. The ELK Stack provides centralized logging and analysis capabilities.

5. Cloud-Specific Managed Services

EKS, AKS, and GKE provide managed Kubernetes control planes. These services reduce operational burden by handling control plane upgrades and availability. Organizations typically still manage worker nodes and application lifecycles themselves.

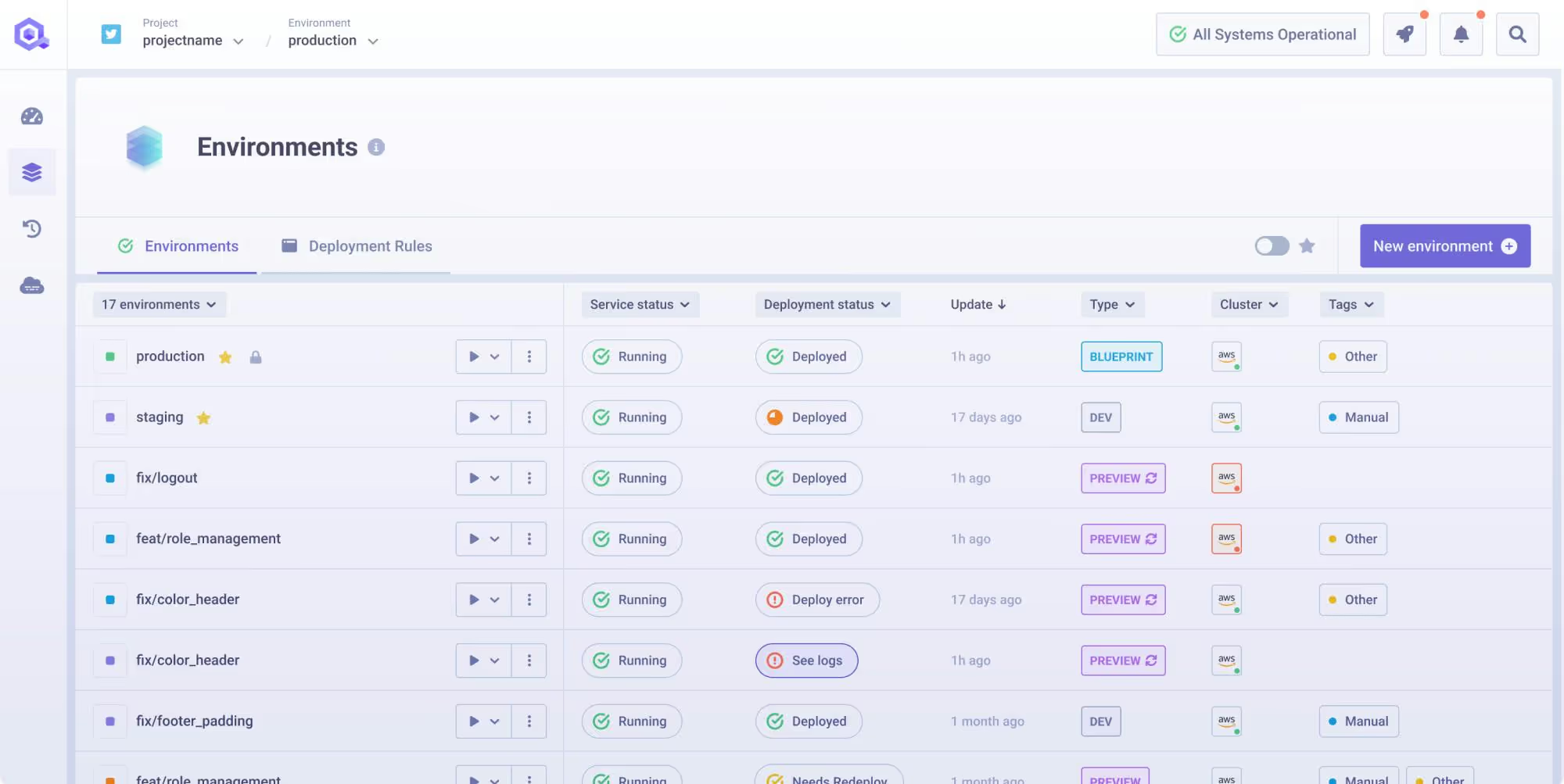

6. Unified Management Platforms

Platforms like Qovery simplify cluster management by providing integrated interfaces for deployment, monitoring, and cost optimization. Qovery abstracts Kubernetes complexity by automating deployment and infrastructure management while keeping access to the full range of Kubernetes features, letting teams benefit fully from the platform without needing deep expertise.

Kubernetes Cost Optimization Strategies

Kubernetes cost optimization challenges stem from resource over-provisioning, inefficient scheduling, and lack of visibility. Engineering organizations often struggle to allocate costs accurately or identify optimization opportunities when running complex Kubernetes deployments.

1. Right-Sizing Resources

Setting accurate requests and limits prevents resource waste. Teams should monitor actual usage and adjust specifications accordingly. Watching application behavior matters in both directions: under-provisioned applications face performance issues, while over-provisioned applications waste resources.
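One simple way to turn monitored usage into a request is to take a high percentile of observed samples and add headroom. The sketch below uses a nearest-rank percentile; the 95th percentile and 15% headroom are arbitrary assumptions for illustration, not recommended values, and the samples could be CPU millicores or memory MiB taken from your monitoring system.

```python
import math


def recommend_request(samples, percentile: float = 95, headroom: float = 0.15) -> int:
    """Suggest a request from usage samples: nearest-rank percentile plus headroom."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest sample covering `percentile` percent of the data.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    return math.ceil(ordered[rank - 1] * (1 + headroom))
```

Basing the request on a percentile rather than the peak avoids sizing every pod for a rare spike; memory deserves extra caution, since a container that outgrows its memory limit is killed rather than slowed.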

2. Leveraging Autoscaling

Autoscaling matches resources to demand automatically. HPA scales applications based on load, while Cluster Autoscaler adjusts node count. Proper autoscaling configuration can significantly reduce costs.

3. Spot Instances and Savings Plans

Cloud providers offer discounted compute through spot instances and savings plans. Because the provider can reclaim spot instances at short notice, they work well for fault-tolerant workloads, while savings plans reduce costs for predictable, steady usage patterns.

4. Storage Optimization

Choosing appropriate storage tiers balances performance and cost. Frequently accessed data requires fast storage, while archives use cheaper options. Regular cleanup of unused persistent volumes reduces costs.
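A sketch of the cleanup step: the function below scans the JSON produced by `kubectl get pv -o json` and lists PersistentVolumes that are no longer bound to a claim. `Released` volumes have lost their claim but still hold storage (typically under a `Retain` reclaim policy); `Available` volumes may be intentionally pre-provisioned, so the output is a list to review, not a list to delete blindly.

```python
import json
import sys


def unbound_volumes(pv_list: dict) -> list:
    """(name, phase, capacity) for PVs not bound to a claim, from `kubectl get pv -o json`."""
    out = []
    for pv in pv_list.get("items", []):
        phase = pv.get("status", {}).get("phase")
        # "Available" may be deliberate pre-provisioning: review before deleting.
        if phase in ("Released", "Available", "Failed"):
            capacity = pv.get("spec", {}).get("capacity", {}).get("storage", "?")
            out.append((pv["metadata"]["name"], phase, capacity))
    return out


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl get pv -o json > pvs.json && python unbound_pvs.py pvs.json
    with open(sys.argv[1]) as fh:
        for name, phase, capacity in unbound_volumes(json.load(fh)):
            print(f"{name}\t{phase}\t{capacity}")
```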

5. Monitoring and Visibility

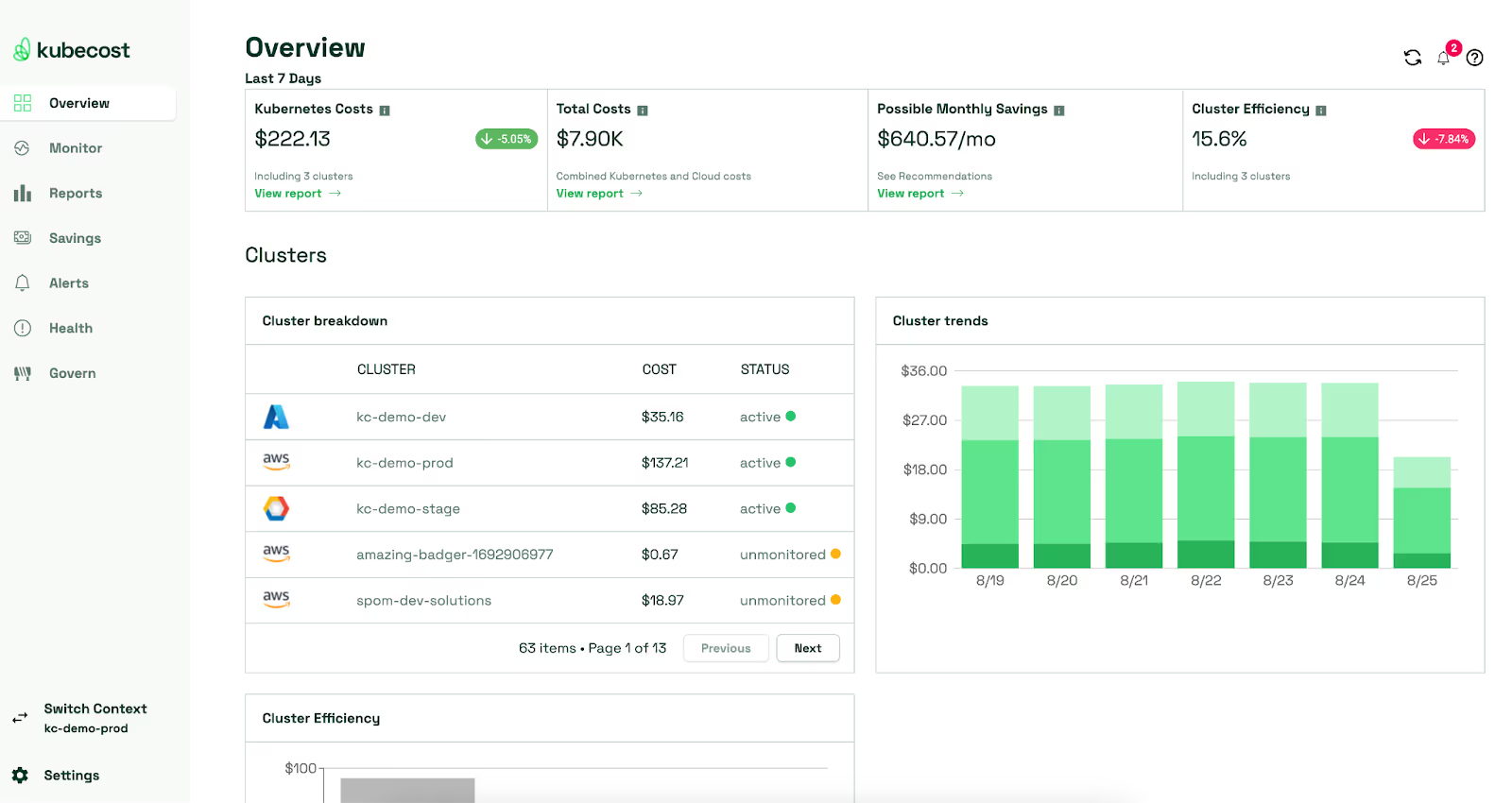

Cost monitoring tools identify idle or underutilized resources. Regular reviews uncover optimization opportunities and limit cloud sprawl. Detailed cost allocation enables team accountability and informed decision-making when teams manage their resources and deployments.
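As a simplified illustration of cost allocation, the sketch below charges each pod's CPU and memory requests to the team named in its `team` label, using hypothetical per-core-hour and per-GiB-hour prices. Pods without the label land in an `unallocated` bucket, which makes labelling gaps visible. Dedicated tools use richer models (actual usage, idle capacity, shared costs); this only shows the basic idea of request-based showback, and the pod records are simplified dicts rather than raw API objects.

```python
from collections import defaultdict


def cost_by_team(pods, cpu_price_per_core_hour: float, mem_price_per_gib_hour: float,
                 hours: float) -> dict:
    """Allocate request-based cost to teams; prices are hypothetical inputs."""
    totals = defaultdict(float)
    for pod in pods:
        team = pod.get("labels", {}).get("team", "unallocated")
        cpu_cost = pod["cpu_request_m"] / 1000 * cpu_price_per_core_hour * hours
        mem_cost = pod["mem_request_mi"] / 1024 * mem_price_per_gib_hour * hours
        totals[team] += cpu_cost + mem_cost
    return dict(totals)
```

Allocating by requests rather than usage is a deliberate choice here: requests are what reserves node capacity, so they are what a team is effectively paying for.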

6. FinOps Principles

FinOps gives teams visibility into costs and helps them understand how their spending affects the company as a whole. This cultural shift improves cost optimization outcomes by making operating teams aware of, and accountable for, the costs they generate.

7. Cost Optimization Tools

Tools like Kubecost provide Kubernetes-native cost visibility and optimization recommendations, and any cluster operator can run them. CloudZero is a cost optimization platform that automates the analysis of your infrastructure to detect cost-saving opportunities. PerfectScale automates the right-sizing of your cluster. Qovery simplifies the integration of cost-management tooling into your cluster and brings that visibility into its unified management interface.

Qovery: Simplifying Kubernetes Management and Cost

Qovery addresses common Kubernetes management challenges through automation and simplified interfaces. The platform is an automated Kubernetes deployment tool that reduces operational complexity while maintaining Kubernetes capabilities.

The platform handles cluster provisioning, security configuration, and monitoring setup automatically. Teams deploy applications through developer-friendly interfaces without managing the underlying Kubernetes complexity. This approach enables organizations to leverage Kubernetes without dedicated platform engineering teams.

Qovery provides cost visibility integrated into deployment workflows. The platform identifies optimization opportunities and implements right-sizing recommendations. Organizations get a better developer experience on Kubernetes while keeping costs under control.

Automation eliminates manual configuration errors and reduces operational burden. Teams focus on application development rather than infrastructure management, bringing velocity and productivity to engineering organizations as a whole.

The platform scales with organizational growth without a proportional increase in operational overhead, supporting mid-size companies as they grow while keeping costs lean so they stay competitive and efficient.

Conclusion

Well-defined Kubernetes management approaches enable organizations to operate clusters efficiently, securely, and cost-effectively. Best practices across security, reliability, efficiency, and operations create foundations for successful Kubernetes adoption.

Strategic considerations around cluster design, scaling, governance, and automation align technical decisions with business requirements. The ecosystem of management tools provides capabilities for every operational need, from monitoring to cost optimization.

Effective Kubernetes cluster management and cost optimization directly impact organizational success. Platforms that simplify these complex processes enable teams to focus on delivering business value rather than managing infrastructure complexity.
