How to deploy a Docker container on Kubernetes: step-by-step guide

Key Points:

- Complexity of Manual Deployment: Deploying a simple Docker container manually to Kubernetes is surprisingly complex, requiring six distinct steps (from building the image to debugging) that demand expertise in multiple YAML manifests (Deployment, Service), networking concepts, and kubectl operations.

- The YAML/Tooling Friction: The core difficulty lies in generating and managing multiple YAML configuration files, correctly defining resource requests, handling registry authentication, and constantly switching context between local tools and cluster environments, significantly slowing development.

- The Automated Solution: Platform tools like Qovery act as an Internal Developer Platform (IDP) that abstracts this complexity, automating the YAML generation, networking, load balancing, and CI/CD pipelines. This allows developers to focus on application code while still retaining the benefits of Kubernetes orchestration.

Kubernetes is the standard for container orchestration, but getting a single Docker container running can be a surprisingly complex task, even for experienced teams. The process demands creating multiple YAML configuration files, mastering networking concepts, managing image registries, and troubleshooting across distributed systems.

What should be trivial often turns into a lengthy, friction-filled deployment process. This guide breaks down the full 6-step manual gauntlet, and then shows you the single-step automated approach that simplifies the workflow entirely.

Understanding Kubernetes Components

Before deploying, teams need to be familiar with the following Kubernetes primitives:

- Pods are the smallest deployable units, wrapping one or more containers with shared storage and network resources. A pod represents a single instance of a running process, though it can contain multiple tightly coupled containers working together.

- Deployments manage Pod replicas, handling updates and rollbacks automatically. When a deployment specification changes, Kubernetes creates new pods with the updated configuration and gradually terminates old ones. This rolling update strategy minimizes downtime during releases.

- Services expose pods to network traffic, providing connectivity even as pods are created and destroyed. Since pods receive dynamic networking addresses and can be replaced at any time, services offer a consistent DNS name and load balancing across healthy replicas.

- Ingress resources manage external access, routing traffic to the appropriate services based on hostnames or URL paths. Ingress controllers handle TLS termination, allowing teams to configure HTTPS access without modifying application code.

The Traditional 6-Step Manual Deployment Process

Deploying a Docker container to Kubernetes step by step requires multiple configuration files and command-line operations. Each step introduces potential failure points that demand specific knowledge to resolve. We’ll go over all the necessary steps to deploy a new container manually.

Step 1: Create and Build the Docker Image

The process begins with a working Dockerfile in the application repository. Build the image locally and verify that the container runs correctly before pushing it to a registry.

An example Dockerfile for a Node.js project can look as follows:

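```
FROM node:20-alpine
WORKDIR /app
# Copy the dependency manifests first so this layer stays cached
COPY package*.json ./
# Install production dependencies only
RUN npm ci --omit=dev
COPY . .
EXPOSE 8080
# Run as the unprivileged user shipped with the official Node.js image
USER node
CMD ["node", "server.js"]
```

The Alpine base image provides a minimal footprint, while the non-root USER directive follows security best practices. Build and run the image locally to check that the container starts correctly before pushing it anywhere: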

```
docker build -t myapp:v1.0.0 .
docker run -p 8080:8080 myapp:v1.0.0
```

Use semantic version tags rather than relying on the `latest` tag. The `latest` tag creates ambiguity about which version runs in production and complicates rollback procedures when issues arise. Teams adopting GitOps practices tag images with commit SHAs or release versions for complete traceability.
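As a rough sketch of commit-based tagging, assuming the build runs inside a Git checkout: the `image_ref` helper, the `registry.example.com` host, and the `v1.0.0` fallback are illustrative choices, not part of Docker or Git.

```shell
# Hypothetical helper: compose a full image reference from
# registry, image name, and tag.
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

# Use the short commit SHA as the tag; fall back to a fixed version
# when the directory is not a Git repository (assumed fallback).
TAG="$(git rev-parse --verify --short HEAD 2>/dev/null || echo v1.0.0)"
IMAGE="$(image_ref registry.example.com myapp "$TAG")"
echo "$IMAGE"
```

The resulting value can then be passed to the build, for example `docker build -t "$IMAGE" .`, so every image maps back to exactly one commit.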

Step 2: Push the Image to a Container Registry

Kubernetes clusters pull images from container registries, which must be accessible from the cluster's network. Common options include Docker Hub, Google Container Registry, Amazon ECR, or a private registry.

```
docker tag myapp:v1.0.0 registry.example.com/myapp:v1.0.0
docker push registry.example.com/myapp:v1.0.0
```

Registry authentication adds another configuration layer. Teams must create Kubernetes Secrets containing registry credentials and reference them in deployment manifests using imagePullSecrets. Forgetting this step results in ImagePullBackOff errors that can be difficult to diagnose.
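A minimal sketch of that registry setup follows. The secret name `regcred`, the wrapper function, and the fragment file name are assumptions chosen for illustration; `kubectl create secret docker-registry` is the standard command it wraps.

```shell
# Hypothetical wrapper: store registry credentials as a Secret.
# Arguments: username, password. The name "regcred" is an assumption.
create_registry_secret() {
  kubectl create secret docker-registry regcred \
    --docker-server=registry.example.com \
    --docker-username="$1" \
    --docker-password="$2"
}

# Fragment to merge into the Pod template of the Deployment manifest
# so that nodes use the Secret when pulling the image.
cat > image-pull-secret-fragment.yaml <<'EOF'
spec:
  template:
    spec:
      imagePullSecrets:
        - name: regcred
EOF
```

The Secret must live in the same namespace as the Pods that reference it; otherwise the pull still fails with ImagePullBackOff.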

Security scanning requirements at this stage can block deployments if vulnerabilities are detected in base images or dependencies. Many organizations mandate vulnerability scanning in CI pipelines, adding another integration point that teams must configure and maintain.

Private registries require network connectivity from the cluster. Firewall rules, VPC peering, and DNS resolution all affect whether nodes can successfully pull images during deployment.

Step 3: Define the Deployment YAML

The Deployment manifest represents the core friction point in Kubernetes deployments. This YAML file specifies how Kubernetes should run and manage the application containers.

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: registry.example.com/myapp:v1.0.0
          ports:
            - containerPort: 8080
          resources:
            requests:
              memory: "128Mi"
              cpu: "250m"
            limits:
              memory: "256Mi"
              cpu: "500m"
```

This configuration requires understanding API versions, label selectors, resource specifications, and the relationship between Deployments, ReplicaSets, and Pods. Missing or mismatched labels cause silent failures where Deployments create Pods that Services cannot discover.

Resource requests and limits require careful tuning. Requests determine scheduling decisions, while limits trigger throttling or termination when exceeded. Setting these values incorrectly leads to either wasted cluster capacity or application instability under load.
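To make the capacity impact concrete, the following sketch multiplies the per-Pod values from the manifest in this guide by the replica count. The variable names are illustrative only.

```shell
# Values taken from the Deployment manifest in this guide.
REPLICAS=3
CPU_REQUEST_M=250      # millicores requested per Pod
CPU_LIMIT_M=500        # millicores allowed per Pod
MEM_REQUEST_MI=128     # MiB requested per Pod
MEM_LIMIT_MI=256       # MiB allowed per Pod

# Capacity the scheduler reserves across all replicas.
TOTAL_CPU_REQUEST_M=$((REPLICAS * CPU_REQUEST_M))
TOTAL_MEM_REQUEST_MI=$((REPLICAS * MEM_REQUEST_MI))

# Upper bound the replicas may consume before being throttled (CPU)
# or terminated (memory).
TOTAL_CPU_LIMIT_M=$((REPLICAS * CPU_LIMIT_M))
TOTAL_MEM_LIMIT_MI=$((REPLICAS * MEM_LIMIT_MI))

echo "reserved: ${TOTAL_CPU_REQUEST_M}m CPU, ${TOTAL_MEM_REQUEST_MI}Mi memory"
echo "ceiling:  ${TOTAL_CPU_LIMIT_M}m CPU, ${TOTAL_MEM_LIMIT_MI}Mi memory"
```

With three replicas, the cluster must have 750m CPU and 384Mi memory free for scheduling, while the Pods together can use up to 1500m CPU and 768Mi memory.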

Production deployments typically include additional configuration for health checks, environment variables, volume mounts, and security contexts. Each addition increases manifest complexity and introduces more potential for misconfiguration.
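As one example of such additions, health checks could be sketched as the fragment below, which belongs under the `myapp` container entry of the Deployment. The `/healthz` path, the timing values, and the file name are assumptions; the application must actually serve that endpoint on port 8080.

```shell
# Sketch of a probes fragment for the container entry in the Deployment.
# The /healthz path and all timing values are assumptions.
cat > probes-fragment.yaml <<'EOF'
readinessProbe:
  httpGet:
    path: /healthz
    port: 8080
  initialDelaySeconds: 5
  periodSeconds: 10
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
  initialDelaySeconds: 15
  periodSeconds: 20
EOF
```

The readiness probe controls whether a Pod receives traffic from a Service, while the liveness probe controls whether the container is restarted.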

Step 4: Define the Service YAML

A second YAML file exposes the Deployment to network traffic. Kubernetes offers several Service types, each with different networking implications; the three most commonly used to expose workloads are ClusterIP, NodePort, and LoadBalancer.

```
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer
```

ClusterIP creates an internal-only endpoint accessible within the cluster. This type suits backend services that other applications consume but should not be exposed externally. NodePort exposes the Service on each node's IP at a static port.

LoadBalancer provisions an external load balancer in supported cloud environments. Teams often use Ingress controllers to consolidate external access through a single load balancer.
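A possible Ingress for the Service in this guide is sketched below. The hostname `myapp.example.com`, the `nginx` ingress class, and the `myapp-tls` Secret are assumptions; they depend on which Ingress controller is installed and how certificates are issued.

```shell
# Sketch of an Ingress routing one hostname to myapp-service.
# Hostname, ingress class, and TLS Secret name are assumptions.
cat > ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp-ingress
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - myapp.example.com
      secretName: myapp-tls
  rules:
    - host: myapp.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: myapp-service
                port:
                  number: 80
EOF
```

When traffic enters through an Ingress like this, the Service itself can usually be of type ClusterIP, since the Ingress controller's load balancer is the only external entry point.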

Choosing the wrong type leaves applications either inaccessible from outside the cluster or exposed more widely than intended, so the choice requires solid networking knowledge and careful configuration. A mismatch between the Service port and the container's targetPort is another common source of connectivity issues that produce no obvious error messages.
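Because these failures are silent, one way to check the wiring is to ask the cluster which Pods a Service actually routes to. The two helper functions below are hypothetical wrappers around standard kubectl commands.

```shell
# Hypothetical helpers wrapping standard kubectl commands.

# List the Pod IP:port pairs the Service currently routes to.
# An empty list usually means the selector matches no ready Pods.
service_endpoints() {
  kubectl get endpoints "$1"
}

# Show the Pods that carry the label the Service selects on.
pods_for_label() {
  kubectl get pods -l "$1" --show-labels
}
```

For the manifests in this guide, `service_endpoints myapp-service` should list three addresses on port 8080, and `pods_for_label app=myapp` should list the three Pods created by the Deployment.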

Step 5: Apply Configuration with kubectl

With manifests written, use kubectl to apply them to the cluster. This requires a properly configured kubeconfig file pointing to the correct cluster context.

```
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml
```

The dependency on local tooling creates additional setup overhead. Each developer needs kubectl installed and configured correctly, with credentials rotated periodically for security reasons. Version mismatches between kubectl and cluster API servers occasionally cause unexpected behavior.

Teams managing multiple clusters switch contexts frequently, increasing the risk of deploying to unintended environments. Namespace confusion adds another dimension, as resources applied to the wrong namespace may go unnoticed until runtime errors occur.
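One defensive pattern is to verify the active context before applying anything. The guard below is a hypothetical helper; the context name passed to it is whatever the team's kubeconfig defines.

```shell
# Hypothetical guard: refuse to continue unless kubectl points at the
# expected context. Returns non-zero on mismatch.
require_context() {
  current="$(kubectl config current-context)"
  if [ "$current" != "$1" ]; then
    echo "refusing to deploy: context is '$current', expected '$1'" >&2
    return 1
  fi
}
```

A deployment script could then run `require_context staging && kubectl apply -n myapp -f deployment.yaml -f service.yaml`, where `staging` and the `myapp` namespace are assumed names. Passing `-n` explicitly avoids relying on the context's default namespace.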

Step 6: Verify and Debug

Deployment success requires manual verification. Pods may fail to start for numerous reasons that only appear in logs or event streams.

```
kubectl get pods
kubectl describe pod myapp-deployment-xxxxx
kubectl logs myapp-deployment-xxxxx
```

Common failure modes include image pull errors from authentication problems, container crashes from missing environment variables, and readiness probe failures from misconfigured health checks. Each requires different debugging approaches and Kubernetes-specific knowledge to resolve.

CrashLoopBackOff status indicates the container starts and fails repeatedly. Diagnosing the root cause requires examining logs from previous container instances. Teams without centralized logging may lose visibility into crash causes.
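The logs of the previous container instance can be retrieved with the `--previous` flag of `kubectl logs`. The helper names below are hypothetical; the kubectl commands they wrap are standard.

```shell
# Hypothetical helper: logs of the previous, crashed container instance
# in the given Pod.
previous_logs() {
  kubectl logs "$1" --previous
}

# Hypothetical helper: cluster events in the current namespace,
# sorted by last occurrence (most recent at the bottom).
recent_events() {
  kubectl get events --sort-by=.lastTimestamp
}
```

Calling `previous_logs` with the full Pod name from `kubectl get pods` shows what the container printed before its last restart, which a plain `kubectl logs` on a freshly restarted container would miss.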

Network policies, if enabled, can silently block traffic between Pods. Debugging connectivity issues requires understanding the cluster's CNI plugin and any applied network restrictions.

The Automated Solution: Deploying with Qovery

The manual process demonstrates why teams seek ways to simplify Kubernetes deployment. Platform tools like Qovery abstract the underlying complexity while maintaining the benefits of Kubernetes orchestration.

The 1-Step Qovery Approach

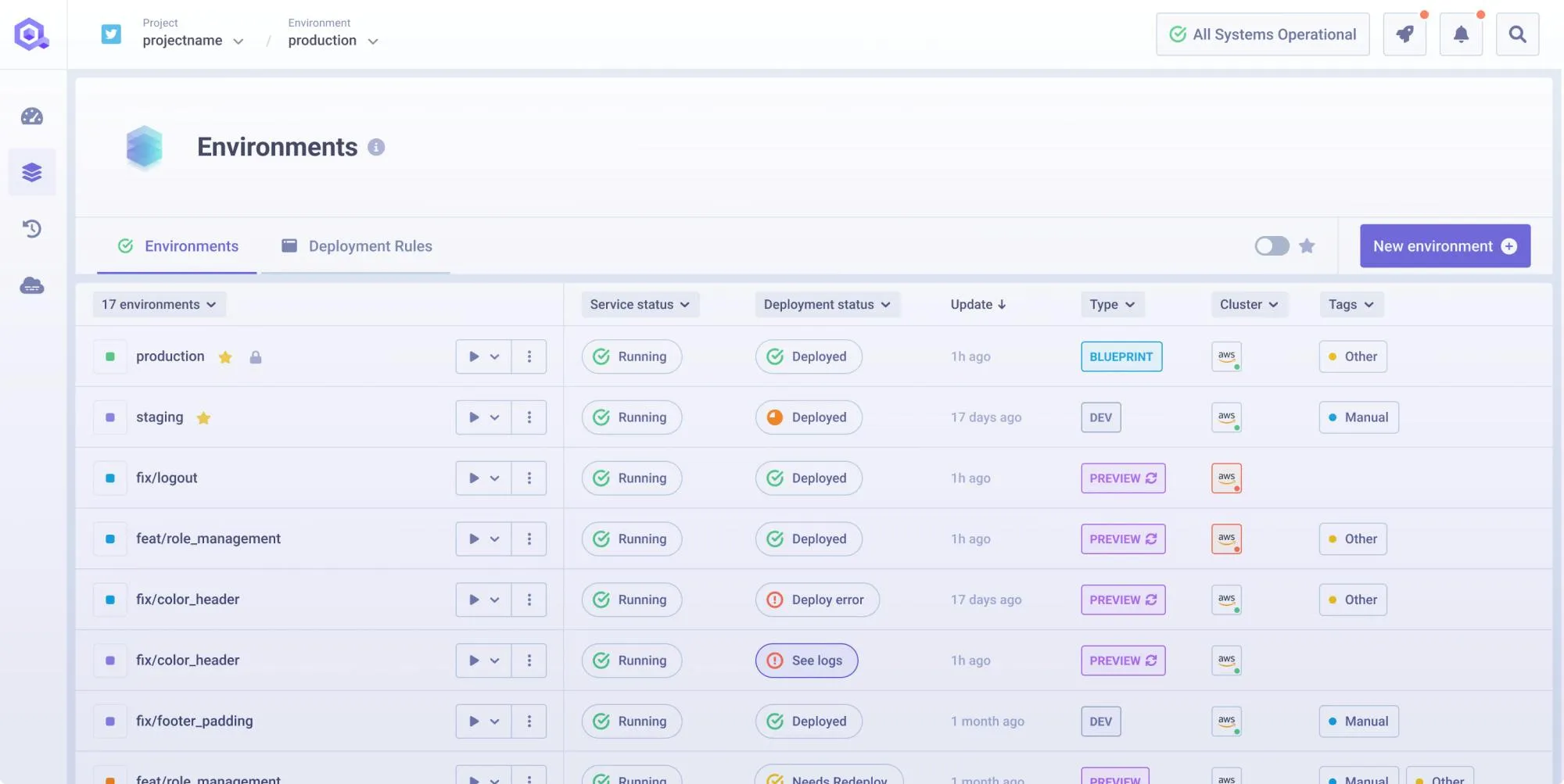

Qovery is an Internal Developer Platform that handles YAML generation, networking configuration, and deployment automation. The workflow simplifies to connecting a Git repository and selecting the application. It abstracts the complexities of Kubernetes deployment while letting organizations benefit from its features.

To deploy new applications, teams connect their GitHub, GitLab, or Bitbucket repository to Qovery. Engineers then select which Dockerfile or application to deploy, and the platform configures the build process automatically.

Qovery generates the necessary Kubernetes resources, configures Ingress routing with TLS certificates, and provisions load balancers automatically. It also manages the deployment itself, monitoring the created resources and application health. Developers work with application code while Qovery manages infrastructure concerns.

How This Addresses Manual Pain Points

The YAML configuration from Steps 3 and 4 disappears entirely. Qovery generates deployment manifests based on application requirements detected from the repository. Teams specify resource needs through a web interface rather than editing YAML files directly.

Automated CI/CD replaces the manual image push and kubectl apply workflow from Steps 2 and 5. Git push triggers the complete pipeline from build through deployment. Rollbacks execute through the interface rather than requiring kubectl commands and manifest version tracking.

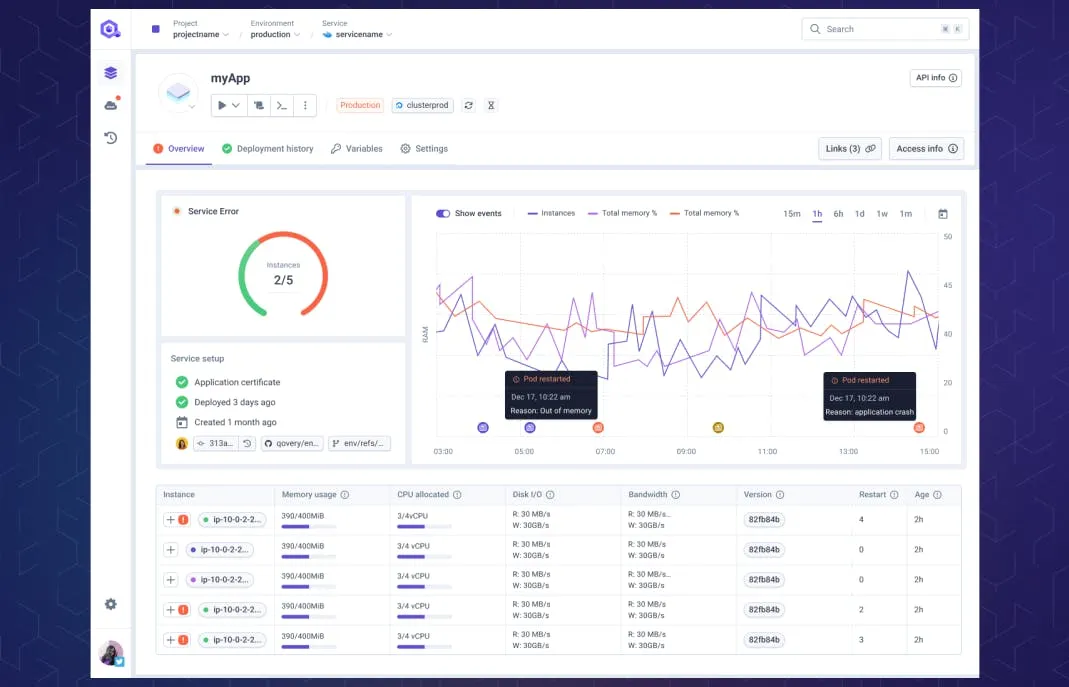

Built-in observability simplifies the debugging process from Step 6. Logs stream directly in the dashboard without requiring kubectl access or cluster credentials. Deployment status and resource metrics appear without additional monitoring configuration or third-party tooling setup.

Qovery can also create Ephemeral Environments for every pull request. These temporary, isolated copies of the full application stack enable testing changes before merging and releasing to production, which helps validate each change before it reaches end customers.

Conclusion

Understanding how to deploy a Docker container in Kubernetes provides valuable knowledge about container orchestration fundamentals. The manual 6-step process reveals the important configuration decisions that production systems require to run correctly.

For teams shipping features regularly, automation removes friction without sacrificing control. The complexity of YAML manifests, kubectl operations, and debugging distributed systems slows development velocity when handled manually for every deployment.

Qovery and similar DevOps automation tools offer a path between raw Kubernetes complexity and fully managed platforms. Teams retain Kubernetes benefits while focusing on application development rather than infrastructure configuration.
