The importance of SemVer for your applications

What is SemVer?

First of all, let's recall what SemVer is: SemVer (for Semantic Versioning) is the process of assigning either unique version names or unique version numbers to unique states of computer software. Within a given version number category (e.g., major, minor), these numbers are generally assigned in increasing order and correspond to new developments in the software. [Wikipedia]

1st issue: Patch version

To give a bit of context: we use EKS (Kubernetes on AWS) for Qovery production, and we wanted to remove remote SSH access from our Kubernetes nodes.

Config change

In Terraform, it was just a few lines to remove:

The workflow for applying those changes was:

- Force the deployment of new EKS nodes (EC2 instances behind it)

- Move pods (a set of containers) from old nodes to fresh new nodes

- Delete old nodes

Before running the changes, we checked that every pod on the cluster was running smoothly; there was no issue. Since everything looked OK and the operation was a routine one, we decided to apply the change.

Outage

The rollout occurred, and all pods moved from the old nodes to the new ones. Pods were able to start without problems... except one: our Qovery Engine (written in Rust), which is dedicated to infrastructure deployments.

The pod was crashing 2 seconds after starting, during the initialization phase, and was in CrashLoopBackOff status:

We've got an unwrap() here (an uncaught error) on a Result. So this error was either deliberately left unhandled or definitely not expected.
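To illustrate what an unwrap() on a Result implies, here is a minimal sketch. It is not the Engine's or the library's actual code: `read_number` and `number_or_default` are hypothetical names chosen for the example. Calling unwrap() on an `Err` panics and takes the process down, whereas matching on the Result lets the program decide what to do.

```rust
use std::num::ParseIntError;

// Hypothetical helper: parsing can fail, so it returns a Result.
fn read_number(s: &str) -> Result<u32, ParseIntError> {
    s.trim().parse::<u32>()
}

// Handles the error explicitly instead of panicking.
fn number_or_default(s: &str, default: u32) -> u32 {
    match read_number(s) {
        Ok(n) => n,
        Err(err) => {
            eprintln!("could not parse {:?}: {}", s, err);
            default
        }
    }
}

fn main() {
    // `read_number("abc").unwrap()` would panic here and abort the program.
    assert!(read_number("abc").is_err());

    // The handled version keeps running with a fallback value.
    assert_eq!(number_or_default("abc", 0), 0);
    assert_eq!(number_or_default("42", 0), 42);
}
```

Whether a fallback value is appropriate depends on the context; the point is only that the failure path becomes an explicit choice rather than a crash.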

Investigation

Looking deeper into our Cargo dependencies (Cargo.toml), we saw that we did not use this "procfs" library directly, which means it's a transitive dependency. So we dug into the Cargo.lock to find it, and bingo! The Prometheus library was using it:

Alright, we see the procfs version is 0.9.1, as described in the error message. Looking at the code in src/lib.rs at line 303, we found this:

We can see inside current() that it reads a file (the kernel version):

And the function in charge of this splits on dots and ASCII digits:

Here comes the fun fact! Let's take a look at the kernel version on AWS EC2 instances deployed by EKS:

And we expected to have this struct:

So the problem lies in the kernel version number (4.14.256-197.484.amzn2.x86_64).

The culprit is 256: the patch number was expected to fit in a u8, whose maximum value is 255, so at least a u16 was needed (https://github.com/eminence/procfs/pull/140).
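The overflow can be reproduced with a short sketch. This is not the procfs code: `KernelVersion` and `parse_kernel_version` are hypothetical names, and the parsing is deliberately simplified (it only keeps the "MAJOR.MINOR.PATCH" part before the first dash). The patch type is generic so the same function can be tried with a u8 and with a u16.

```rust
use std::str::FromStr;

// Hypothetical struct for illustration; the patch type is a parameter.
#[derive(Debug, PartialEq)]
struct KernelVersion<P> {
    major: u8,
    minor: u8,
    patch: P,
}

// Simplified parser: returns None if any component is missing or does not fit.
fn parse_kernel_version<P: FromStr>(release: &str) -> Option<KernelVersion<P>> {
    // Drop the build suffix, e.g. "-197.484.amzn2.x86_64".
    let core = release.split('-').next()?;
    let mut parts = core.split('.');
    let major = parts.next()?.parse().ok()?;
    let minor = parts.next()?.parse().ok()?;
    let patch = parts.next()?.parse().ok()?;
    Some(KernelVersion { major, minor, patch })
}

fn main() {
    let release = "4.14.256-197.484.amzn2.x86_64";

    // 256 does not fit in a u8 (max 255), so parsing fails.
    assert_eq!(parse_kernel_version::<u8>(release), None);

    // With a u16 patch field, the same string parses fine.
    assert_eq!(
        parse_kernel_version::<u16>(release),
        Some(KernelVersion { major: 4, minor: 14, patch: 256 })
    );
}
```

If the `None` (or an equivalent `Err`) is then unwrapped without handling, the result is exactly the kind of startup crash described above.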

Such a high number is uncommon, but not rare. We can see it in Chrome, the vanilla kernel, and many other big projects. It's also common to find a "-xxx" suffix after the patch version, which corresponds to a build change.

Unfortunately, this library wasn't expecting numbers higher than 255, and the SemVer discussion on this topic doesn't look simple: https://github.com/semver/semver/issues/304.

Solutions and fix

At this moment, we had 3 solutions in mind:

- Temporarily remove Prometheus lib

- Fix those issues ourselves and report them upstream. It can take some time, depending on the number of changes and their impact (primarily on the lib's backward compatibility)

- Look into commits, and try to move to newer versions to see if those issues were fixed

Temporarily remove Prometheus lib

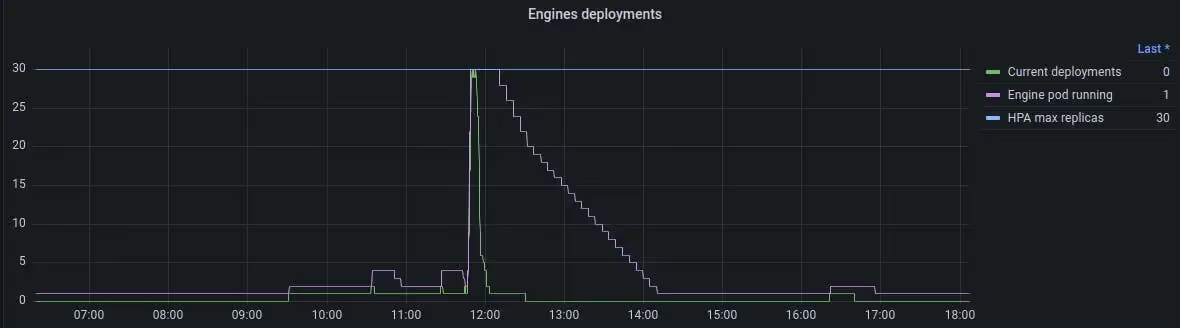

We decided not to remove the Prometheus lib because, at Qovery, it's used not only for observability but also to drive the Kubernetes Horizontal Pod Autoscaler (HPA). It helps us absorb the load of infrastructure changes automatically by scaling the number of Engines according to deployment requests.

Fix those issues ourselves

We wanted to go fast. Making a fork, patching the libs, and later on making a PR to the official project repository sounds like a good solution, but it would take time. So before investing this time, we needed to be sure that newer commits of procfs and Prometheus did not already include fixes.

Move to newer versions

In the end, the latest released version of "procfs" had the bug fixed! The released Prometheus lib did not yet depend on this latest procfs release, but its main branch did.

Thanks to Rust and Cargo, it's effortless to point a dependency to a Git commit. We updated the Cargo.toml file like this:

And finally, we were able to quickly release and deploy a newer Engine version with those fixes.

2nd issue: AWS ElastiCache and Terraform

This one was a pain for a lot of companies. AWS decided to change the version format of their ElastiCache service. Before version 6, versions followed SemVer:

- 5.0.0

- 4.0.10

- 3.2.10

When version 6 was released, they decided to use a "6.x" version format! (https://web.archive.org/web/20210819001915/https://docs.aws.amazon.com/AmazonElastiCache/latest/red-ug/SelectEngine.html)

As you can imagine, comparing 2 versions with 2 different types (int vs. string) would fail in a lot of software like Terraform. You can easily find all the issues related to this change on the "Terraform AWS provider" GitHub issue page: https://github.com/hashicorp/terraform-provider-aws/issues?q=elasticache+6.x
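To make the type problem concrete, here is a small sketch. It is not how Terraform or its AWS provider actually parse versions; `parse_semver` is a hypothetical, deliberately minimal parser for "MAJOR.MINOR.PATCH" strings. Numeric versions can be compared component by component, while "6.x" cannot even be parsed.

```rust
// Hypothetical minimal parser: accepts exactly three numeric components.
fn parse_semver(s: &str) -> Option<(u64, u64, u64)> {
    let mut parts = s.split('.');
    let major = parts.next()?.parse().ok()?;
    let minor = parts.next()?.parse().ok()?;
    let patch = parts.next()?.parse().ok()?;
    // Reject extra components such as "1.2.3.4".
    if parts.next().is_some() {
        return None;
    }
    Some((major, minor, patch))
}

fn main() {
    // Tuples compare field by field, which matches SemVer precedence
    // for plain MAJOR.MINOR.PATCH versions.
    assert!(parse_semver("3.2.10") < parse_semver("4.0.10"));
    assert!(parse_semver("4.0.10") < parse_semver("5.0.0"));

    // Numeric comparison gets 4.0.10 > 4.0.9 right, unlike string comparison.
    assert!(parse_semver("4.0.10") > parse_semver("4.0.9"));
    assert!("4.0.10" < "4.0.9");

    // "6.x" has no numeric minor or patch, so it cannot be parsed at all.
    assert_eq!(parse_semver("6.x"), None);
}
```

Any tool built on this kind of assumption has to special-case "6.x" once it appears, which is roughly what the linked issues are about.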

At the time, it was a headache both for people using Terraform (like Qovery) who wanted to upgrade from a previous version and for the "Terraform AWS provider" developers. AWS seems to have understood its mistake, as it recently removed 6.x and switched back to the SemVer format (https://docs.aws.amazon.com/AmazonElastiCache/latest/red-ug/SelectEngine.html). And so, here we go again on the "Terraform AWS provider" GitHub: https://github.com/hashicorp/terraform-provider-aws/issues/22385

Conclusion

Whether it's a big corporation like AWS or a small library maintained by a few developers, not strictly respecting SemVer rules, with the correct types, can easily have a considerable impact. As you can see, versioning your applications is not "cosmetic" at all.

Following some rules and good practices is essential if we want to build reliable software in our tech industry. SemVer is definitely one of them!
