Deploy to EKS, AKS, or GKE without writing a single line of YAML

Key points:

- The False Trade-off: Engineering teams historically had to choose between the simplicity of Heroku and the control of Kubernetes.

- The Convergence: Modern platforms like Qovery break this cycle by offering a "Heroku-like" developer interface that sits on top of your own cloud account (AWS/GCP/Azure).

- Zero YAML, Full Control: Developers deploy via Git push without touching manifests, while platform engineers retain full access to the underlying EKS/AKS/GKE clusters for compliance and optimization.

For years, engineering teams have been stuck in a dilemma. You could choose simplicity (Heroku), accepting vendor lock-in and skyrocketing costs. Or you could choose control (Kubernetes), accepting months of configuration headaches and the "YAML tax" on your developers.

Startups picked simplicity to move fast. Enterprises picked control to stay compliant. Both compromises are now obsolete.

You no longer need to sacrifice developer experience to run on your own infrastructure. Modern unified platforms deliver the "push-to-deploy" simplicity of a PaaS while running directly on managed Kubernetes services in your own cloud account.

In this guide, we explore how to break the trade-off: giving developers a zero-configuration interface while giving platform engineers the full power of AWS, Azure, and Google Cloud.

The Simplicity of the Past: The Heroku Benchmark

Heroku set the standard for developer experience in cloud deployment. The workflow is elegant: connect a Git repository, push code, and watch the application appear online. No servers to configure, no infrastructure to provision, no deployment scripts to maintain.

Why Developers Loved It

The appeal was immediate productivity, as developers could go from code to an application running in production within minutes. The platform handled building containers, provisioning resources, managing SSL certificates, and scaling based on demand.

This abstraction let developers focus entirely on application code. Infrastructure concerns disappeared behind a simple interface. New team members deployed their first changes on day one rather than spending weeks learning deployment procedures.

The platform detected application frameworks automatically and configured appropriate runtime environments. Developers specified what they wanted to run, not how to run it, and the environment was built for them automatically.

The Limits of Heroku

As applications grow, the constraints of traditional PaaS solutions like Heroku often shift from conveniences to operational roadblocks, prompting teams to search for alternatives.

- Scaling Costs & Pricing Models: Pricing scales poorly for compute-intensive workloads.

  - Compute: A single dyno running continuously often costs significantly more than equivalent compute instances on major cloud providers.

  - Data: Database costs at scale can exceed self-managed alternatives or native cloud DBs by wide margins.

- Vendor Lock-in & Strategic Risk: Applications built on Heroku often depend on proprietary features and infrastructure patterns.

  - Migration Difficulty: Moving away usually requires significant re-architecture rather than a simple "lift and shift."

  - The Trap: Engineering organizations often feel trapped as their application grows; costs increase, but leaving becomes technically expensive.

- Lack of Customization: Growing organizations often hit a "glass ceiling" where specific needs cannot be met.

  - Networking & Security: Custom networking configurations, specific security controls, or strict compliance requirements may be impossible to implement.

  - Geography: Teams needing data residency in specific regions outside of Heroku's support list find themselves unable to meet legal requirements.

The Power of the Present: The Kubernetes Reality

Managed Kubernetes services from major cloud providers offer what Heroku couldn't: power, flexibility, and cost efficiency at scale.

The Benefits of EKS, GKE, and AKS

Managed services like Amazon EKS, Google GKE, and Azure AKS provide enterprise-grade container orchestration while removing the heavy lifting associated with self-managed clusters.

Here are the key benefits:

- Reduced Operational Burden: Teams avoid the complex task of managing Kubernetes control planes. The cloud provider handles automatic upgrades, integrated security patching, and native cloud integrations, allowing engineers to focus on the application rather than the cluster internals.

- Seamless Scalability: Scalability becomes straightforward and automated. Kubernetes can handle horizontal scaling, load balancing, and resource scheduling without manual intervention.

  - Result: Applications can grow from handling hundreds of requests to millions without requiring fundamental architectural changes.

- Superior Cost Efficiency: At scale, managed Kubernetes is often more economical than PaaS alternatives.

  - Optimization: Teams can utilize reserved instances, spot pricing, and efficient cluster autoscaling to drive down compute costs.

  - Billing: You pay for actual resource consumption rather than being locked into arbitrary, pre-set pricing tiers.

- Limitless Customization & Compliance: There are no practical limits on configuration. Any container can run on Kubernetes, and any networking topology is possible. This allows for precise implementation of strict compliance requirements regarding data residency, encryption, and access control.

- Prevention of Vendor Lock-in: Kubernetes exposes the same core API across all major cloud providers. Because it relies on open container and orchestration standards, workloads can generally be moved between providers without application code changes, although provider-specific integrations (load balancers, storage classes, IAM) may need adjusting.
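To make the horizontal scaling mentioned above more concrete, here is a minimal sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the parameter names, and the default bounds are illustrative assumptions, and the real controller applies further rules (stabilization windows, pod readiness) that this sketch leaves out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # assumed bound for illustration
    max_replicas: int = 10,  # assumed bound for illustration
    tolerance: float = 0.1,  # the HPA's documented default tolerance
) -> int:
    """Approximate the HPA formula: ceil(current * currentMetric / targetMetric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # When the ratio is close enough to 1.0, the autoscaler leaves the count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    desired = math.ceil(current_replicas * ratio)

    # Clamp the result to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas averaging 90% CPU against a 60% target would be scaled to six, while a reading of 63% falls within the tolerance and leaves the count unchanged.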

The Complexity Barrier

While Kubernetes offers significant benefits, they come with substantial complexity costs. Production-ready Kubernetes requires a level of expertise that most development teams lack and, ideally, should not need to acquire.

Here is a breakdown of the specific barriers teams face:

- YAML Configuration Overload: YAML files define every aspect of a deployment. Even a simple web application requires a multitude of distinct files, including Deployment manifests, Service definitions, Ingress configurations, ConfigMaps (for environment variables), and Secrets (for credentials). Each file demands precise syntax and a deep understanding of Kubernetes concepts.

- Production-Grade Requirements: Basic manifests are just the beginning. Real-world production applications require additional YAML configurations for horizontal pod autoscalers, network policies, and service meshes. All of these must be created, managed, and versioned for each microservice across every environment.

- CI/CD Pipeline Complexity: Building containers, pushing to registries, updating manifests, and triggering deployments require an entire ecosystem of tooling. Teams must configure and maintain complex pipelines using tools like Jenkins, GitHub Actions, or ArgoCD, each requiring specific domain expertise.

- Infrastructure as Code (IaC) Burden: Tools like Terraform or Pulumi are needed to provision the Kubernetes clusters, networking, databases, and supporting services. This creates yet another layer of resources that must be maintained to keep a clear overview of the provisioned infrastructure.
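To give a sense of the configuration volume described above, the following sketch builds the three most basic manifests a simple web application typically needs (Deployment, Service, Ingress) as Python dictionaries and prints them as JSON, which Kubernetes accepts alongside YAML. The application name, image, and hostname are placeholders invented for this example, and a production setup would add probes, resource limits, TLS, ConfigMaps, Secrets, and more.

```python
import json


def web_app_manifests(
    name: str, image: str, host: str, port: int = 8080, replicas: int = 2
) -> list[dict]:
    """Return minimal Deployment, Service, and Ingress manifests for one web app."""
    labels = {"app": name}

    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }

    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # The selector must match the pod labels set in the Deployment template.
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }

    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": name, "port": {"number": 80}}
                                },
                            }
                        ]
                    },
                }
            ]
        },
    }

    return [deployment, service, ingress]


if __name__ == "__main__":
    # Placeholder values; replace with a real image and hostname.
    for manifest in web_app_manifests(
        "hello-web", "registry.example.com/hello-web:1.0.0", "hello.example.com"
    ):
        print(json.dumps(manifest, indent=2))
```

Even this stripped-down version runs to dozens of lines for a single service in a single environment, which is the overhead the list above describes.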

The Human Cost

Ultimately, this results in two major inefficiencies:

- Developer Distraction: Developers are forced to learn infrastructure intricacies instead of focusing on shipping application features.

- Operational Overhead: Engineering organizations are required to build and fund dedicated platform teams just to maintain the infrastructure.

The power of Kubernetes comes at the direct cost of the simplicity that originally made platforms like Heroku so productive.

Qovery: The Convergence Layer

Qovery bridges this gap by providing a PaaS-like experience on top of managed Kubernetes services. Developers get a workflow similar to Heroku's, platform engineers get the control they need from Kubernetes, and no one has to write YAML to deploy an application.

How It Works

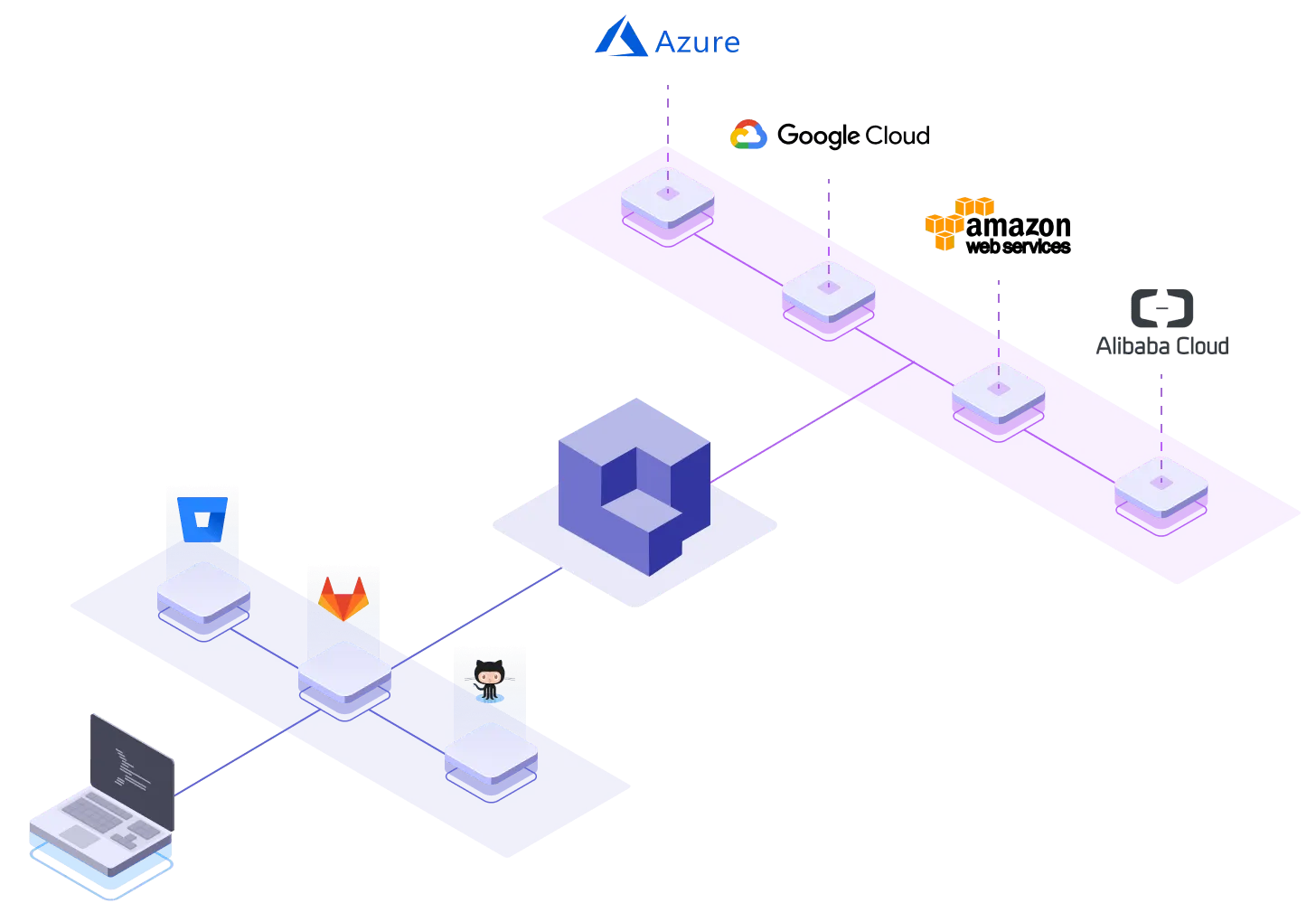

Qovery acts as an intelligent abstraction layer, connecting directly to your cloud account to provision managed Kubernetes clusters on EKS (AWS), AKS (Azure), or GKE (Google Cloud). It provides a simplified interface for deploying applications, effectively bridging the gap between complexity and usability.

Here is how the pieces fit together:

- Seamless Cloud Integration: Qovery connects to your existing cloud provider and automatically provisions and manages the underlying Kubernetes clusters. You get the power of standard managed Kubernetes without the manual setup.

- Developer-Centric Interaction: Developers interact with the platform using tools they already know:

- Git Integration: Pushing code triggers automatic builds and deployments.

- CLI & Web UI: Manage settings and observe deployments easily.

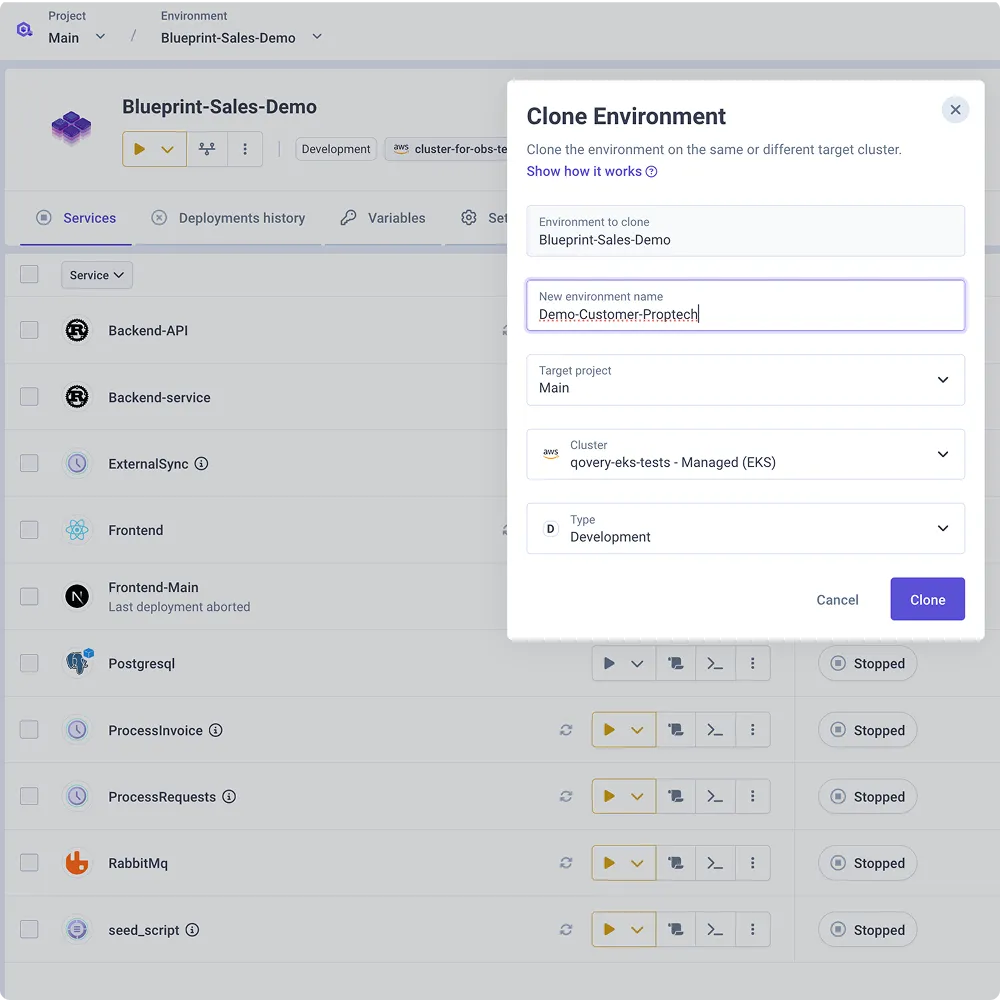

- Environment Management: Create new environments (e.g., staging, dev) via simple UI selections rather than complex infrastructure provisioning.

- Automated "Heavy Lifting": Behind the scenes, the platform handles the tasks that typically consume a platform team's time:

- Manifest Generation: Automatically creates all necessary Kubernetes manifests.

- Pipeline Configuration: Sets up and maintains CI/CD pipelines.

- IaC Management: Manages the Infrastructure as Code to ensure consistency and reliability.

Key Takeaway: Qovery handles the complexity of Kubernetes, allowing your team to deploy like experts without requiring dedicated platform engineering effort.

Zero YAML Required

The core promise is to let engineers deploy applications to production Kubernetes without writing configuration files.

Qovery builds the application and generates the appropriate container configuration automatically. Environment variables are set through the web interface and then converted to ConfigMaps and Secrets. Resource allocations are set through simple controls rather than YAML resource blocks.
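As an illustration of what converting environment variables into ConfigMaps and Secrets can look like, the sketch below splits a flat set of variables into the two standard Kubernetes objects. It is not Qovery's implementation: the function name, the `-config` and `-secrets` naming scheme, and the way sensitive keys are flagged are all assumptions made for this example. The one fixed rule it relies on is that values in a Secret's `data` field are base64-encoded.

```python
import base64


def split_env(
    app_name: str, variables: dict[str, str], secret_keys: set[str]
) -> tuple[dict, dict]:
    """Split flat environment variables into a ConfigMap and a Secret manifest."""
    # Non-sensitive values go into the ConfigMap as plain strings.
    plain = {k: v for k, v in variables.items() if k not in secret_keys}

    # Sensitive values go into the Secret, base64-encoded as Kubernetes requires.
    sensitive = {
        k: base64.b64encode(v.encode("utf-8")).decode("ascii")
        for k, v in variables.items()
        if k in secret_keys
    }

    config_map = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": f"{app_name}-config"},  # hypothetical naming scheme
        "data": plain,
    }
    secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": f"{app_name}-secrets"},  # hypothetical naming scheme
        "type": "Opaque",
        "data": sensitive,
    }
    return config_map, secret


if __name__ == "__main__":
    cm, sec = split_env(
        "hello-web",
        {"LOG_LEVEL": "info", "DATABASE_PASSWORD": "s3cret"},
        secret_keys={"DATABASE_PASSWORD"},
    )
    print(cm["data"])         # plain-text settings
    print(list(sec["data"]))  # only the key names; values are base64-encoded
```

Note that base64 is an encoding, not encryption, so access to Secrets still has to be restricted at the cluster level.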

Databases, caches, and message queues are provisioned through the same interface. Select PostgreSQL, specify a size, and Qovery handles the underlying infrastructure, with no Terraform to write.

This abstraction doesn't prevent customization when teams need it. Teams that require specific configurations can override the defaults, but the common case requires no configuration at all.

The Core Value Proposition: Abstraction and Control

Qovery delivers value to both developers seeking simplicity and platform engineers requiring control.

Developer Workflow

The developer experience mirrors what made Heroku a great choice for productivity. Connect a repository, select a branch, and deploy. The platform handles building, testing, and releasing without manual intervention.

Git push triggers deployment automatically, keeping developers in their normal workflow. Qovery detects updates and rolls out new versions. No separate deployment step interrupts the development flow.

Ephemeral environments accelerate testing and review, as each pull request receives an isolated, full-stack environment automatically. Reviewers test actual running applications rather than reviewing code in isolation. Environments clean up automatically when pull requests close, keeping resource usage lean.
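The per-pull-request lifecycle described above can be sketched in a few lines. The example below is a hypothetical illustration, not Qovery's internal logic: it derives an environment name that is valid as a Kubernetes namespace (a lowercase DNS label of at most 63 characters) and maps pull request events to an action. The event names follow the `action` values used by GitHub pull request webhooks; the `-pr-<number>` naming scheme and the `deploy`/`destroy`/`ignore` actions are assumptions.

```python
import re

MAX_LABEL_LENGTH = 63  # Kubernetes namespace names must be valid DNS labels


def preview_namespace(repo: str, pr_number: int) -> str:
    """Build a DNS-label-safe environment name such as 'acme-web-app-pr-42'."""
    suffix = f"-pr-{pr_number}"
    # Lowercase the repo name and collapse anything non-alphanumeric into hyphens.
    slug = re.sub(r"[^a-z0-9]+", "-", repo.lower()).strip("-")
    # Leave room for the suffix and avoid a trailing hyphen after truncation.
    slug = slug[: MAX_LABEL_LENGTH - len(suffix)].rstrip("-")
    if not slug:
        slug = "env"  # fallback so the name still starts with an alphanumeric
    return f"{slug}{suffix}"


def action_for_event(event: str) -> str:
    """Map a pull request event to what should happen to its environment."""
    if event in {"opened", "reopened", "synchronize"}:
        return "deploy"   # create the environment or roll out the new commit
    if event == "closed":
        return "destroy"  # clean up so resource usage stays lean
    return "ignore"
```

Under these assumptions, `preview_namespace("Acme/Web_App", 42)` yields `acme-web-app-pr-42`, and a `closed` event maps to `destroy`.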

Platform Engineer Control

Unlike traditional PaaS platforms, Qovery runs entirely within your cloud account: EKS clusters are provisioned in your AWS account, GKE clusters in your GCP project, and AKS clusters in your Azure subscription.

Engineering organizations preserve full control over the deployed infrastructure, as operators can access underlying Kubernetes clusters directly. Custom networking, security policies, and compliance controls are implemented through standard cloud provider tools. Data stays within organizational boundaries, as no application traffic routes through third-party infrastructure.

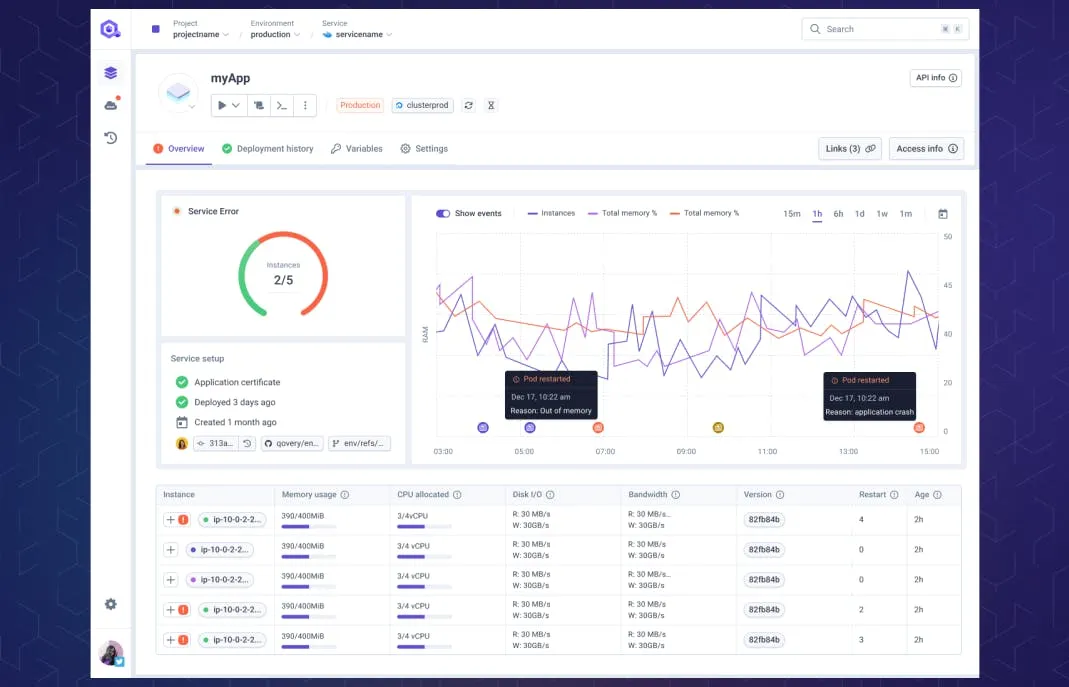

Observability is available out of the box. Logs and metrics are visible in the web interface and can also be used with third-party observability tools.

The Best of Both Worlds

This combination addresses the historic trade-off directly. Developers get the simplicity they need to ship quickly, while platform engineers get the control they need. The organization gets the cost efficiency and flexibility of Kubernetes without the complexity tax.

Conclusion

The choice between deployment simplicity and infrastructure control was never a real requirement. It was a limitation of available tooling that forced organizations to make compromises.

Qovery demonstrates that modern platforms can deliver both. The developer experience rivals Heroku at its best: Git push deployment, instant environments, and zero configuration for common cases. The infrastructure foundation provides everything Kubernetes offers: scalability, customization, cost efficiency, and portability.

Qovery provisions and manages infrastructure in your account, generating the YAML, Terraform, and CI/CD configurations that would otherwise require platform engineering effort. Developers never see this complexity, and platform engineers retain full access when required.
