Kubernetes Multi-Cluster: Why and When To Use Them

Let’s start with what multi-cluster Kubernetes is.

What is Multi-Cluster Kubernetes

In multi-cluster Kubernetes, you run more than one cluster for your application. The clusters can be replicas of each other, and you can deploy multiple copies of your application across them. To achieve high availability, each cluster runs on separate hosts in a separate data center, so that an infrastructure failure or a cluster breakdown in one location does not affect the other clusters. You can run multiple clusters on the same hosts and in the same data center to save cost, but doing so gives up most of the high-availability benefits.

Multi-Cluster Kubernetes Architecture

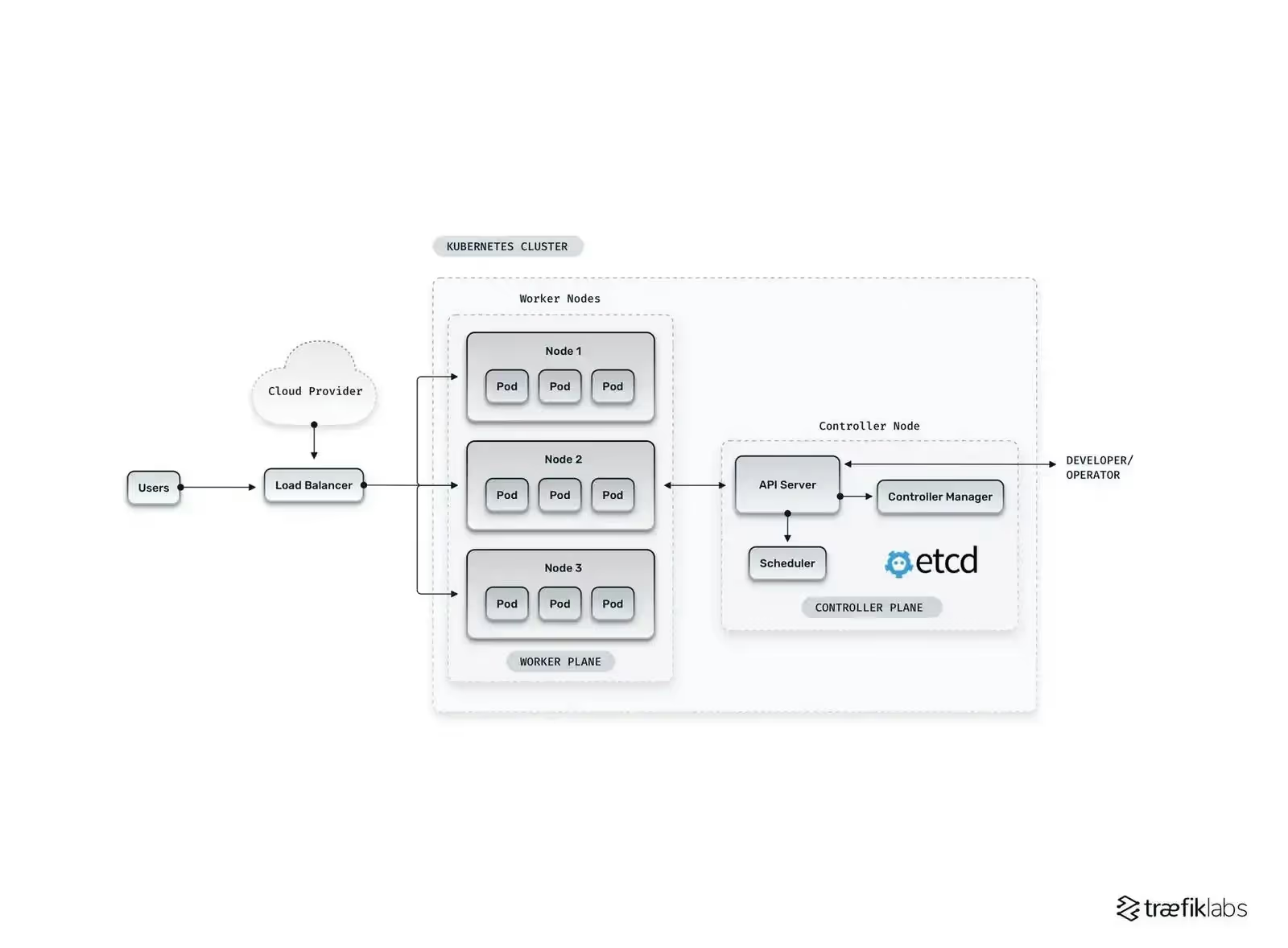

Let’s compare the architecture of a single cluster and a multi-cluster. Here is what a single cluster looks like:

As you can see, all the traffic is handled by one cluster, behind a single load balancer provided by the cloud provider. Let’s look at the multi-cluster architecture below:

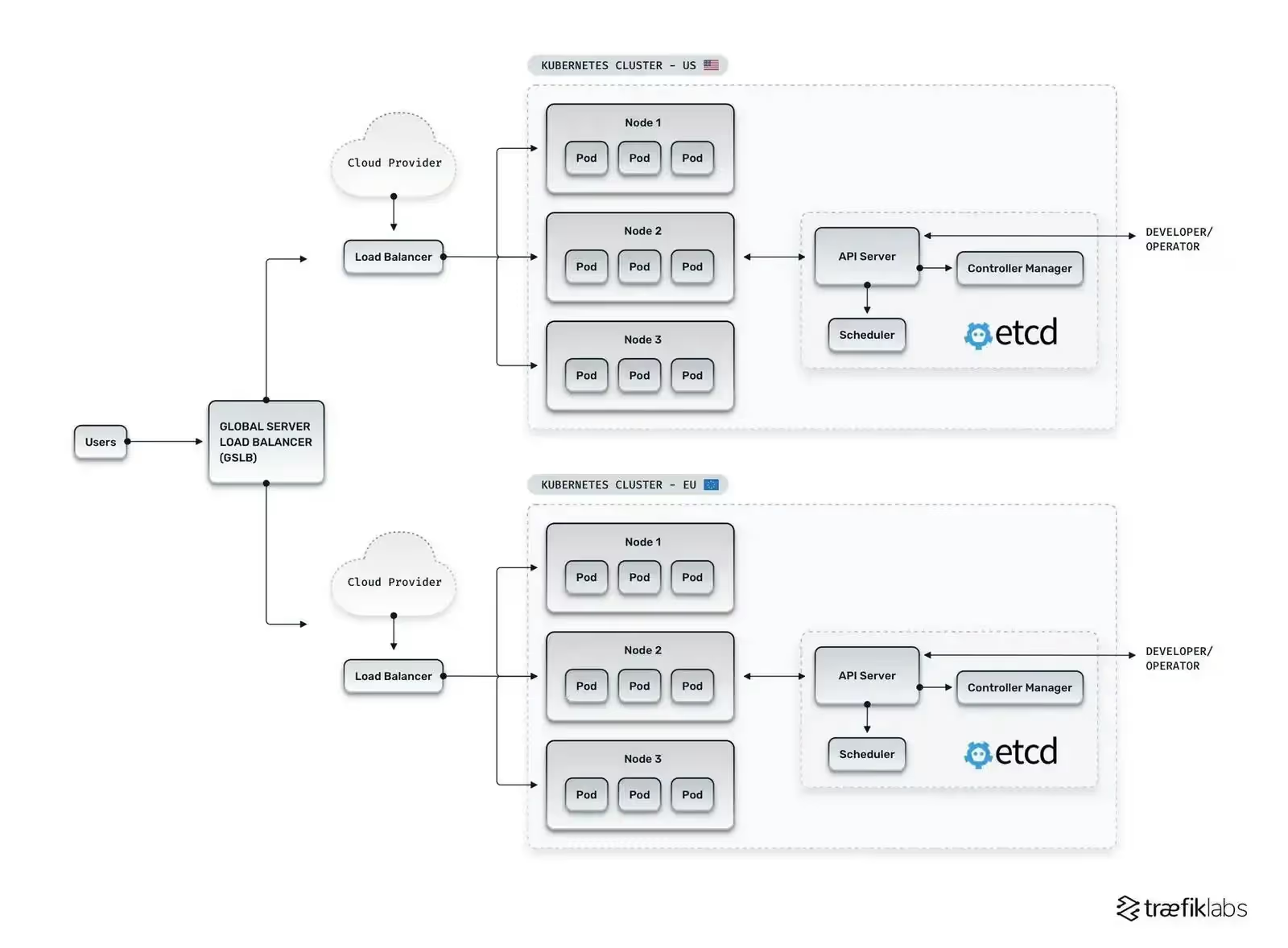

There are a few things to notice in the architecture diagram above. The two clusters are in separate geographical areas and different data centers, which ensures high availability. Both clusters have the same component structure: three worker nodes and one controller node. The global load balancer distributes the load across the different regions. This layout also helps you meet regulatory or compliance needs: if a customer wants a cluster hosted on dedicated hosts so that the underlying infrastructure is not shared with other customers, you can provide one. The same applies when regulations require placing a cluster in the same region as the client.
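To make the global load balancer's role more concrete, here is a minimal Python sketch of region-aware routing with failover. It assumes a hypothetical `Cluster` record with a health flag and a hypothetical `REGION_PREFERENCE` table; a real global load balancer (DNS-based or anycast) does this with its own health checks and configuration rather than with application code like this.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    region: str
    healthy: bool = True


# Hypothetical preference table: for each client region, the cluster
# regions to try, nearest first.
REGION_PREFERENCE = {
    "eu": ["eu-west", "us-east"],
    "us": ["us-east", "eu-west"],
}


def pick_cluster(client_region: str, clusters: list[Cluster]) -> Cluster:
    """Return the first healthy cluster in the client's preference order."""
    by_region = {c.region: c for c in clusters}
    for region in REGION_PREFERENCE[client_region]:
        cluster = by_region.get(region)
        if cluster is not None and cluster.healthy:
            return cluster
    raise RuntimeError(f"no healthy cluster for client region {client_region!r}")


clusters = [Cluster("cluster-a", "eu-west"), Cluster("cluster-b", "us-east")]
print(pick_cluster("eu", clusters).name)  # cluster-a (nearest)
clusters[0].healthy = False                # simulate an outage in eu-west
print(pick_cluster("eu", clusters).name)  # cluster-b (failover)
```

Because both clusters have the same structure, either one can absorb the other's traffic during an outage, which is what makes this simple failover rule possible.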

Why Use Multi-Cluster Kubernetes

Multi-cluster Kubernetes provides several powerful benefits, such as:

Flexibility

Multi-cluster Kubernetes gives you a lot of flexibility in how you use clusters to your business advantage. One example is using one cluster per environment: one cluster for production, one for staging, and one for QA. For staging and QA, you can use less expensive infrastructure to save costs. You can also place the production cluster close to your customers while keeping QA and staging near your development teams.

Similarly, you can reserve one cluster for testing new ideas and complex R&D features. A small cluster can be dedicated to testing new Kubernetes versions in isolation, so that an upgrade does not break anything when it reaches production.

Having more than one cluster also gives you finer control over the design and configuration of each Kubernetes setup.

Availability, Scalability, and Resource Utilization

With multi-cluster, you can replicate your application across different data centers in different regions, increasing availability.
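As a rough worked example of why replication helps, the sketch below computes the probability that at least one replica cluster is up, using 1 - (1 - a)^n for n clusters that are each available with probability a. The key assumption is that cluster failures are independent; in practice, shared dependencies such as the global load balancer, or a bad configuration rolled out everywhere, make failures correlated, so treat the result as an optimistic upper bound.

```python
def combined_availability(per_cluster: float, clusters: int) -> float:
    """Probability that at least one of `clusters` replicas is up,
    assuming each is up with probability `per_cluster`, independently."""
    if not 0.0 <= per_cluster <= 1.0:
        raise ValueError("per_cluster must be between 0 and 1")
    if clusters < 1:
        raise ValueError("need at least one cluster")
    # All clusters are down with probability (1 - a)^n; otherwise at least one is up.
    return 1.0 - (1.0 - per_cluster) ** clusters


for n in (1, 2, 3):
    print(n, round(combined_availability(0.99, n), 6))
```

With a = 0.99, one cluster gives 0.99, two give 0.9999, and three give 0.999999, under the independence assumption above.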

Multi-cluster also helps with scalability. The load balancer can send traffic to a particular cluster based on the URL or type of request, and when a cluster receives too much traffic, it can be scaled out to handle the load. This lets you meet diverse performance needs while using your resources efficiently.
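Routing by URL can be sketched as a longest-prefix match from request paths to clusters. The routing table and cluster names below are hypothetical, and the example only illustrates the matching logic; in a real setup this rule would live in your load balancer or ingress configuration.

```python
# Hypothetical routing table: URL path prefix -> cluster that serves it.
ROUTES = {
    "/api/payments": "payments-cluster",
    "/api": "api-cluster",
    "/": "web-cluster",
}


def prefix_matches(prefix: str, path: str) -> bool:
    """True if `path` equals `prefix` or continues it at a '/' boundary."""
    return path == prefix or path.startswith(prefix.rstrip("/") + "/")


def route(path: str) -> str:
    """Return the cluster for the longest route prefix that matches `path`."""
    candidates = [p for p in ROUTES if prefix_matches(p, path)]
    if not candidates:
        raise LookupError(f"no route for {path!r}")
    return ROUTES[max(candidates, key=len)]


print(route("/api/payments/42"))  # payments-cluster
print(route("/api/users"))        # api-cluster
print(route("/apiary"))           # web-cluster (not a match for "/api")
```

Matching on `/` boundaries keeps a prefix like `/api` from accidentally capturing unrelated paths such as `/apiary`.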

Workload Isolation

While you can isolate workloads within a single cluster using namespaces, that isolation is weak because of the shared hardware and the peculiarities of the Kubernetes security model. Multi-cluster addresses this problem with a much stronger isolation boundary. If one cluster breaks, the blast radius is small: the impact is limited to the workloads running on that cluster, and other clusters are not affected. Likewise, a cluster failure or a configuration change affects only one cluster. This isolation becomes even more important if you are building a microservices-based architecture and want to deploy some services in one cluster and the rest in others. Multi-cluster Kubernetes is very well suited to getting the most out of a microservices architecture.

Security and Compliance

Managing security and user-based policies becomes difficult in a single-cluster environment. Using one cluster per application component (front end, back end, etc.) or one cluster per deployment environment lets you apply tighter security controls on a per-cluster basis.

Multi-cluster also helps you meet regulatory and compliance needs. One example is GDPR, which restricts transferring European customers’ personal data outside the EU; a common way to comply is to keep that data physically inside the EU. You can run one cluster in an EU region for EU users and another cluster in a global region for all other customers. Another example is a customer who needs dedicated hardware with complete physical isolation of infrastructure and data. With a multi-cluster solution, you can target specific clusters to meet these different compliance needs.
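Pinning EU users to the EU cluster can be sketched as a routing rule that maps a user's country to a residency zone and only considers clusters allowed for that zone. The country list is deliberately abbreviated, and the zone names, cluster names, and policy are hypothetical. The design choice worth noting is that EU traffic fails closed: if the EU cluster is down, the request is rejected instead of spilling into a cluster outside the EU.

```python
# Abbreviated, hypothetical list of EU country codes (a real list has all member states).
EU_COUNTRIES = {"DE", "FR", "IE", "IT", "NL", "ES"}

# Hypothetical policy: which clusters may serve each residency zone, in order of preference.
ALLOWED_CLUSTERS = {
    "eu": ["eu-cluster"],                        # EU users are only served from the EU cluster
    "global": ["global-cluster", "eu-cluster"],  # in this policy, others may fail over to the EU
}


def residency_zone(country_code: str) -> str:
    """Classify a user by country code into a residency zone."""
    return "eu" if country_code.upper() in EU_COUNTRIES else "global"


def select_cluster(country_code: str, healthy: set[str]) -> str:
    """Pick the first healthy cluster allowed for the user's residency zone."""
    zone = residency_zone(country_code)
    for name in ALLOWED_CLUSTERS[zone]:
        if name in healthy:
            return name
    # Fail closed: no allowed cluster is available, so reject instead of rerouting.
    raise RuntimeError(f"no compliant cluster available for zone {zone!r}")


print(select_cluster("de", {"eu-cluster", "global-cluster"}))  # eu-cluster
print(select_cluster("US", {"eu-cluster"}))                    # eu-cluster (failover)
```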

Challenges in Multi-cluster Kubernetes

Setting up and managing multi-cluster Kubernetes is not easy. Here are some of the challenges involved:

Complex Configuration

Setting up Kubernetes is complex even for a single cluster, and multi-cluster adds to that complexity. You have to configure every cluster properly and separately. Unlike a single-cluster setup, which has only one API server, a multi-cluster setup has one API server per cluster, and you must set up and manage access to each of them.
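With `kubectl`, access to several API servers is usually organized as multiple contexts in a kubeconfig file, each pairing a cluster's API server with a user. The sketch below models a kubeconfig as a Python dict (real files are YAML, and credentials are omitted here) and resolves every context to the API server and user it would use. The cluster names, users, and URLs are hypothetical.

```python
# A kubeconfig with two clusters, written as a Python dict to keep the example
# self-contained. Real kubeconfig files are YAML; names and URLs are hypothetical,
# and user credentials are omitted.
KUBECONFIG = {
    "apiVersion": "v1",
    "kind": "Config",
    "clusters": [
        {"name": "eu-prod", "cluster": {"server": "https://eu-prod.example.com:6443"}},
        {"name": "us-prod", "cluster": {"server": "https://us-prod.example.com:6443"}},
    ],
    "users": [
        {"name": "eu-admin", "user": {}},
        {"name": "us-admin", "user": {}},
    ],
    "contexts": [
        {"name": "eu", "context": {"cluster": "eu-prod", "user": "eu-admin"}},
        {"name": "us", "context": {"cluster": "us-prod", "user": "us-admin"}},
    ],
    "current-context": "eu",
}


def api_servers(kubeconfig: dict) -> dict[str, tuple[str, str]]:
    """Map each context name to the (API server URL, user name) it points at."""
    servers = {c["name"]: c["cluster"]["server"] for c in kubeconfig["clusters"]}
    result = {}
    for ctx in kubeconfig["contexts"]:
        cluster_name = ctx["context"]["cluster"]
        result[ctx["name"]] = (servers[cluster_name], ctx["context"]["user"])
    return result


for name, (server, user) in api_servers(KUBECONFIG).items():
    marker = "*" if name == KUBECONFIG["current-context"] else " "
    print(f"{marker} {name}: {server} as {user}")
```

With `kubectl` itself, you switch between such contexts with `kubectl config use-context <name>`, or target one per command with the `--context` flag.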

Inter-cluster communication is another area you need to handle in multi-cluster Kubernetes. You have to manage your clusters’ IP address ranges, routing rules, and DNS settings very carefully.
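One concrete piece of that IP planning is making sure clusters that need to route to each other do not use overlapping Pod or Service CIDR ranges, since overlapping ranges make it ambiguous where a packet should go. The sketch below uses Python's standard `ipaddress` module to report every overlapping pair; the cluster names and CIDRs are hypothetical.

```python
import ipaddress
from itertools import combinations

# Hypothetical Pod and Service CIDRs for each cluster.
CLUSTER_CIDRS = {
    "eu-prod": ["10.0.0.0/16", "10.96.0.0/16"],
    "us-prod": ["10.1.0.0/16", "10.96.0.0/16"],
}


def overlapping_ranges(cidrs_by_cluster: dict[str, list[str]]) -> list[tuple[str, str, str, str]]:
    """Return (cluster_a, cidr_a, cluster_b, cidr_b) for every overlapping pair."""
    nets = {
        name: [ipaddress.ip_network(cidr) for cidr in cidrs]
        for name, cidrs in cidrs_by_cluster.items()
    }
    conflicts = []
    # Compare every pair of distinct clusters, and every range within each pair.
    for a, b in combinations(nets, 2):
        for net_a in nets[a]:
            for net_b in nets[b]:
                if net_a.overlaps(net_b):
                    conflicts.append((a, str(net_a), b, str(net_b)))
    return conflicts


print(overlapping_ranges(CLUSTER_CIDRS))
# [('eu-prod', '10.96.0.0/16', 'us-prod', '10.96.0.0/16')]
```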

Security

A multi-cluster environment exposes a larger surface area for threats and attacks. You have to manage security certificates across all clusters, which requires a proper certificate management system covering every cluster in every data center.
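A small part of that certificate management is tracking expiry dates across clusters. The sketch below flags certificates that expire within a given window, soonest first. The inventory and dates are hypothetical; in practice you would collect them from the certificates themselves (for kubeadm-based clusters, `kubeadm certs check-expiration` reports them).

```python
from datetime import datetime, timedelta, timezone

# Hypothetical inventory: (cluster, certificate) -> expiry time in UTC.
CERT_EXPIRY = {
    ("eu-prod", "apiserver"): datetime(2025, 3, 1, tzinfo=timezone.utc),
    ("us-prod", "apiserver"): datetime(2025, 9, 1, tzinfo=timezone.utc),
}


def expiring_soon(inventory: dict, now: datetime, within: timedelta = timedelta(days=30)) -> list:
    """List (cluster, cert) keys expiring within `within` of `now`, soonest first.
    Already-expired certificates are included too."""
    deadline = now + within
    due = [(expiry, key) for key, expiry in inventory.items() if expiry <= deadline]
    return [key for _, key in sorted(due)]


now = datetime(2025, 2, 15, tzinfo=timezone.utc)
print(expiring_soon(CERT_EXPIRY, now))  # [('eu-prod', 'apiserver')]
```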

Managing access rules for different users on each cluster is also time-consuming and challenging, and it creates a lot of work for security and admin personnel.

API calls to each cluster must be secured as well. If a new cluster is provisioned to handle additional load, the proper user access rights must be set up at the same time.
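One way to keep access rights in step with new clusters is to define the access policy once and generate the same RBAC bindings for every cluster as part of provisioning. The sketch below builds `ClusterRoleBinding` manifests as Python dicts that bind groups to the built-in Kubernetes ClusterRoles `cluster-admin`, `edit`, and `view`. The group names, the `ACCESS_POLICY` table, and the `provision_cluster` helper are hypothetical, and the step that actually creates the cluster is left out.

```python
RBAC_GROUP = "rbac.authorization.k8s.io"

# Hypothetical org-wide policy: group name -> built-in ClusterRole to grant.
ACCESS_POLICY = {
    "platform-admins": "cluster-admin",
    "developers": "edit",
    "auditors": "view",
}


def role_bindings(policy: dict[str, str]) -> list[dict]:
    """Build one ClusterRoleBinding manifest (as a dict) per group in the policy."""
    return [
        {
            "apiVersion": f"{RBAC_GROUP}/v1",
            "kind": "ClusterRoleBinding",
            "metadata": {"name": f"{group}-{role}"},
            "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_GROUP}],
            "roleRef": {"kind": "ClusterRole", "name": role, "apiGroup": RBAC_GROUP},
        }
        for group, role in sorted(policy.items())
    ]


def provision_cluster(name: str) -> dict:
    """Hypothetical provisioning step: a cluster record always ships with its bindings."""
    # Creating the cluster itself is out of scope; only the access setup is shown.
    return {"name": name, "rbac": role_bindings(ACCESS_POLICY)}


new_cluster = provision_cluster("eu-prod-2")
for binding in new_cluster["rbac"]:
    print(binding["metadata"]["name"])
```

The resulting manifests can be serialized to JSON or YAML and applied with `kubectl apply -f`. Binding `edit` through a `ClusterRoleBinding` grants it in every namespace, so a real policy may prefer namespaced `RoleBinding`s for some groups.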

Cost

More clusters mean more nodes (hosts), which adds to your infrastructure cost. To get all the benefits of a multi-cluster environment, including high availability and fault tolerance, you have to run the clusters in different data centers on their own infrastructure, which increases your cost further.

A multi-cluster solution also multiplies the supporting components that come with every cluster, such as load balancers, monitoring, and logging resources. This adds operational and administrative overhead.

Single-Cluster vs. Multi-Cluster: How to decide

You should go for a single-cluster if:

- You prefer cost savings over high availability and fault tolerance

- You are short on skilled DevOps resources to manage multi-cluster

- There is no compliance or regulatory requirement to use separate infrastructure for a particular client

You should go for multi-cluster if:

- Your application requires high availability, and you want as close to zero downtime as possible

- Your team has enough technical expertise to manage the complex setup and maintenance of multiple clusters

- You have a compliance or regulatory need to place your clusters in a specific geographical region
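The two checklists above can be encoded as a rough decision helper. This is one possible reading of the criteria, under the assumption that a compliance or placement requirement is decisive on its own, while a high-availability requirement points to multi-cluster only if the team can operate it. The `Requirements` field names are hypothetical labels for the listed criteria.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    # Hypothetical field names for the criteria in the checklists above.
    needs_high_availability: bool
    team_can_run_multi_cluster: bool
    has_compliance_placement_need: bool


def recommend(req: Requirements) -> str:
    """One possible reading of the checklists, not a definitive rule."""
    if req.has_compliance_placement_need:
        # A regulatory placement or isolation requirement is treated as decisive here.
        return "multi-cluster"
    if req.needs_high_availability and req.team_can_run_multi_cluster:
        return "multi-cluster"
    # Otherwise, cost savings and simpler operations favor a single cluster.
    return "single-cluster"


print(recommend(Requirements(
    needs_high_availability=True,
    team_can_run_multi_cluster=False,
    has_compliance_placement_need=False,
)))  # single-cluster
```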

Wrapping up

In this article, we discussed multi-cluster Kubernetes in detail. We went through the benefits and challenges of multi-cluster and compared the use cases for single-cluster and multi-cluster setups. If you want to set up and manage multiple clusters but are hesitant because of the complexity, a modern solution like Qovery can help. Whether you want multi-cluster for application resilience, for compliance, or to keep clusters close to your users, Qovery provides many ways to simplify multi-cluster Kubernetes. You can provision as many clusters as you want with just a few lines of code, and Qovery handles the technical complexity while you focus on your application’s success.

If you want to learn how hundreds of organizations leverage Qovery for multi-cluster Kubernetes management, you can watch our webinar with Pierre Olive, CTO of Spayr, a growing fintech company (also summarized in this article).
