The complete guide to migrating from EKS to ECS

Key points:

- The Complexity Trap: While EKS offers unmatched control and ecosystem support, "Day 2 operations" often create an unsustainable operational burden for smaller teams.

- The Hidden Cost of "Downgrading": Moving to ECS simplifies management but introduces strategic risks, including AWS vendor lock-in, the loss of the Helm/GitOps ecosystem, and non-transferable engineering skills.

- The Middle Path with Qovery: Migration isn't the only solution; abstraction is. Qovery allows teams to keep their EKS infrastructure while automating the "toil," providing the developer experience of ECS without the infrastructure limitations.

Many teams adopt EKS expecting a managed control plane to solve their orchestration headaches. What they get instead is a "Day 2" nightmare.

Between planning version upgrades, wrestling with CNI plugins, and constant IAM adjustments, the operational burden quickly eclipses the benefits of Kubernetes. It’s no wonder moving "back" to ECS is becoming a standard move for teams drowning in complexity. But is migrating to a proprietary AWS service a step forward, or just a different kind of debt?

This guide breaks down the technical reality of an EKS to ECS migration and explores a third path that preserves your Kubernetes power without the maintenance tax.

EKS vs. ECS: The Trade-off Analysis

While both services run containers on AWS, the similarities mostly end there. Understanding the fundamental differences clarifies what migration actually means.

1. Control vs. Convenience

EKS provides Kubernetes, an open standard with extensive ecosystem support. The Cloud Native Computing Foundation maintains the project, so tooling from the broader community integrates without vendor dependency.

ECS provides AWS-native container orchestration, with deep integration within AWS services that simplifies common patterns. The learning curve here is gentler, with a lower operational burden as AWS handles more of the infrastructure.

The trade-off here is control for convenience. EKS lets teams customize nearly everything but requires them to manage those customizations, while ECS removes customization options but eliminates the associated management burden.

2. The Cost of Simplicity

Moving to ECS means accepting specific limitations that may be impactful as applications evolve.

Vendor lock-in becomes a concrete issue. ECS Task Definitions use AWS-specific JSON schemas and migrating away means rewriting deployment configurations entirely. Skills developed on ECS cannot be transferred outside of AWS.

The Helm ecosystem disappears, as thousands of pre-packaged applications available as Helm charts have no ECS equivalent. Teams must build integrations that Kubernetes users install with single commands.

GitOps tooling loses compatibility. ArgoCD, Flux, and similar tools target Kubernetes natively. ECS deployments require different automation approaches, typically AWS-specific tooling like CodePipeline or custom scripts.

Networking flexibility decreases. Kubernetes network policies, service meshes, and custom CNI plugins enable patterns that ECS cannot replicate. Teams with sophisticated networking requirements may find ECS limiting.

Scheduling flexibility also reduces. Kubernetes offers fine-grained control over pod placement through node selectors, affinities, and taints. ECS placement strategies exist but provide fewer options for complex scheduling requirements.

The Migration Guide: How to Move from EKS to ECS

For teams committed to migration, the process involves translating Kubernetes concepts to ECS equivalents across several dimensions.

1. Refactoring Manifests

Kubernetes Deployments need to be migrated to ECS Task Definitions and Services.

A Kubernetes Deployment specifies container images, resource requests, environment variables, and replica counts in YAML. An ECS Task Definition captures similar information in JSON format with different structure and terminology.

```
{
  "family": "web-app",
  "containerDefinitions": [
    {
      "name": "web-app",
      "image": "registry.example.com/web-app:v1.2.3",
      "memory": 256,
      "cpu": 256,
      "portMappings": [
        {
          "containerPort": 8080,
          "protocol": "tcp"
        }
      ],
      "environment": [
        {
          "name": "DATABASE_URL",
          "value": "postgres://..."
        }
      ]
    }
  ]
}
```

Each Kubernetes manifest requires manual conversion. Teams with dozens of services face significant translation work. ConfigMaps become parameter store entries or embedded environment variables. Secrets migrate to AWS Secrets Manager with corresponding task definition references.
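To give a feel for the translation work, here is a minimal Python sketch that maps a Deployment (already parsed into a dict) onto the task definition shape shown above. The helper names and `EXAMPLE_DEPLOYMENT` are hypothetical. It assumes memory quantities use only `Mi` or `Gi` suffixes and skips any environment entry that uses `valueFrom`, since those would need a Secrets Manager or Parameter Store reference instead.

```python
import re


def cpu_to_ecs_units(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity ("250m", "0.5", "1") to ECS CPU units (1024 per vCPU)."""
    if quantity.endswith("m"):
        millicores = float(quantity[:-1])
    else:
        millicores = float(quantity) * 1000
    return round(millicores * 1024 / 1000)


def memory_to_mib(quantity: str) -> int:
    """Convert a Kubernetes memory quantity with an Mi or Gi suffix to MiB."""
    match = re.fullmatch(r"(\d+)(Mi|Gi)", quantity)
    if match is None:
        raise ValueError(f"unsupported memory quantity: {quantity}")
    value, unit = int(match.group(1)), match.group(2)
    return value * 1024 if unit == "Gi" else value


def deployment_to_task_definition(deployment: dict) -> dict:
    """Build a partial ECS task definition from a Kubernetes Deployment dict."""
    containers = []
    for c in deployment["spec"]["template"]["spec"]["containers"]:
        resources = c.get("resources", {})
        # ECS "memory" is a hard limit, so prefer Kubernetes limits over requests.
        sizing = {**resources.get("requests", {}), **resources.get("limits", {})}
        definition = {
            "name": c["name"],
            "image": c["image"],
            "portMappings": [
                {"containerPort": p["containerPort"],
                 "protocol": p.get("protocol", "TCP").lower()}
                for p in c.get("ports", [])
            ],
            # Entries using valueFrom (Secrets, ConfigMaps) are skipped on purpose.
            "environment": [
                {"name": e["name"], "value": e["value"]}
                for e in c.get("env", []) if "value" in e
            ],
        }
        if "cpu" in sizing:
            definition["cpu"] = cpu_to_ecs_units(sizing["cpu"])
        if "memory" in sizing:
            definition["memory"] = memory_to_mib(sizing["memory"])
        containers.append(definition)
    return {"family": deployment["metadata"]["name"], "containerDefinitions": containers}


# Hypothetical input used for illustration only.
EXAMPLE_DEPLOYMENT = {
    "metadata": {"name": "web-app"},
    "spec": {"replicas": 3, "template": {"spec": {"containers": [{
        "name": "web-app",
        "image": "registry.example.com/web-app:v1.2.3",
        "ports": [{"containerPort": 8080}],
        "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
        "env": [
            {"name": "LOG_LEVEL", "value": "info"},
            {"name": "DB_PASSWORD",
             "valueFrom": {"secretKeyRef": {"name": "db", "key": "password"}}},
        ],
    }]}}},
}
```

The replica count does not appear in the output because it belongs on the ECS Service (its desired count), not on the task definition.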

Health checks require attention during conversion. Kubernetes liveness and readiness probes translate to ECS health check configurations, but the syntax and behavior differ. Teams should verify health check behavior after migration to avoid unexpected container restarts or routing issues.
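As a rough illustration of how the settings differ, the sketch below converts an HTTP liveness probe into an ECS container `healthCheck` block. The function name is hypothetical, and it assumes the probe uses a numeric port and that `curl` is available inside the container image. ECS accepts narrower ranges than Kubernetes for some fields, so values are clamped rather than copied.

```python
def clamp(value: int, low: int, high: int) -> int:
    """Keep value inside the inclusive range [low, high]."""
    return max(low, min(high, value))


def probe_to_ecs_health_check(probe: dict) -> dict:
    """Translate a Kubernetes httpGet probe into an ECS container healthCheck.

    Assumes a numeric port and that curl exists in the image.
    """
    http = probe["httpGet"]
    url = f"http://localhost:{http['port']}{http.get('path', '/')}"
    return {
        "command": ["CMD-SHELL", f"curl -f {url} || exit 1"],
        # Kubernetes defaults: period 10s, timeout 1s, failureThreshold 3, delay 0s.
        # ECS ranges: interval 5-300s, timeout 2-60s, retries 1-10, startPeriod 0-300s.
        "interval": clamp(probe.get("periodSeconds", 10), 5, 300),
        "timeout": clamp(probe.get("timeoutSeconds", 1), 2, 60),
        "retries": clamp(probe.get("failureThreshold", 3), 1, 10),
        "startPeriod": clamp(probe.get("initialDelaySeconds", 0), 0, 300),
    }


# Hypothetical probe used for illustration only.
EXAMPLE_PROBE = {
    "httpGet": {"path": "/healthz", "port": 8080},
    "periodSeconds": 3,
    "initialDelaySeconds": 15,
}
```

A probe that ran every 3 seconds ends up running every 5 seconds here, which is one of the behavior differences worth checking after migration. Readiness probes have no container-level counterpart in this sketch; routing readiness is usually handled by the load balancer's target group health check.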

2. Networking Shift

Kubernetes Ingress controllers and Services translate to Application Load Balancers and Target Groups. The concepts map roughly but configuration differs substantially.

Ingress rules defining host-based or path-based routing become ALB listener rules. Each routing configuration requires AWS-native setup through Terraform, CloudFormation, or console configuration.
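The mapping from Ingress rules to listener rules can be sketched in Python as follows. The output mirrors the condition and action structure the ELBv2 API uses for listener rules; the function name, the example Ingress, and the `target_groups` lookup (service name to target group ARN) are assumptions made for illustration.

```python
def ingress_to_listener_rules(ingress: dict, target_groups: dict) -> list:
    """Turn Ingress host/path rules into ALB listener rule definitions.

    target_groups maps a Kubernetes service name to a target group ARN
    (a hypothetical lookup that you would build yourself).
    """
    rules = []
    priority = 1
    for rule in ingress["spec"]["rules"]:
        for path in rule.get("http", {}).get("paths", []):
            conditions = []
            if "host" in rule:
                conditions.append({
                    "Field": "host-header",
                    "HostHeaderConfig": {"Values": [rule["host"]]},
                })
            raw = path.get("path", "/")
            if path.get("pathType") == "Exact":
                patterns = [raw]
            elif raw == "/":
                patterns = ["/*"]
            else:
                # Prefix "/api" should match "/api" and "/api/..." but not "/apix".
                base = raw.rstrip("/")
                patterns = [base, base + "/*"]
            conditions.append({
                "Field": "path-pattern",
                "PathPatternConfig": {"Values": patterns},
            })
            service = path["backend"]["service"]["name"]
            rules.append({
                "Priority": priority,
                "Conditions": conditions,
                "Actions": [{"Type": "forward",
                             "TargetGroupArn": target_groups[service]}],
            })
            priority += 1
    return rules


# Hypothetical Ingress used for illustration only.
EXAMPLE_INGRESS = {"spec": {"rules": [{
    "host": "app.example.com",
    "http": {"paths": [
        {"path": "/api", "pathType": "Prefix",
         "backend": {"service": {"name": "api"}}},
        {"path": "/", "pathType": "Prefix",
         "backend": {"service": {"name": "web"}}},
    ]},
}]}}
```

Priorities follow the order in which paths are listed. An ALB evaluates rules in priority order rather than by longest matching path, so more specific paths should come first in the Ingress before running a conversion like this.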

Service discovery moves away from Kubernetes DNS. Applications using Kubernetes service names for internal communication need updates to use AWS-side addressing instead, such as AWS Cloud Map or ECS Service Connect names, or internal load balancer DNS names. This often requires application code changes, not just configuration updates.
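Where internal endpoints live in configuration rather than code, a small rewrite pass can find them. The sketch below replaces fully qualified Kubernetes service hostnames with names under a private DNS namespace. The namespace `internal.example` and the function name are hypothetical, and bare service names (just `orders`) are not detected.

```python
import re

# Matches <service>.<namespace>.svc and <service>.<namespace>.svc.cluster.local
K8S_SERVICE_HOST = re.compile(
    r"\b([a-z0-9-]+)\.([a-z0-9-]+)\.svc(?:\.cluster\.local)?\b"
)


def rewrite_service_hosts(value: str, private_namespace: str = "internal.example") -> str:
    """Replace Kubernetes service hostnames with <service>.<private_namespace>.

    private_namespace is an assumed private DNS namespace; substitute your own.
    """
    return K8S_SERVICE_HOST.sub(
        lambda m: f"{m.group(1)}.{private_namespace}", value
    )


def rewrite_environment(env: dict, private_namespace: str = "internal.example") -> dict:
    """Apply the hostname rewrite to every value in an environment mapping."""
    return {
        name: rewrite_service_hosts(value, private_namespace)
        for name, value in env.items()
    }
```

For example, `rewrite_service_hosts("http://orders.default.svc.cluster.local:8080/v1")` yields `http://orders.internal.example:8080/v1`. Hostnames assembled at runtime inside application code would still need manual changes.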

Network policies have no direct ECS equivalent. Teams relying on Kubernetes network policies must implement alternatives using security groups, which provide less granular control.
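A simple pod-to-pod policy can be approximated with security group rules, as in the Python sketch below. It produces entries in the `IpPermissions` shape used by the EC2 security group ingress API. It assumes each workload gets its own security group and that `sg_for_app` (a hypothetical lookup from the `app` label to a security group ID) already exists. Anything beyond `podSelector` peers matched on `app` is rejected rather than guessed at.

```python
def network_policy_to_ip_permissions(policy: dict, sg_for_app: dict) -> list:
    """Approximate NetworkPolicy ingress rules as security group permissions.

    Only podSelector peers with an "app" label are supported; namespace
    selectors and ipBlock peers raise an error so they get handled manually.
    """
    permissions = []
    for rule in policy["spec"].get("ingress", []):
        peers = rule.get("from", [])
        if not peers:
            raise ValueError("rule allows all sources; translate it manually")
        sources = []
        for peer in peers:
            app = peer.get("podSelector", {}).get("matchLabels", {}).get("app")
            if app not in sg_for_app:
                raise ValueError(f"no security group known for peer: {peer}")
            sources.append({"GroupId": sg_for_app[app]})
        for port in rule.get("ports", []):
            permissions.append({
                "IpProtocol": port.get("protocol", "TCP").lower(),
                "FromPort": port["port"],
                "ToPort": port["port"],
                "UserIdGroupPairs": list(sources),
            })
    return permissions


# Hypothetical policy used for illustration only.
EXAMPLE_POLICY = {"spec": {
    "podSelector": {"matchLabels": {"app": "api"}},
    "ingress": [{
        "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
        "ports": [{"protocol": "TCP", "port": 8080}],
    }],
}}
```

The loss of granularity shows up in what the sketch refuses to convert: label combinations, namespace selectors, and egress rules have no one-to-one security group counterpart.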

3. IAM Restructuring

IRSA (IAM roles for Service Accounts) configurations translate to ECS Task Roles. The mapping is conceptually similar but implementation differs.

Each Kubernetes service account with an IAM role becomes an ECS task role. The trust relationship changes: instead of trusting the cluster's OIDC provider, as IRSA does, the role trusts the ECS tasks service principal (ecs-tasks.amazonaws.com). Permission policies can often transfer directly, but the attachment mechanism requires reconfiguration.

Teams must audit existing IRSA configurations and create corresponding task roles. Workloads that obtain AWS credentials through an annotated service account must instead run with the task role set on their task definition. Testing IAM permissions thoroughly before production cutover prevents access-related outages.
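The audit step can be partly scripted. The following Python sketch inspects a role's trust policy, reports which service account an IRSA role is bound to, and builds the trust policy an ECS task role needs. The function names and the example policy (account ID, OIDC provider ID) are placeholders, and only the common `StringEquals` condition on the `:sub` claim is recognized.

```python
def irsa_subject(trust_policy: dict):
    """Return (namespace, service_account) if the policy is an IRSA trust, else None."""
    for statement in trust_policy.get("Statement", []):
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        if "sts:AssumeRoleWithWebIdentity" not in actions:
            continue
        conditions = statement.get("Condition", {}).get("StringEquals", {})
        for key, value in conditions.items():
            if (key.endswith(":sub") and isinstance(value, str)
                    and value.startswith("system:serviceaccount:")):
                _, _, namespace, name = value.split(":", 3)
                return namespace, name
    return None


def ecs_task_trust_policy() -> dict:
    """Trust policy that lets ECS tasks assume a role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "ecs-tasks.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


# Placeholder IRSA trust policy used for illustration only.
EXAMPLE_IRSA_TRUST = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Federated": "arn:aws:iam::111122223333:oidc-provider/"
                                   "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE"},
        "Action": "sts:AssumeRoleWithWebIdentity",
        "Condition": {"StringEquals": {
            "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE:sub":
                "system:serviceaccount:default:web-app",
        }},
    }],
}
```

Running `irsa_subject` over every role's trust policy gives a list of service accounts that need a task role, and the permission policies attached to each role can then be reused on the new one.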

4. Pipeline Changes

CI/CD pipelines targeting Kubernetes use kubectl or Helm for deployments. ECS deployments use AWS CLI commands or AWS-native deployment tools.

```
# Kubernetes deployment
kubectl apply -f deployment.yaml

# ECS deployment
aws ecs update-service --cluster my-cluster --service my-service --task-definition my-task:2
```

Pipeline scripts require rewriting using the AWS CLI. Teams using ArgoCD or Flux for GitOps must implement alternatives, typically CodePipeline, CodeDeploy, or custom scripts triggering ECS API calls.

Rollback procedures are also impacted. Kubernetes rollbacks use kubectl rollout commands or GitOps reconciliation, while ECS rollbacks require updating services to reference previous task definition revisions, which demands different automation scripts and runbooks.
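A rollback helper for a pipeline might look like the Python sketch below. It derives the previous revision from the current task definition reference and builds the same `aws ecs update-service` call shown earlier. The function names are hypothetical, and the sketch assumes the previous revision is simply the current number minus one and is still registered, which is not guaranteed if revisions have been deregistered.

```python
def previous_revision(task_definition: str) -> str:
    """Return "family:N-1" for a "family:N" task definition reference."""
    family, sep, revision = task_definition.rpartition(":")
    if not sep or not revision.isdigit():
        raise ValueError(f"expected family:revision, got {task_definition!r}")
    if int(revision) <= 1:
        raise ValueError("no earlier revision to roll back to")
    return f"{family}:{int(revision) - 1}"


def rollback_command(cluster: str, service: str, current: str) -> list:
    """Build the AWS CLI arguments that point the service at the prior revision."""
    return [
        "aws", "ecs", "update-service",
        "--cluster", cluster,
        "--service", service,
        "--task-definition", previous_revision(current),
    ]
```

A pipeline step could pass the result to `subprocess.run(..., check=True)`. Returning the argument list instead of executing it keeps the helper easy to test and to log before anything changes in production.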

The Hidden Risks of Downgrading

Migration solves an operational problem by changing infrastructure, and this approach carries strategic risks that may not be apparent during the migration decision.

1. Trading Standard Skills for Proprietary Ones

Kubernetes skills transfer across cloud providers and employers, as engineers experienced with EKS can work effectively on GKE, AKS, or self-managed clusters. The ecosystem knowledge applies broadly and is cloud-agnostic.

ECS expertise, by contrast, applies only within AWS. Engineers learn AWS-specific APIs, tooling, and patterns that don't transfer, so organizations end up investing in skills with limited applicability.

This matters for hiring and retention. Because the Kubernetes talent pool exceeds the ECS-specific pool, teams may find recruiting more difficult after migration.

2. Creating Future Migration Debt

Applications configured for ECS require significant work to move elsewhere. If business requirements eventually demand multi-cloud deployment, GCP expansion, or exit from AWS, the ECS-specific configurations become technical debt.

Kubernetes configurations would transfer with modest adaptation, while ECS configurations require complete reimplementation. The migration effort invested now may need repeating later under less favorable circumstances.

3. Losing Ecosystem Velocity

The Kubernetes ecosystem evolves rapidly. New tools, operators, and integrations appear continuously, and teams on Kubernetes benefit from that community innovation as it ships.

ECS improvements depend entirely on AWS development priorities. The pace of innovation is slower, and the direction aligns with AWS strategy rather than broader industry needs.

Qovery: The Third Way

The migration impulse often stems from a correct diagnosis: the operational burden of EKS is too high. The mistake is concluding that ECS is the only way to reduce it.

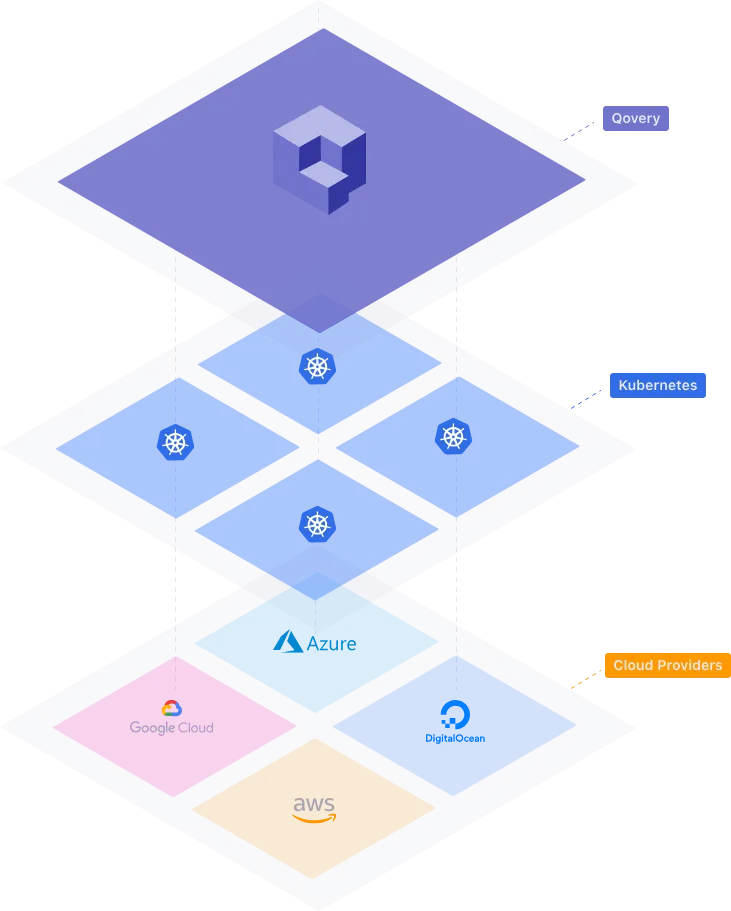

Qovery is a Kubernetes management platform that provides a third option: achieving the simplicity of ECS without sacrificing the power and ecosystem of EKS.

1. You Don't Hate EKS, You Hate Managing EKS

The frustration driving migration isn't with Kubernetes capabilities. It's with the operational toil of maintaining clusters, managing upgrades, and configuring networking. ECS is attractive because it removes this toil, not because it provides better container orchestration.

Qovery removes the same toil while preserving EKS underneath. The platform handles version upgrades, networking configuration, and Day 2 operations automatically, while teams get the simplified experience they seek, all without downgrading infrastructure.

2. Abstraction Without Complexity

Qovery provides the user-friendly interface developers love, but deploys to your existing EKS cluster. Developers interact with a clean web interface or CLI rather than writing Kubernetes YAML or running kubectl commands.

The platform abstracts Kubernetes complexity without hiding it. Teams deploy applications through Git push or simple UI selections. Behind the scenes, Qovery generates the necessary Kubernetes resources, manages ingress configuration, and handles service discovery. The complexity exists but engineers don't interact with it unless they choose to.

This abstraction layer delivers the ECS-like experience that teams seek while preserving access to the full Kubernetes ecosystem. The abstraction simplifies common operations without limiting advanced use cases.

3. No Downgrade Needed

Existing EKS clusters can connect to Qovery directly. Teams preserve their current infrastructure while gaining operational simplicity by adding the Qovery abstraction.

Helm charts, existing configurations, and Kubernetes expertise remain valuable. The platform adds a management layer without requiring infrastructure replacement. The open standards and portability that made Kubernetes attractive stay intact.

Teams avoid the weeks of migration: no manifest conversion, networking reconfiguration, or pipeline rewrites. The complexity disappears without the effort of switching platforms.

4. Developer Autonomy

Developers gain self-service capabilities without needing Kubernetes knowledge. Ephemeral environments spin up for every pull request automatically, deployments trigger from Git without pipeline configuration, and rollbacks execute through the interface rather than kubectl commands.

This autonomy resembles what ECS promises, but it is delivered on EKS infrastructure. Developers move fast while platform teams retain control over underlying Kubernetes clusters. The developer experience improves without sacrificing the infrastructure flexibility that enterprises require.

Conclusion

Moving from EKS to ECS solves an immediate operational headache by accepting long-term strategic debt. While ECS offers genuine simplicity for basic, single-cloud applications, the costs are high: vendor lock-in, a stagnant ecosystem, and lost engineering flexibility.

You don’t have to downgrade your infrastructure to find peace of mind. Before you commit weeks to rewriting your manifests and pipelines for ECS, try a "third way." Qovery gives you the developer experience and simplicity of ECS while keeping the power and portability of EKS. Stop the toil and keep the tech - try Qovery for free today.
